Rui Shu

@_smileyball

I draw smileyball (http://instagram.com/smiley._.ball). Calculating lower bounds @OpenAI. Previously calculating PhD salary lower bounds @Stanford.

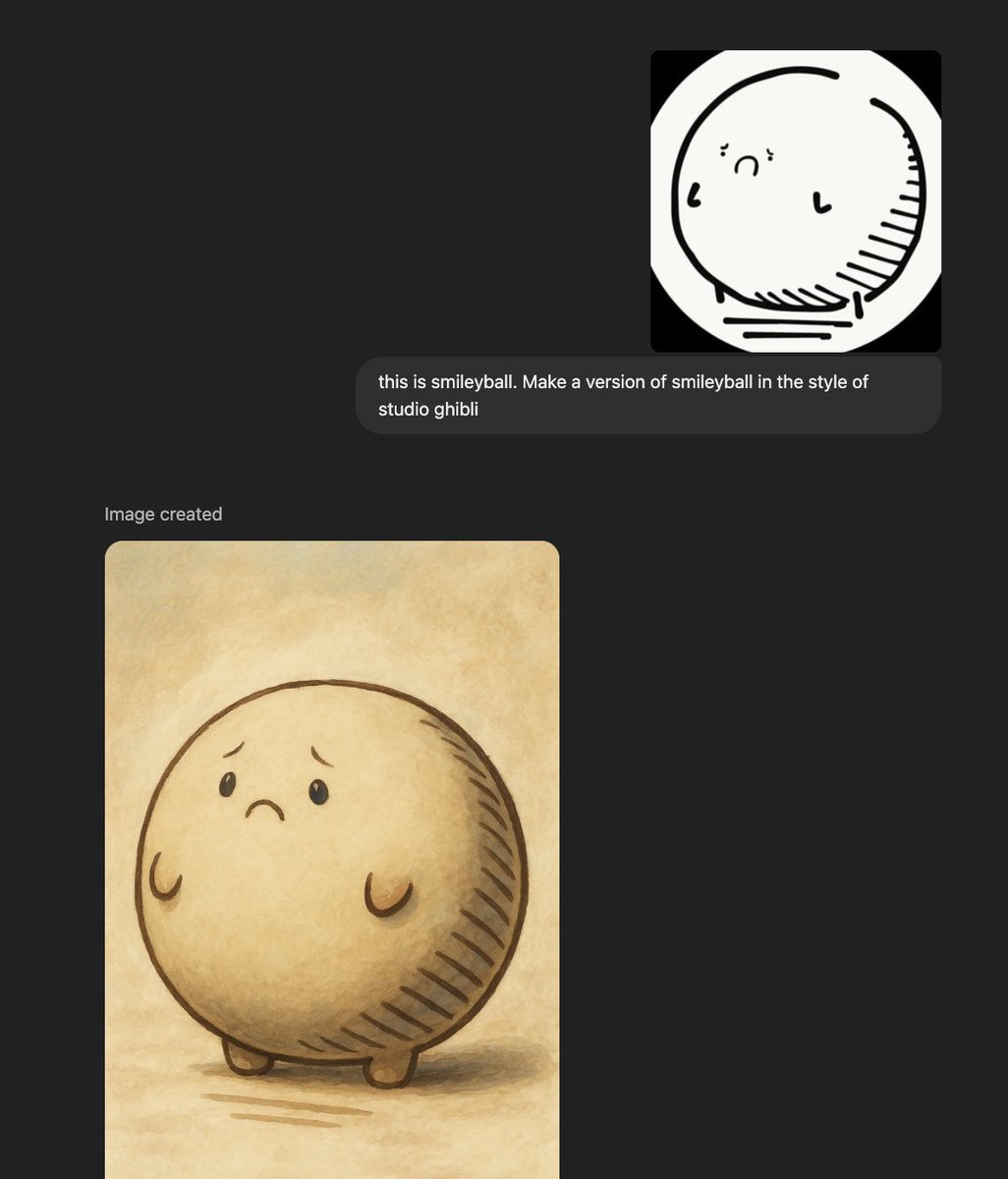

The past couple weeks at OAI have been extremely difficult for me. Time and time again I've had to argue with people about the "right" way to pronounce Ghibli. It's a "J" and this is a hill I'm willing to die on.

I've a lot of complicated feelings about the interplay of AI and art. But I have to admit, this LLM output brought me some joy.

Hard to imagine that a decade ago I was generating MNIST digits with VAEs/GANs and marveling at how pretty they looked.

A surprising amount of my LLM usage is just copying technical Slack messages into the model and asking it to walk me through wtf is going on

Hypothesis generation is an underappreciated use case of AI.

I have a hard time describing the real value of consumer AI because it’s less some grand thing around AI agents or anything and more AI saving humans an hour of work on some random task, millions of times a day.

I like that when you refresh @LumaLabsAI's website, the contribution list is randomly permuted c:

At CMU graduation, and the way the graduates are wearing their caps, with the pointy part in front vs. back, can be simulated with an Ising model

Thanks to the ICLR Award Committee! And thank you for the kind words, Max! You were the perfect Ph.D. advisor and collaborator, kind and inspiring. I really couldn't have wished for better.

Thank you Yisong and the Award Committee for choosing the VAE for the Test of Time award. I'd like to congratulate Durk, who was my first (brilliant) student when I moved back to the Netherlands and who is the main architect of the VAE. It was absolutely fantastic working with him.

VAE is never the answer, but it's frequently answer-adjacent and everyone should learn about it!

And... the first-ever ICLR test-of-time award goes to "Auto-Encoding Variational Bayes" by Kingma & Welling. Runner-up: "Intriguing properties of neural networks", Szegedy et al. The awards will be presented at 2:15pm tomorrow; there will be retrospective talks. Please attend!

Writing LLM experiment launch scripts is lowkey a full-time job

Most under-appreciated: It tricks DL researchers into learning about PGMs

What are, according to you, the most interesting or most under-appreciated applications of VAEs? Please share below. Links appreciated. (I'm building a list for future reference.)

Bets are basically experiment preregistration

In AI research there is tremendous value in intuitions on what makes things work. In fact, this skill is what makes “yolo runs” successful, and can accelerate your team tremendously. However, there’s no track record on how good someone’s intuition is. A fun way to do this is…

Time to transition my jobs to this gpu

First @NVIDIA DGX H200 in the world, hand-delivered to OpenAI and dedicated by Jensen "to advance AI, computing, and humanity":

I use YYMMDD for all my experiment names bc I don't think I'll be around for the turn of the next century, let alone feel the need to revisit my old wandb runs if I somehow live that long

Zero-padded YYYY/MM/DD is the best date format

"We're never gonna change how they do arithmetic to make it more like humans, that would be going backwards" Oh the irony

Interesting, but I don't necessarily agree! See this clip of Feynman from 1985, with the exact opposite characterization. He frames image recognition as far away, because of difficulties in encoding the relevant invariances, but chess etc. as approachable. youtube.com/watch?v=ipRvjS…