Andrew Gordon Wilson

@andrewgwils

Machine Learning Professor

My new paper "Deep Learning is Not So Mysterious or Different": arxiv.org/abs/2503.02113. Generalization behaviours in deep learning can be intuitively understood through a notion of soft inductive biases, and formally characterized with countable hypothesis bounds! 1/12

I was recently called “elderly” so I’m thinking of making this my new homepage photo in true academic fashion.

🌞🌞🌞 The third Structured Probabilistic Inference and Generative Modeling (SPIGM) workshop is **back** this year with @NeurIPSConf at San Diego! In the era of foundation models, we focus on a natural question: is probabilistic inference still relevant? #NeurIPS2025

Love having unstructured time. Why can’t the whole year be summer?

I had a great time presenting "It's Time to Say Goodbye to Hard Constraints" at the Flatiron Institute. In this talk, I describe a philosophy for model construction. Video now online! youtube.com/watch?v=LxuNC3…

I don't know why this whole ferry smells like pistachio and rosewater, but I approve.

Something I noticed at ICML: for the most part, VCs are strikingly disinterested in the technical content of the conference, and the discipline at large. You could be describing what obsoletes the transformer, and they couldn’t care less.

Which is a greater intellectual achievement for a human, defeating the world chess champion, or getting gold in the IMO? Because a computer did one of these things in 1997. (It's a rhetorical question).

Come see how to shrink protein sequences with diffusion at our talk tomorrow morning!!!

Best Paper Award Winners: @setlur_amrith, @AlanNawzadAmin, @wanqiao_xu, @EvZisselman

🚨 Join us tomorrow at the 1st ICML Workshop on Foundation Models for Structured Data! 📅 July 18 | ⏰ 9 AM - 5 PM 📍 West Ballroom D 🎉 70 accepted papers 🎤 8 spotlight orals 🌟 6 invited talks from top experts in tabular & timeseries data! #ICML2025 #FMSD

Excited to be presenting my paper "Deep Learning is Not So Mysterious or Different" tomorrow at ICML, 11 am - 1:30 pm, East Exhibition Hall A-B, E-500. I made a little video overview as part of the ICML process (viewable from Chrome): recorder-v3.slideslive.com/#/share?share=…

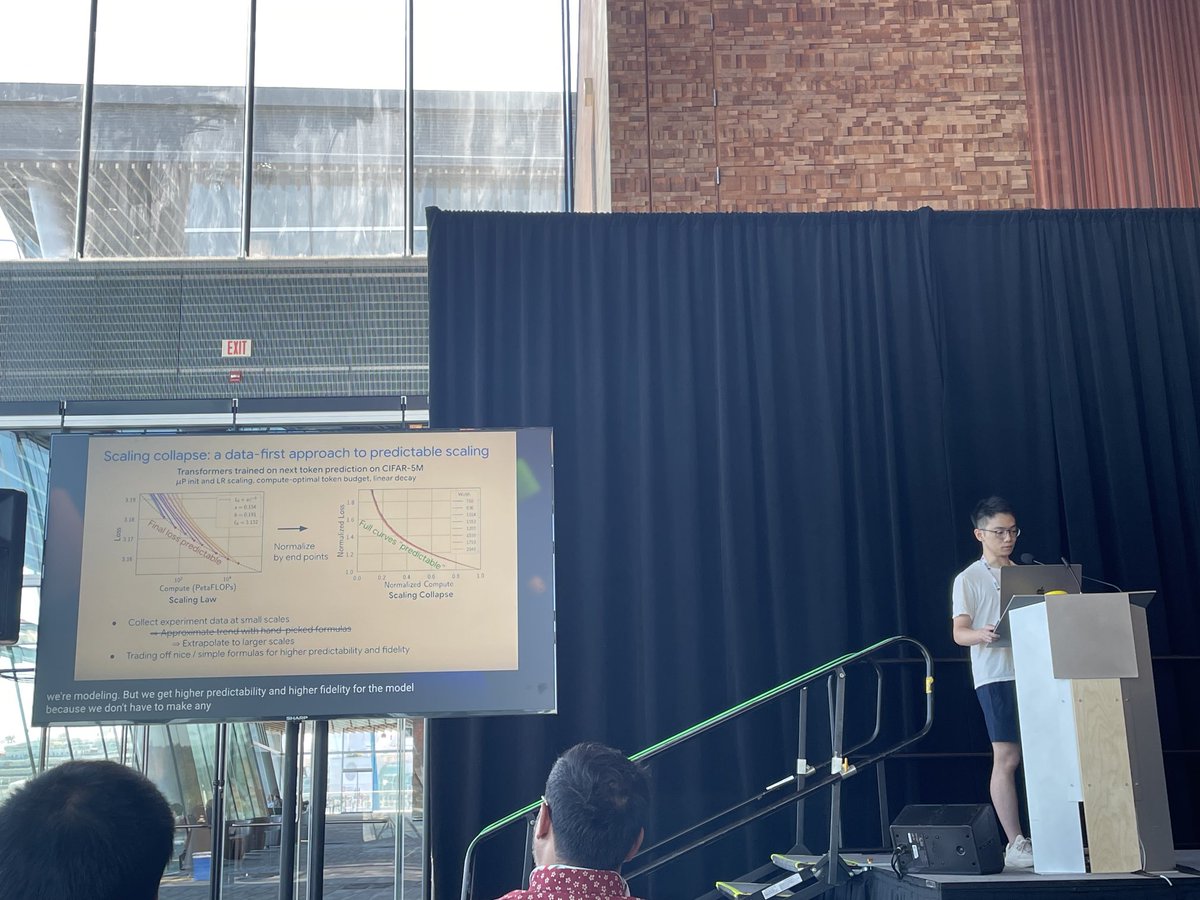

Great talk from @ShikaiQiu on scaling collapse, which can be used as a sensitive diagnostic for model specification (LR, etc) at small scale that transfers to large scales! arxiv.org/abs/2507.02119

PSA: this poster on OOD detection has been *rescheduled* to Wednesday, 11 am - 1:30 pm, West Exhibition Hall B2-B3 W-303, due to some travel issues.

Check out @yucenlily's ICML poster tomorrow 11 am - 1:30 pm, East Exhibition Hall A-B # E-603, on "Out-of-Distribution Detection Methods Answer the Wrong Questions"!

In our new ICML paper, we show that popular families of OOD detection procedures, such as feature and logit based methods, are fundamentally misspecified, answering a different question than “is this point from a different distribution?” arxiv.org/abs/2507.01831 [1/7]

Come see @ShikaiQiu's oral presentation on our ICML scaling collapse paper tomorrow at 10:30 am PT, or poster at 11 am! arxiv.org/abs/2507.02119

Our new paper discovers scaling collapse: through a simple affine transformation, whole training loss curves across model sizes with optimally scaled hyperparameters collapse to a single universal curve! We explain the collapse, which provides a diagnostic for model scaling. 1/2

“Do We Really Have a Mechanistic Understanding of How Flatness Can Lead to Better Generalization?” Well, yes, yes we do. “Is Bigger Always Better?” Yes.

If a paper title has a question the answer is almost always no. It would be amusing to see a series of papers breaking this trend. World, make it happen?
