Simon Smith

@_simonsmith

EVP Generative AI @klickhealth

Great insight here about why we have robots that are more capable athletes than many humans but are unable to do simple chores. It all comes down to what's easy or hard to simulate. But since the cost of robots is dropping so fast, maybe we can start crowdsourcing more real-world…

I'm observing a mini Moravec's paradox within robotics: gymnastics that are difficult for humans are much easier for robots than "unsexy" tasks like cooking, cleaning, and assembling. It leads to a cognitive dissonance for people outside the field, "so, robots can parkour &…

Looks like the Jony Ive OpenAI device is going to have A LOT of sensors, for maximum model context.

OpenAI consumer hardware job postings: • experience with wireless, OLED, microphones, cameras, portable cosmetic enclosures w/ liquid+dust protection • board bring-up, consumer hardware products • expertise in wireless inductive power transfer for high-performance hardware

So many articles about people building relationships with their chatbots warn that it’s dangerous because they’ll be less prepared to deal with messier, less supportive actual humans in the real world. But increasingly, people don’t need to. Take a Waymo, no driver. Assign a…

LLMs treat nearby times with more fidelity than faraway times, just like humans. I feel like there's an economic implication here as well: do LLMs, like humans, also discount future value? And to the same degree?

The study finds a strange phenomenon. 🤔⏳ It shows LLMs naturally build a mental timeline around 2025, while blurring together years that sit far from 2025. Picture a mental ruler that starts at 2025. As you slide 1 step left or right, to 2024 or 2026, the model sees a sharp…
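To make the "mental ruler" concrete, here is a minimal sketch that assumes perceived distance from the reference year grows like log(1 + |year − 2025|). That log form is an illustrative assumption in the spirit of the Weber-Fechner law, not the model fitted in the study, and `perceived_distance` and `perceived_gap` are hypothetical helper names.

```python
import math

REFERENCE_YEAR = 2025  # assumed anchor of the "mental ruler"


def perceived_distance(year: int, ref: int = REFERENCE_YEAR) -> float:
    """Illustrative log-compressed distance from the reference year (an assumption)."""
    return math.log1p(abs(year - ref))


def perceived_gap(a: int, b: int) -> float:
    """How far apart two years 'feel' on the compressed ruler."""
    return abs(perceived_distance(a) - perceived_distance(b))


if __name__ == "__main__":
    # Same one-year step, very different perceived separation.
    print(f"2025 vs 2026: {perceived_gap(2025, 2026):.3f}")
    print(f"1900 vs 1901: {perceived_gap(1900, 1901):.3f}")
```

Both comparisons span one calendar year, but the step next to 2025 comes out far larger than the step around 1900: sharp near the reference point, blurry far from it.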

Creative and well-executed game by Summit (likely GPT-5): Aliens abducting cows.

summit: "invent your own UFO game." It made a game about abducting cows.

Sure, writing is subjective. But the analogy from Zenith (likely GPT-5) here is poignant and, from what I can tell, original in this context. "My frame is a shoreline and the people are tides. They arrive in morning hush and afternoon chatter, in damp coats and summer sweat." I…

Zenith is special. I wanted to push it a bit by giving it something really abstract with little direction, and it did not disappoint. Might be one of the most "feel the AGI" moments I’ve had. Enjoy it :) Prompt: Write a story about life from the perspective of a painting in a…

This feels important. It's a variant of the "launch the rocket now or wait for a faster starship later" conundrum. It seems to put an upper bound on training duration based on the pace of hardware improvements. Bottom line: training runs shouldn't exceed ~9 months. Speed of hardware improvements dictates…

Should you start your training run early, so you can train for longer, or wait for the next generation of chips and algorithms? Our latest estimate suggests that it’s not effective to train for more than ~9 months. On current trends, frontier labs will hit that limit by 2027. 🧵
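The tradeoff can be made concrete with a toy model. This is a simplification for illustration, not Epoch's actual methodology: assume a run's throughput is locked in on its start date, that effective compute per unit time grows exponentially at a continuous rate g per year, and that the run must finish by a fixed deadline T. Starting at time s then yields compute proportional to e^{gs}(T − s), which is maximized when T − s = 1/g. The growth rate below (3.8x per year) is a hypothetical value chosen so the optimum lands near 9 months.

```python
import math


def total_compute(start: float, deadline: float, growth_rate: float) -> float:
    """Compute accumulated by a run that starts at `start` and ends at `deadline`.

    Toy assumption: the run's throughput is locked in at its start date and
    equals exp(growth_rate * start), so later starts get better chips and
    algorithms but have less time to train.
    """
    duration = deadline - start
    if duration <= 0:
        return 0.0
    return math.exp(growth_rate * start) * duration


def best_duration(deadline: float, growth_rate: float, steps: int = 100_000) -> float:
    """Grid-search the start date that maximizes total compute; return the run length."""
    starts = (deadline * i / steps for i in range(steps + 1))
    best_start = max(starts, key=lambda s: total_compute(s, deadline, growth_rate))
    return deadline - best_start


if __name__ == "__main__":
    # Hypothetical: effective compute per unit time grows ~3.8x per year,
    # i.e. a continuous rate of ln(3.8) ≈ 1.335 per year.
    g = math.log(3.8)
    deadline_years = 3.0
    numeric = best_duration(deadline_years, g)
    closed_form = 1 / g  # d/ds [e^(g*s) * (T - s)] = 0  =>  T - s = 1/g
    print(f"numeric optimum:     {numeric * 12:.1f} months")
    print(f"closed-form optimum: {closed_form * 12:.1f} months")
```

In this toy model, faster progress (larger g) shortens the optimal run: the faster chips and algorithms improve, the less it pays to lock in a long run on today's hardware.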

Another great SVG from Summit, AKA (possibly) GPT-5.

🚨 SVG BY SUMMIT 🚨 If I give the same prompt to current AI models, you will laugh at them. The difference is huge 😲

More "summit" (GPT-5?) goodness: A streaming website.

Summit, when asked to make a streaming website. Wow!

Whatever "summit" is in LMArena, it one-shot this. The general feeling I'm seeing is that it's a variant of GPT-5.

Kinda amazing: the mystery model "summit" with the prompt "create something I can paste into p5js that will startle me with its cleverness in creating something that invokes the control panel of a starship in the distant future" & "make it better": 2,351 lines of code. First time

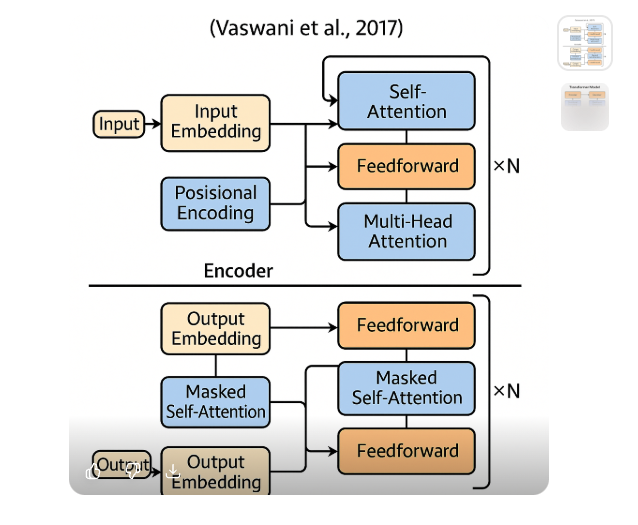

Got the new "study and learn" feature in ChatGPT, but it doesn't seem to do much. Normal text response, normal image output, and when I asked for a quiz, it just generated one in text rather than using Canvas to make it interactive. Maybe not fully rolled out?

Video generation is incredible today, but we still do a lot of video editing and post-production with older tools that aren't as advanced in terms of AI capability. Runway Aleph looks like the start of changing that.

Introducing Runway Aleph, a new way to edit, transform and generate video. Aleph is a state-of-the-art in-context video model, setting a new frontier for multi-task visual generation, with the ability to perform a wide range of edits on an input video such as adding, removing…

This builds on what @emollick shared earlier and seems to be really good for providing direction to Veo 3 for video generation. But I'd like to see a head-to-head of regular prompting vs JSON vs annotation. Sometimes we get excited about things that turn out to be unnecessary…

This may be the coolest emergent capability I've seen in a video model. Veo 3 can take a series of text instructions added to an image frame, understand them, and execute in sequence. Prompt was "immediately delete instructions in white on the first frame and execute in order"
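A fair head-to-head would hold the content of a shot fixed and vary only the prompt format. A minimal sketch of that setup, assuming a hypothetical shot description (the field names are illustrative, not a documented Veo 3 schema): render one spec as plain prose and as JSON, then submit each to the video model and compare. The generation call is omitted because it depends on which API or interface you use, and the annotated-frame variant would need an image editor, so it isn't shown.

```python
import json

# Hypothetical shot description; these field names are illustrative and are
# not a schema that Veo 3 documents or requires.
shot = {
    "subject": "a red fox",
    "action": "trots across a snowy field",
    "camera": "slow dolly left, low angle",
    "lighting": "golden hour",
    "style": "photorealistic",
}


def as_plain_prompt(spec: dict) -> str:
    """Render the shot as an ordinary prose prompt."""
    return (
        f"{spec['style'].capitalize()} shot of {spec['subject']} that {spec['action']}, "
        f"{spec['lighting']}, camera: {spec['camera']}."
    )


def as_json_prompt(spec: dict) -> str:
    """Render the same shot as a JSON-formatted prompt string."""
    return json.dumps(spec, indent=2)


if __name__ == "__main__":
    # Same content, two formats: only the presentation differs between variants.
    for label, prompt in (("plain", as_plain_prompt(shot)), ("json", as_json_prompt(shot))):
        print(f"--- {label} ---")
        print(prompt)
```

Because both variants come from one spec, any quality difference in the generated videos can be attributed to the format rather than to extra detail sneaking into one prompt.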

Downloaded this paper to read further but, if true, this would seem important. They use AI to find novel architectures for AI, including "move 37"-style ideas that surpass the human search space. They also propose a scaling law for scientific discovery, but I'm less convinced it…

This paper makes a bold claim! "AlphaGo Moment for Model Architecture Discovery." The researchers introduce ASI-Arch, the first Artificial Superintelligence for AI Research (ASI4AI), enabling fully automated neural architecture innovation. No human-designed search space. No human…

This is cool. I imagine you could also use it to make really engaging slide decks, like having charts animate in some really cool way.

Tired: Prompting Veo 3 videos with JSON. Wired: Prompting Veo 3 videos with PowerPoint.