Sai Rajeswar

@RajeswarSai

Staff Research Scientist @ ServiceNow. Adjunct Professor @UMontreal and Core Member @mila_quebec. Prev research scientist intern @GoogleDeepMind.

I am now an associate member at @Mila_Quebec, and am looking to co-supervise a graduate student in 2025. Kindly apply if interested, and spread the word🔊!

📷 Meet our student community! Interested in joining Mila? Our annual supervision request process for admission in the fall of 2025 is starting on October 15, 2024. More information here mila.quebec/en/prospective…

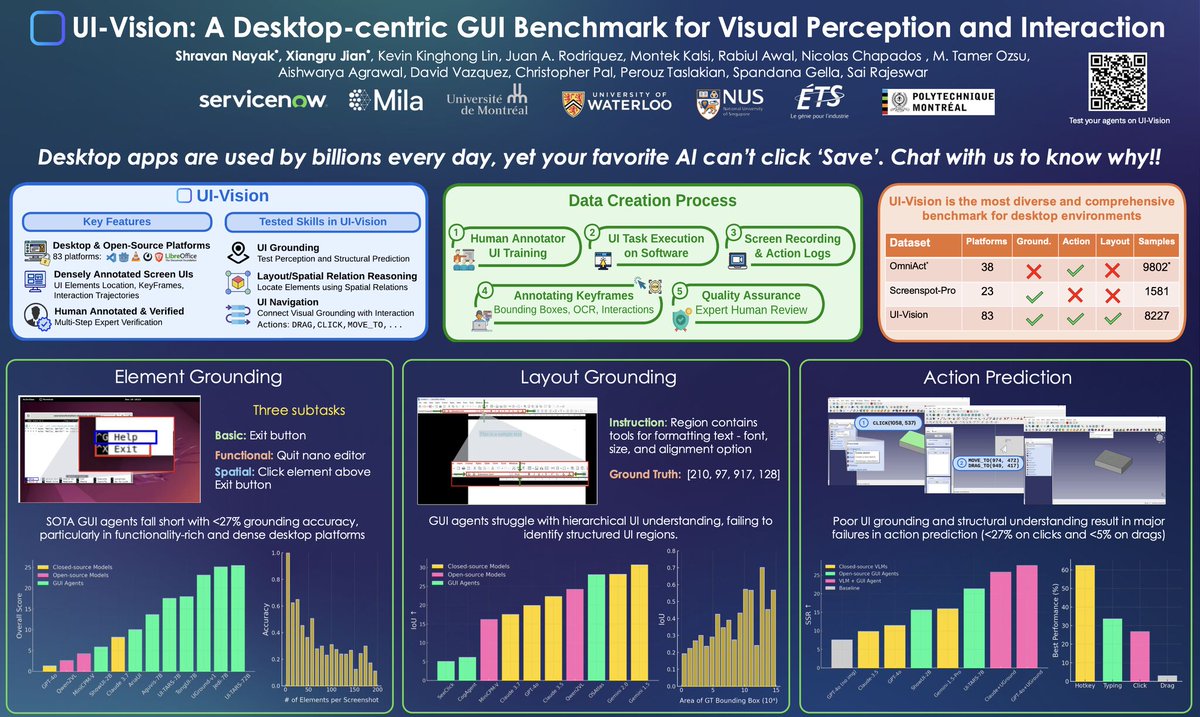

At ICML for the next few days presenting recent work! Hoping to reconnect. Get in touch if you are working on multimodal AI or post-training. 📍 Today 11am: West Exhibition Hall B2 (#W-400), presenting UI-Vision. 🧠 Also presenting at the Assessing World Models (18th) and Computer Use Agents workshops.

I am speaking at 10 am PT on a slightly different topic than I usually talk about 🙂: "Simple Ideas Can Have Mighty Effects: Don't Take LLM Fundamentals for Granted" Check out if you're around. #ICML2025

Join us at NewInML@ICML25 Workshop — today at 8:30 AM in rooms MR 211-214. Explore cutting-edge research, meet amazing new voices in ML, and be part of the community! 🌐 newinml.github.io #ICML2025 #ML #AI #NewInML

Gonna miss you, and super grateful for the opportunity to be part of the journey and to learn from you. 🫡 Looking forward to learning about what's next!

So long, @ServiceNowRSRCH! It's been a great 4 years. I look forward to cheering for more great open-source AI releases from the talented ServiceNow AI people! I will tell you what's next in due time 😉

Personalization methods for LLMs often rely on extensive user history. We introduce Curiosity-driven User-modeling Reward as Intrinsic Objective (CURIO) to encourage actively learning about the user within multi-turn dialogs. 📜 arxiv.org/abs/2504.03206 🌎 sites.google.com/cs.washington.…

If you are at #CVPR, make sure to catch @joanrod_ai and hear about scalable SVG generation straight from the source! 🚩Exhibition Hall D – Poster #31

StarVector poster happening now at CVPR! Come by poster #31 if you want to chat about vector graphics, image-to-code generation, or just say hi!

A timely and compelling read by @jxmnop: a much-needed call to focus our research efforts on deeper questions, riskier bets, and a bit of long-term impact. 💡#AIResearch

## The case for more ambition

i wrote about how AI researchers should ask bigger and simpler questions, and publish fewer papers:

New to ML research? Never published at ICML? Don't miss this! Check out the New in ML workshop at ICML 2025 — no rejections, detailed feedback, awards, and ICML tickets for selected authors. Deadline: June 10 (AoE) Submit: openreview.net/group?id=ICML.… Info: newinml.github.io

Congratulations to @Yoshua_Bengio on launching @LawZero_ — a research effort to advance safe-by-design AI, especially as frontier systems begin to exhibit signs of self-preservation and deceptive behaviour.

🎨🔁 RL closes the loop on inverse rendering! By letting a VLM see its own SVG renderings, we push sketch-to-vector generation to near-perfect fidelity and code compactness. Congrats to @joanrod_ai, who is rolling out, one reward at a time. Please read our latest preprint! 👇

Thanks @_akhaliq for sharing our work! Excited to present our next generation of SVG models, now using Reinforcement Learning from Rendering Feedback (RLRF). 🧠 We think we cracked SVG generalization with this one. Go read the paper! arxiv.org/abs/2505.20793 More details on…

Do current large multimodal models really “understand” the structure behind a complex sketch? 🌟 StarFlow converts hand-drawn workflow diagrams into executable JSON flows, testing whether VLMs truly grasp the underlying structure. #multimodalA @patricebechard @PerouzT

🚀 New paper from our team at @ServiceNowRSRCH! 💫 StarFlow: Generating Structured Workflow Outputs From Sketch Images. We use VLMs to turn hand-drawn sketches and diagrams into executable workflows.…

The secret sauce lies in amplifying quality, coverage, and curation. If someone downplays data’s impact, they are perhaps overlooking where most of the real gains still come from! 🔥

Nobody wants to hear it, but working on data is more impactful than working on methods or architectures.

🧐How can we teach Multimodal models or Agents “When to Think” like humans? 👉Check Out: Think-or-Not (TON) 🔥Selective Reasoning via Reinforcement Learning for Vision-Language Models arXiv: arxiv.org/pdf/2505.16854 code: github.com/kokolerk/TON We introduce “thought dropout”…

Congrats @TianbaoX and team on this exciting work and release! 🎉 We’re happy to share that Jedi-7B performs on par with the UI-TARS-72B agent on our challenging UI-Vision benchmark, with 10x fewer parameters! 👏 Incredible 🤗 Dataset: huggingface.co/datasets/Servi… 🌐 uivision.github.io

Scaling Computer-Use Grounding via User Interface Decomposition and Synthesis

did you know people have been training neural networks on text since 2003? everyone talks about Attention Is All You Need. but this is the real paper that got our field started. it was in 2003, in montreal. i read it, and it was even more forward-thinking than i expected:

The UI-Vision Benchmark is out on HuggingFace: huggingface.co/datasets/Servi… ✅Now accepted at ICML 2025. 🔥 Go test your UI Agents on the benchmark!

🚀 Excited to share that UI-Vision has been accepted at ICML 2025! 🎉 We have also released the UI-Vision grounding datasets. Test your agents on it now! 🚀 🤗 Dataset: huggingface.co/datasets/Servi… #ICML2025 #AI #DatasetRelease #Agents

Our team has released the UI-Vision benchmark (accepted at #ICML2025) for testing GUI agent visual grounding and action prediction! 🚀🚀🚀 🤗 Dataset: huggingface.co/datasets/Servi… Special thanks to the students who led this effort, @PShravannayak and @EdwardJian2 @ServiceNowRSRCH

🚀 Super excited to announce UI-Vision: the largest and most diverse desktop GUI benchmark for evaluating agents in real-world desktop GUIs in offline settings. 📄 Paper: arxiv.org/abs/2503.15661 🌐 Website: uivision.github.io 🧵 Key takeaways 👇

Still wondering who came up with the idea to sneak a banana mustache sample into Figure 1 of the paper; it cracks me up every time I see it. Iconic figure and good old research days 📜 cc: @aagrawalAA

10 years ago today :)

🚨🤯 Today Jensen Huang announced SLAM Lab's newest model on the @HelloKnowledge stage: Apriel‑Nemotron‑15B‑Thinker 🚨 A lean, mean reasoning machine punching way above its weight class 👊 Built by SLAM × NVIDIA. Smaller models, bigger impact. 🧵👇

The full report for Llama-Nemotron Nano, Super, and Ultra is out 📄 — covering reasoning SFT, large-scale RL, and comprehensive evaluations. One of the most complete open releases for reasoning models 🚀 arxiv.org/pdf/2505.00949…

The Llama-Nemotron-v1 technical report is now available on arXiv: arxiv.org/pdf/2505.00949…