jxmo

@jxmnop

language models & information theory // research @meta @cornell wondering how we got here and what comes next

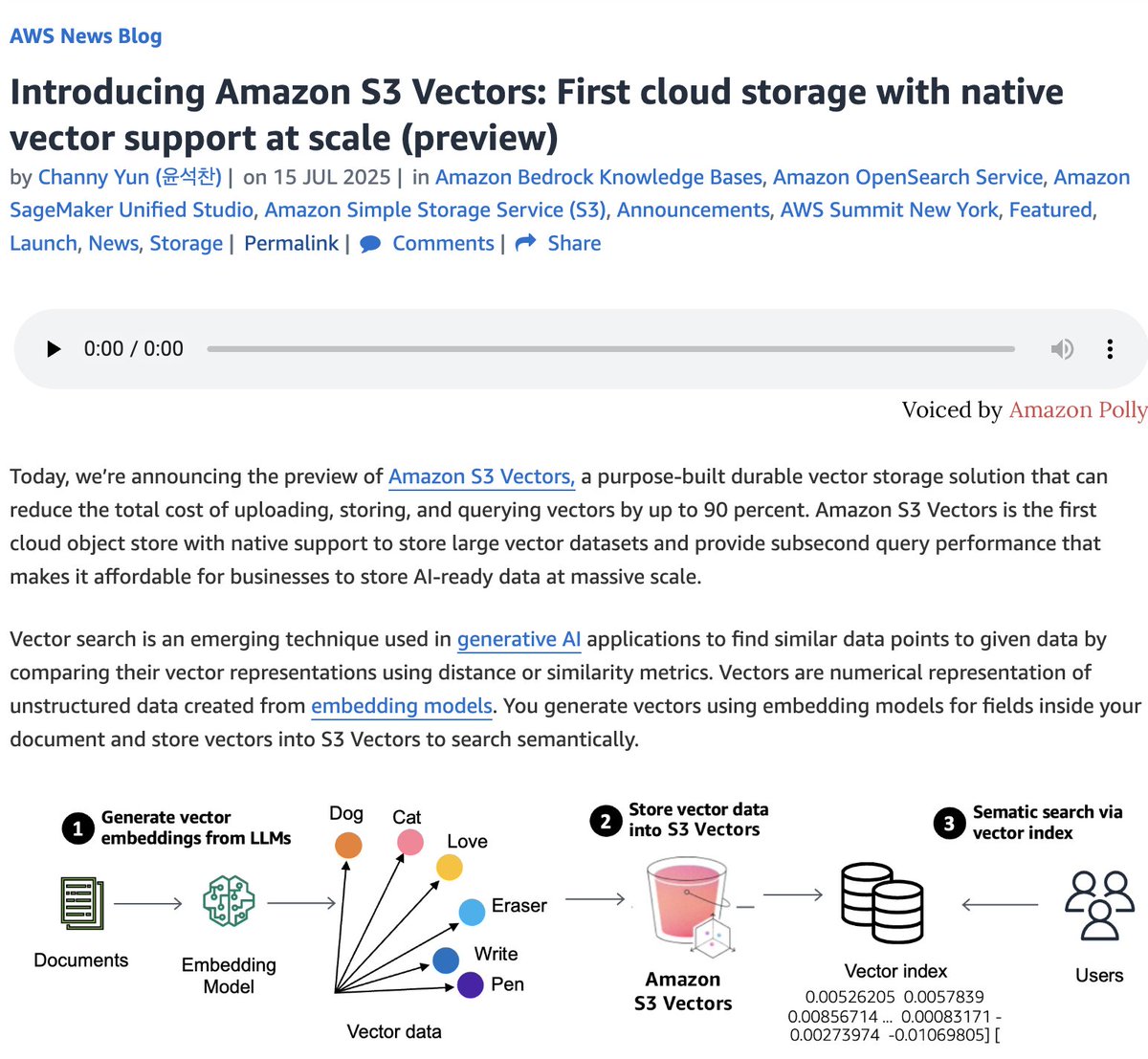

new blog post "There Are No New Ideas In AI.... Only New Datasets" in which i summarize LLMs in exactly four breakthroughs and explain why it was really *data* all along that mattered... not algorithms

A new type of information theory: this paper is not super well-known but has changed my opinion of how deep learning works more than almost anything else. it says that we should measure the amount of information available in some representation based on how *extractable* it is,…

I am just posting this to encourage people to be active! I started posting about 8 months ago; before that I was like 90% of people on Twitter, just viewing, not contributing. But because of my posting I was indirectly able to meet with Jack Morris, a super awesome guy. @jxmnop…

now AI can write novel proofs at the level of a world-class competitive mathematician, but it still can’t reliably book me a weekend trip to boston. so strange

we achieved gold-medal-level performance on the 2025 IMO competition with a general-purpose reasoning system! to emphasize, this is an LLM doing math and not a specific formal math system; it is part of our main push towards general intelligence. when we first started openai,…

again, the AI labs are obsessed with building reasoning-native language models when they need to be building *memory-native* language models

- this is possible (the techniques exist)
- no one has done it yet (no popular LLM has a built-in memory module)
- door = wide open

i feel an intense pang of nostalgia thinking about how i will never have to write an immense amount of repetitive code to eg build a website ever again. there was a quiet blue-collar satisfaction to this type of work, knowing that if you don’t stay up all night smacking keys the…

New blog post about asymmetry of verification and "verifier's law": jasonwei.net/blog/asymmetry… Asymmetry of verification, the idea that some tasks are much easier to verify than to solve, is becoming an important idea now that we have RL that finally works generally. Great examples of…
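One textbook illustration of that asymmetry (our own example, not necessarily one used in the linked post): checking a claimed factorization of n takes a single multiplication, while finding a factor by trial division can take on the order of √n divisions. A minimal sketch; the function names `verify_factorization` and `find_factor` are hypothetical.

```python
import math
from typing import Optional


def verify_factorization(n: int, p: int, q: int) -> bool:
    # Verifying: one multiplication plus two bounds checks, regardless of how big n is.
    return 1 < p < n and 1 < q < n and p * q == n


def find_factor(n: int) -> Optional[int]:
    # Solving (hypothetical naive solver): trial division up to isqrt(n),
    # i.e. roughly sqrt(n) steps in the worst case.
    for d in range(2, math.isqrt(n) + 1):
        if n % d == 0:
            return d
    return None  # no nontrivial factor found, so n is prime (for n >= 2)


n = 101 * 103
print(verify_factorization(n, 101, 103))
print(find_factor(n))
```

The gap is what makes a task a good RL target under this framing: a cheap, reliable checker can score many expensive attempts.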

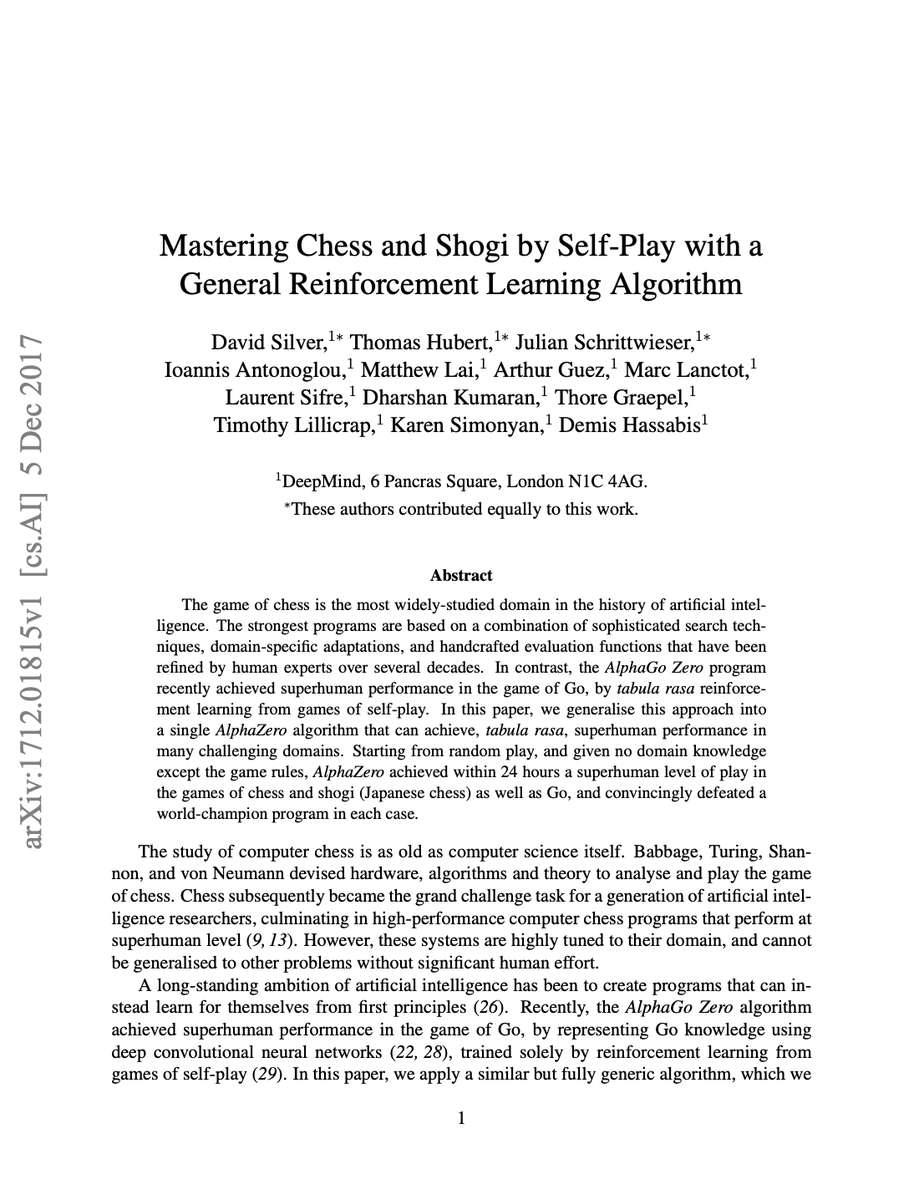

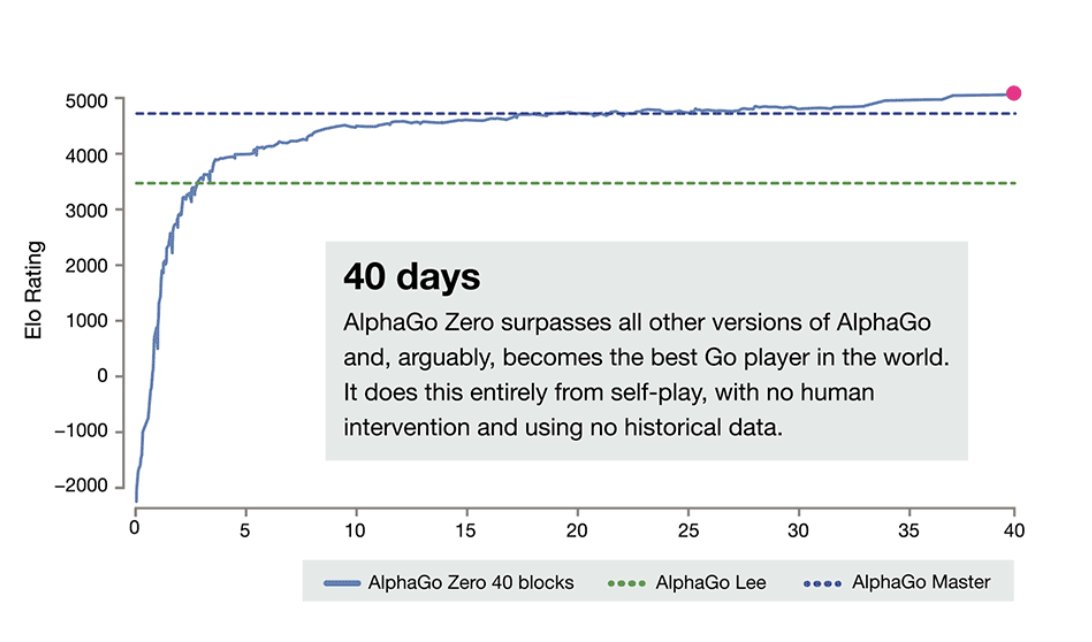

pretty crazy that DeepMind got self-play working with AlphaZero in *2017*, yet basically no one has been able to make it work since

today’s LLMs have reduced the cost of mediocrity to next-to-nothing. unfortunately, the cost of greatness remains as high as it’s ever been

when i first learned Machine Learning, our professor drilled into us that every ML problem starts by splitting data into train, test, and validation. these days there is just train and test. in many cases there is just train and more train. where’d all the validation sets go?
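For reference, the classic three-way split: fit on train, make choices (hyperparameters, early stopping, model selection) on validation, and touch test once at the end. A minimal sketch, assuming an in-memory dataset and a seeded random shuffle; `train_val_test_split` is a hypothetical helper and the 80/10/10 fractions are illustrative defaults, not anything from the post.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def train_val_test_split(
    data: Sequence[T], val_frac: float = 0.1, test_frac: float = 0.1, seed: int = 0
) -> Tuple[List[T], List[T], List[T]]:
    # Shuffle a copy with a seeded RNG so the split is reproducible
    # and does not depend on the original ordering of the data.
    items = list(data)
    random.Random(seed).shuffle(items)
    n_test = int(len(items) * test_frac)
    n_val = int(len(items) * val_frac)
    # Three disjoint slices: test first, then validation, the rest is train.
    test = items[:n_test]
    val = items[n_test:n_test + n_val]
    train = items[n_test + n_val:]
    return train, val, test


train, val, test = train_val_test_split(range(100))
print(len(train), len(val), len(test))
```

Keeping validation separate from test is the point: once test scores start steering decisions, the test set quietly becomes a second validation set.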

Really excellent article by @jxmnop! I especially found this thought-provoking, as someone who hasn’t been as deeply immersed in the frontier of reasoning LLMs of late.

new blog: How to scale RL to 10^26 FLOPs. everyone is trying to figure out the right way to scale reasoning with RL. ilya compared the Internet to fossil fuel: it may be the only useful data we have, and it's expendable. perhaps we should learn to reason from The Internet (not…

weights || activations: one day we will be able to perfectly decode either one back to plaintext

a nice trend over the last year is that folks in AI have finally produced a few libraries with the right abstractions. finally our code can be both hackable and fast, not just one or the other. this never used to happen. vLLM, sglang, verl... this is the dawn of Good Software in AI

this book taught me more about real design thinking than my 2-year industrial design master’s program. it rewired how i saw doors, buttons, systems, everything. now i build digital interfaces, and it still shapes how i think. one of the most high-signal books i’ve ever read.