Juan Pablo Zuluaga

@Pablogomez3

Agigo AG | PhD @IDIAP_ch + @EPFL. Prev Apple+Amazon. ASR+NLP+ST | 🇨🇴🇨🇭 | ☕️

🚨 🔔 Paper alert in Efficient Streaming ASR 🔔🚨 I'm super excited to release our paper “XLSR-Transducer: Streaming ASR for Self-Supervised Pretrained Models” abs: arxiv.org/abs/2407.04439 [1/n]

Coming soon! Autumn reads :) #Gabo #HectorAbad #IsabelAllende

🚀 GSPO: Group Sequence Policy Optimization — a breakthrough RL algorithm for scaling LMs! 🔹 Sequence-level optimization — theoretically sound & matching reward 🔹 Rock-solid stability for large MoE models — no collapse 🔹 No hacks like Routing Replay — simpler, cleaner…

✅ Try out @Alibaba_Qwen 3 Coder on vLLM nightly with "qwen3_coder" tool call parser! Additionally, vLLM offers expert parallelism so you can run this model in flexible configurations where it fits.

>>> Qwen3-Coder is here! ✅ We’re releasing Qwen3-Coder-480B-A35B-Instruct, our most powerful open agentic code model to date. This 480B-parameter Mixture-of-Experts model (35B active) natively supports 256K context and scales to 1M context with extrapolation. It achieves…

Something better than traveling to Colombia to get a really nice coffee with buñuelos and pan de Bonos? 😭🫣 🇨🇴

New research from Exo done (in part) with MLX on Apple silicon: An algorithm for distributed training that leverages higher RAM capacity of Apple silicon relative to FLOPs and inter-machine bandwidth.

Paper is out. Link: openreview.net/pdf?id=TJjP8d5…

Our Team in Zurich has a job opening for Senior Front-End Engineer! We are working on a cool stuff related to #ASR, #TTS, #LLMs and more! linkedin.com/jobs/view/4264…

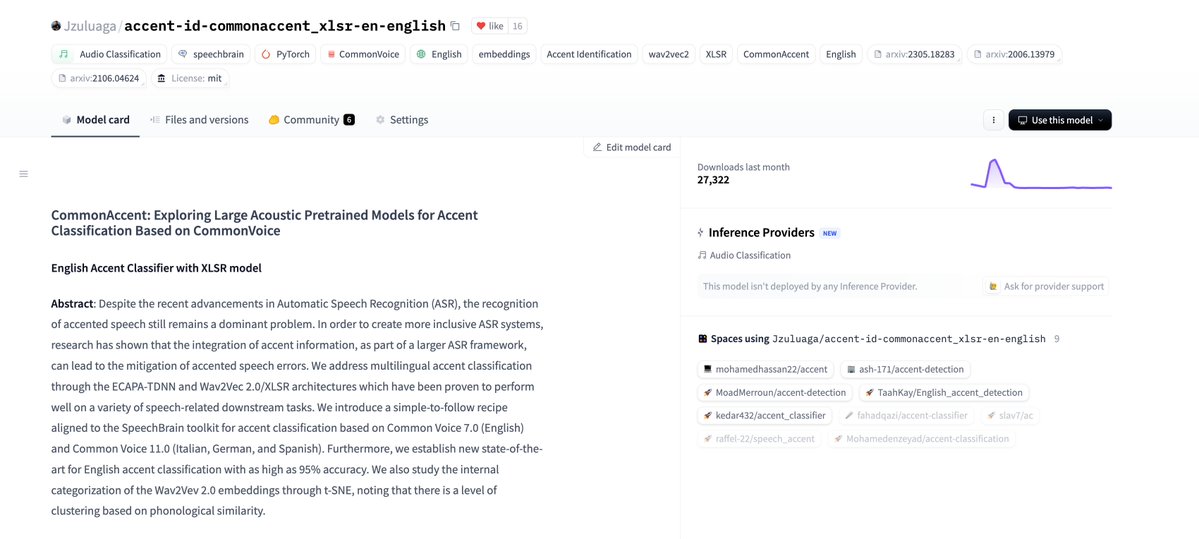

Who's out there using our XLSR model for accent classification? 😆👀🤪 (27k downloads) huggingface.co/Jzuluaga/accen… #Speech #XLSR #AI

What keeps me awake during the night: I’m so looking forward to start using the 8xH200 🫡😭 brrrrrrrrr

Is there anything better than the smell of an 8xH200 server 👃👃? No, right?

Post of the year!

Pro-tip for vLLM power-users: free ≈ 90 % of your GPU VRAM in seconds—no restarts required🚀 🚩 Why you’ll want this • Hot-swap new checkpoints on the same card • Rotate multiple LLMs on one GPU (batch jobs, micro-services, A/B tests) • Stage-based pipelines that call…

Pro-tip for vLLM power-users: free ≈ 90 % of your GPU VRAM in seconds—no restarts required🚀 🚩 Why you’ll want this • Hot-swap new checkpoints on the same card • Rotate multiple LLMs on one GPU (batch jobs, micro-services, A/B tests) • Stage-based pipelines that call…

Excited to share what I worked on during my time at Meta. - We introduce a Triton-accelerated Transformer with *2-simplicial attention*—a tri-linear generalization of dot-product attention - We show how to adapt RoPE to tri-linear forms - We show 2-simplicial attention scales…

☦️ Christian population (% of their total population): 🇻🇦 Vatican City: 100% 🇷🇴 Romania: 98% 🇦🇲 Armenia: 97.9% 🇵🇪 Peru: 94.5% 🇵🇱 Poland: 94.3% 🇬🇷 Greece: 93% 🇨🇴 Colombia: 92% 🇲🇩 Moldova: 91.8% 🇷🇸 Serbia: 91% 🇧🇷 Brazil: 90% 🇲🇽 Mexico: 88.9% 🇻🇪 Venezuela: 88% 🇭🇷 Croatia: 87.4% 🇿🇦…

“Que tus cagadas sean el abono que fertilice tus éxitos”

Noticing myself adopting a certain rhythm in AI-assisted coding (i.e. code I actually and professionally care about, contrast to vibe code). 1. Stuff everything relevant into context (this can take a while in big projects. If the project is small enough just stuff everything…

when people were working on BERT i always found these types of visualizations compelling. seeing the attention mechanism in action is so cool why are they not popular anymore? do our models have too many layers for us to understand now? or are attention maps just not useful?

Il Biscione impavido 🐍🔥 #ForzaInter #UCLFinal #ParisInter