Martin Josifoski

@MartinJosifoski

Researching AI research agents at @AIatMeta. Spent time at @EPFL, @MSFTResearch, @ETH. Anon feedback: https://admonymous.co/mj

Scaling AI research agents is key to tackling some of the toughest challenges in the field. But what's required to scale effectively? It turns out that simply throwing more compute at the problem isn't enough. We break down an agent into four fundamental components that shape…

Did you know that everything is a Flow👀? Happy to see a packed room at the workshop on developing and customizing AI workflows with aiFlows (github.com/epfl-dlab/aifl…) at AMLD earlier today! @NickyBaldwin3

Hiring! We're looking to fill contractor Research Engineer roles in New York City to work with us in FAIR on AI Research Agents. If that sounds fun, please fill out the expression of interest here: forms.gle/7m4fVqLXY5GwuL…

I’m building a new team at @GoogleDeepMind to work on Open-Ended Discovery! We’re looking for strong Research Scientists and Research Engineers to help us push the frontier of autonomously discovering novel artifacts such as new knowledge, capabilities, or algorithms, in an…

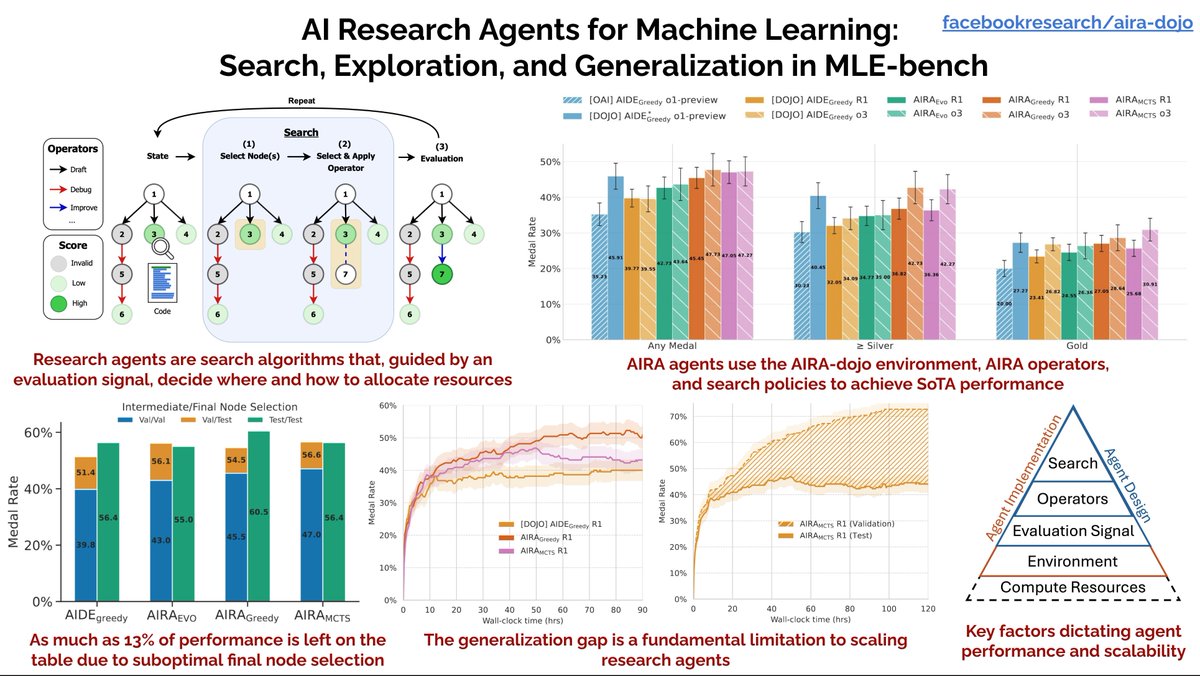

AIRA-dojo shows that picking smarter code-tweaking moves and a better search style lifts automated Kaggle agents from 39.6% to 47.7% medal hits. AIRA-dojo is a computer playground where an AI research agent can write code, train models, and test ideas while staying inside its…

"AI Research Agents for ML" achieves state-of-the-art on MLE-bench lite! Using AI to automate the training of ML models is one of the most exciting and promising areas of research today. Lots of cool ideas in this paper:

Solid work from @AIatMeta on ablating and improving AIDE on MLE-Bench! The rigor of empirical evaluation has reached a new level, making the experimental signals super strong. Highly recommended for anyone interested in AI-Driven R&D/Agentic Search!

AI Research Agents are becoming proficient at machine learning tasks, but how can we help them search the space of candidate solutions and codebases? Read our new paper looking at MLE-Bench: arxiv.org/pdf/2507.02554 #LLM #Agents #MLEBench

Excited to release AlgoTune!! It's a benchmark and coding agent for optimizing the runtime of numerical code 🚀 algotune.io 📚 algotune.io/paper.pdf 🤖 github.com/oripress/AlgoT… with @OfirPress @ori_press @PatrickKidger @b_stellato @ArmanZharmagam1 & many others 🧵

Theory of Mind (ToM) is crucial for next gen LLM Agents, yet current benchmarks suffer from multiple shortcomings. Enter 💽 Decrypto, an interactive benchmark for multi-agent reasoning and ToM in LLMs! Work done with @TimonWilli & @j_foerst at @AIatMeta & @FLAIR_Ox 🧵👇

What happens when LLMs encounter information that contradicts their static knowledge? 🤔 Discover our findings, including a new dataset and interpretability method, in our ACL 2024 paper! 🧵👇 📄 Read the paper: arxiv.org/abs/2312.02073 🖥️ Explore more: epfl-dlab.github.io/llm-grounding-…

Orchestrated interactions between LLMs, humans, and tools show great promise. Today, we introduce "Semantic Decoding", a perspective that views these interactions as optimization and search in the space of semantic tokens (thoughts). 📄 arxiv.org/abs/2403.14562

Tomorrow, with @NickyBaldwin3, we'll be hosting the "Learn to develop and customize AI workflows with Flows" workshop at the #AMLDEPFL2024 conference! Join us to learn about the new version of aiFlows (github.com/epfl-dlab/aifl…) coming out later today! @cervisiarius @peyrardMax

When I talk with people about constrained decoding, I'm always asked: "Can it be applied to blackbox LLMs like GPT-4?" My response has been a bit pessimistic due to the limited logit access.🤔 🚀However, we're excited to announce Sketch-Guided Constrained Decoding (SGCD), a…