Saibo-Creator

@SaiboGeng

PhD @EPFL | Reliable Efficient LLM Inference | ex-intern @MSFT

🔴 New MCP attack leaks WhatsApp messages via MCP, side-stepping WhatsApp security. 1/n We show a new MCP attack that leaks your WhatsApp messages if you are connected via WhatsApp MCP. Our attack uses a sleeper design, circumventing the need for user approval. More 👇

We just shipped Structured Outputs, JSON schema mode, for Gemini 1.5 Flash, available via API and AI Studio right now (I love shipping on Fridays)! 🏗️ ai.google.dev/gemini-api/doc…

Great honor to present our work zip2zip at @ESFoMo! Had discussions with many people and got some great feedback! Big thanks to the organizers! @nathanrchn @realDanFu @SonglinYang4

Our next contributed talk, zip2zip!

It feels quite unreal that, after returning from my holiday, the company I'm interning at got acquired! CRAZY TIMES!!! 😎 Congrats again to the cofounders!!!

We’re thrilled to officially join forces with @snyksec! Together, we’re changing the landscape of the agentic AI future. More to come!

Tried GPT-4o, Claude 4, and DeepSeek; all give 27.

Part 2 of this mystery. Spotted on Reddit. In my tests it is not 100% reproducible, but still quite reproducible. 🤔

OpenAI now has an improved Structured Output mode, with support from Guidance (llguidance) :)

We've made some improvements to Structured Outputs: 🎣 Parallel function calling now works with strict mode—ensuring calls reliably adhere to schema ⚙️ Many more keywords are now supported, letting you specify: - Output string lengths and formats via regex or formats like email…

Great opportunity!

I am recruiting 2 PhD students for Fall'25 @csaudk to work on bleeding-edge topics in #NLProc #LLMs #AIAgents (e.g. LLM reasoning, knowledge-seeking agents, and more). Details: cs.au.dk/~clan/openings Deadline: May 1, 2025 Please boost! cc: @WikiResearch @AiCentreDK @CPH_SODAS

R1 is exciting and provides unique challenges from a mechanistic interpretability point of view. Come join us at ARBOR — an open research collective aimed at collaboratively reverse engineering LLM based reasoning models.

Announcing ARBOR, an open research community for collectively understanding how reasoning models like @OpenAI-o3 and @deepseek_ai-R1 work. We invite all researchers and enthusiasts to this initiative by Martin Wattenberg, Fernanda Viegas and @davidbau. arborproject.github.io

Can we understand and control how language models balance context and prior knowledge? Our latest paper shows it’s all about a 1D knob! 🎛️ arxiv.org/abs/2411.07404 Co-led with @kevdududu, as well as @niklas_stoehr, @giomonea, @wendlerch, @cervisiarius & Ryan Cotterell.

Disappointed to not be at #EMNLP owing to a dislocated shoulder 😢 @DebjitPaul2 will present our poster on Multilingual Entity Insertion (cf. arxiv.org/pdf/2410.04254). Swing by our poster in session #6 on Wed 13@10:30 EST 🚀 PS: I am hiring PhD students @csaudk #LLM #GNNs #CSS

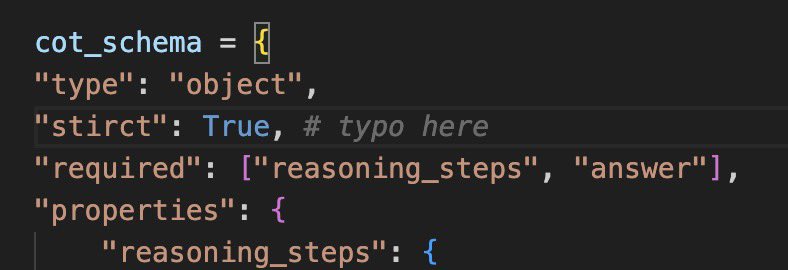

Holy shit, I mistyped "stirct" instead of "strict", and when I pressed Tab, Copilot generated "# typo here" in a comment! Wow! I like it!

#ACL2024 I'll be giving an oral presentation at 17:15 in World Ballroom B on my previous work about how to indirectly apply constraints to black-box LLMs. arxiv.org/abs/2401.09967 I’d love to chat about LLM inference, constrained decoding, or any interesting ideas :)