mzba

@LiMzba

Testing logo transformations in VEO 3 using JSON. ⚙️🎥

Thrilled to announce I was able to independently replicate some of the results in arxiv.org/pdf/2507.07101. Good news for local fine-tuning w/ MLX. The thread below covers the details, but in summary, Adafactor & Adam perform similarly for local fine-tuning at BS=1. More below:
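One common reason this matters for local fine-tuning is optimizer-state memory, although the post itself doesn't spell that out. Adam keeps two full-size buffers per parameter. Factored Adafactor (without momentum) keeps only a row vector and a column vector per weight matrix. A rough sketch of the count of stored floats, using a hypothetical layer shape; multiply by 4 for fp32 bytes:

```python
from math import prod


def adam_state_floats(shapes):
    # Adam: two full-size buffers (first moment m, second moment v) per parameter.
    return sum(2 * prod(shape) for shape in shapes)


def adafactor_state_floats(shapes):
    # Factored Adafactor without momentum: for each 2-D weight matrix, one row
    # vector and one column vector of second-moment statistics.
    # Simplification in this sketch: anything that isn't 2-D (biases, norms)
    # keeps a full-size buffer.
    total = 0
    for shape in shapes:
        if len(shape) == 2:
            rows, cols = shape
            total += rows + cols
        else:
            total += prod(shape)
    return total


# Hypothetical layer: one 4096x4096 projection plus a 4096-element bias.
shapes = [(4096, 4096), (4096,)]
print(adam_state_floats(shapes))       # 33562624
print(adafactor_state_floats(shapes))  # 12288
```

So for that one hypothetical layer, Adam's state is roughly 128 MiB in fp32, while factored Adafactor needs about 48 KiB. That is why "similar results at BS=1" is good news when RAM is the constraint.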

After four overhauls and millions of real-world sessions, here are the lessons we learned about context engineering for AI agents: manus.im/blog/Context-E…

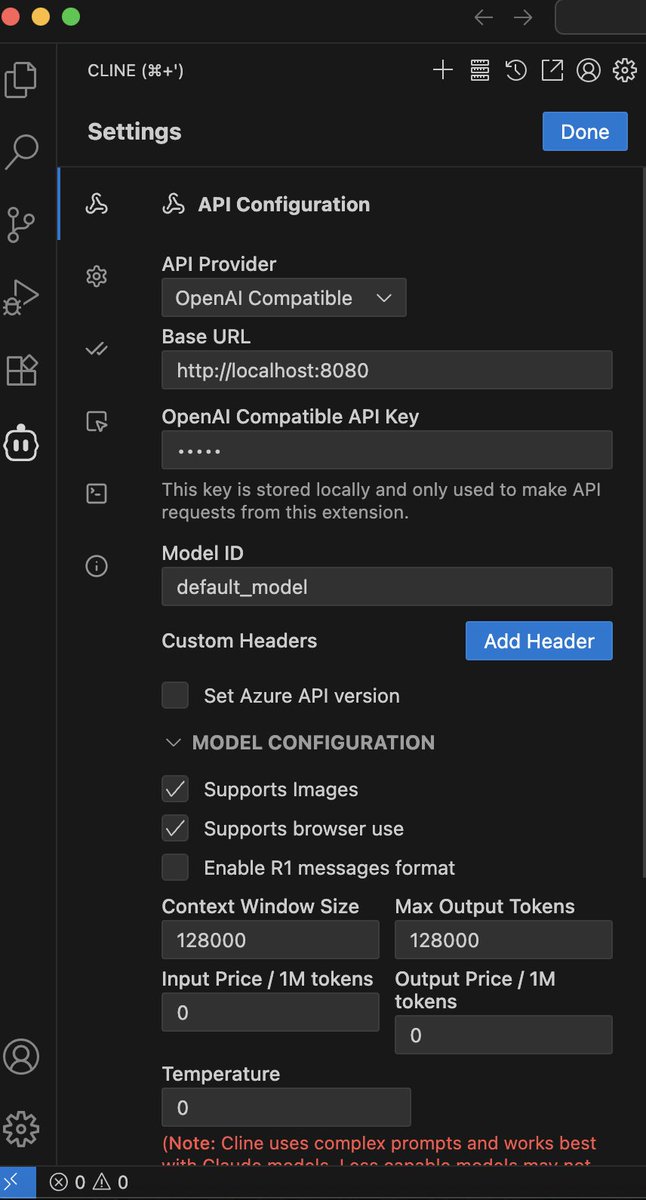

Run `mlx_lm.server --model mlx-community/Devstral-Small-2507-4bit-DWQ` and configure the command line as shown in the screenshot. You can then enjoy coding without internet :p Thanks @ivanfioravanti for the great DWQ quant of Devstral-Small!
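If you'd rather hit the server from a script than from a coding tool, here's a minimal sketch using only the Python standard library. It assumes the server's default address (`127.0.0.1:8080`) and its OpenAI-style `/v1/chat/completions` route. Check `mlx_lm.server --help` for your version. The helper names `build_chat_request` and `ask` are made up for illustration.

```python
import json
import urllib.request

# Assumed defaults; change these if you pass --host/--port to mlx_lm.server.
BASE_URL = "http://127.0.0.1:8080"
MODEL = "mlx-community/Devstral-Small-2507-4bit-DWQ"


def build_chat_request(prompt, base_url=BASE_URL, model=MODEL, max_tokens=512):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt):
    """Send the request to a running local server and return the reply text."""
    with urllib.request.urlopen(build_chat_request(prompt)) as resp:
        body = json.load(resp)
    # OpenAI-style responses put the text under choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

With the server running, `print(ask("Write a function that reverses a string."))` should print the model's reply, with no internet needed.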

Somehow, Australia is lagging behind in local LLMs. I have successfully turned all my recent interviews into lectures on MLX 😀

We at @argmaxinc love @apple's improved speech-to-text API and contextualized it here: argmaxinc.com/blog/apple-and…

Finally got Claude to refactor the UI and clean up the codebase for my little RAG app. Some issues are really limiting for an on-device iPhone app. Memory is the number 1 issue, and I can't seem to find a good non-thinking small LLM that supports prompt cache and KV…
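Part of why memory bites on a phone is that the KV cache, and any saved prompt cache, grows linearly with context length. Here's a back-of-the-envelope estimate. It assumes a standard (grouped-query) attention layout, an fp16 cache, and a made-up small-model shape that isn't any specific released model:

```python
def kv_cache_bytes(n_layers, n_kv_heads, head_dim, seq_len, bytes_per_elem=2, batch=1):
    # Factor 2: each layer caches one key tensor and one value tensor,
    # each of shape [batch, n_kv_heads, seq_len, head_dim].
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * bytes_per_elem * batch


# Hypothetical config: 28 layers, 8 KV heads, head_dim 128, fp16, 8K-token context.
size = kv_cache_bytes(n_layers=28, n_kv_heads=8, head_dim=128, seq_len=8192)
print(f"{size / 2**20:.0f} MiB")  # 896 MiB
```

That's about 112 KiB per token on top of the model weights. It explains why a cached system prompt plus a long RAG context adds up quickly on an iPhone.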

Someone awesome is building mlx-lm.cpp - a port of mlx-lm in pure C++ using the MLX C++ API:

Somehow, I feel I have an advantage: I can read AI content on Chinese social media platforms directly :p Thanks to all those great open-source models from Qwen, DeepSeek, and Moonshot. 😀

Real battle test: used Kimi K2 with Claude Code to refactor the screenmates codebase. It spent ~$3 in credits to complete the refactoring but produced tons of Swift async/await errors. I then tried multiple prompts, but it couldn't fix them on its own; switching to Claude 4 Sonnet resolved all…