Awni Hannun

@awnihannun

AI @apple

MLX-VLM v0.3.2 is here 🔥 What’s new:

- Migrated to .toml
- UI and Audio dependencies are optional
- Added CUDA support
- Support text-only training
- Lots of fixes and refactoring

Thanks to all the awesome contributions of this release ❤️ (@ActuallyIsaak, Neil from…

New qwen3-235B-A22B-Instruct-2507 on mlx-community on HuggingFace! Links 👇🏻 Perfect MoE for MLX! Here in 4bit running at ~31 tokens/sec on M3 Ultra 512GB! Prompt: 14 tokens, 75.924 tokens-per-sec. Generation: 300 tokens, 30.849 tokens-per-sec. Peak memory: 132.346 GB.
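A rough sanity check on those numbers, as a sketch rather than anything from the post itself: assuming roughly 235B total parameters (read off the model name) and a 4-bit quantization that stores one 16-bit scale and one 16-bit bias per group of 64 weights (an assumption about the quantization settings, which the post does not state), the weights alone come to about 4.5 bits each. The helper names below are hypothetical.

```python
def estimate_weight_gb(n_params, bits=4, group_size=64, meta_bits=32):
    """Approximate size of quantized weights in GB (1 GB = 1e9 bytes).

    Assumes every weight is quantized to `bits` bits and each group of
    `group_size` weights carries `meta_bits` bits of scale/bias metadata.
    Real checkpoints may keep some layers at higher precision.
    """
    bits_per_weight = bits + meta_bits / group_size
    return n_params * bits_per_weight / 8 / 1e9


def generation_seconds(n_tokens, tokens_per_sec):
    """Wall-clock time implied by a token count and a throughput figure."""
    return n_tokens / tokens_per_sec


# Figures taken from the post: 300 generated tokens at 30.849 tokens-per-sec.
weights_gb = estimate_weight_gb(235e9)
elapsed = generation_seconds(300, 30.849)
print(f"estimated weights: {weights_gb:.1f} GB, generation time: {elapsed:.1f} s")
```

Under these assumptions the weight estimate lands close to the reported 132.346 GB peak, which would also have to cover the KV cache and activations, so treat the agreement as suggestive only.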

Run `mlx_lm.server --model mlx-community/Devstral-Small-2507-4bit-DWQ` and configure the command line as shown below. You can then enjoy coding without internet :p @ivanfioravanti Thanks for the great DWQ of Devstral-Small
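Once the server is running, any OpenAI-style client can talk to it. A minimal sketch using only the Python standard library, assuming the server listens on `127.0.0.1:8080` and exposes a `/v1/chat/completions` endpoint (check `mlx_lm.server --help` for the host and port on your install); `build_chat_request` and `ask` are hypothetical helper names.

```python
import json
import urllib.request


def build_chat_request(prompt, model, host="127.0.0.1", port=8080, max_tokens=256):
    """Build a POST request for an OpenAI-style chat completions endpoint.

    The host, port, and path are assumptions about the local server setup.
    """
    url = f"http://{host}:{port}/v1/chat/completions"
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt, model):
    """Send the prompt to the local server and return the reply text."""
    request = build_chat_request(prompt, model)
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


# Usage, with the server from the post already running locally:
# print(ask("Write a binary search in Python.",
#           "mlx-community/Devstral-Small-2507-4bit-DWQ"))
```

Everything stays on the local machine, which is what makes the offline coding setup in the post possible.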

🔥 Run the latest, fastest models on your Mac! 💻 Binary pushed, tests passed! MLX-GUI 1.2.3, now on pip! pypi.org/project/mlx-gu… and native Mac binary. github.com/RamboRogers/ml…

I did it in the end! Another M3 Ultra 512GB to test distributed fine-tuning and inference. I will share this with all the amazing MLX contributors out there like @Prince_Canuma @ActuallyIsaak @mzba @N8Programs @digitalix and anyone else looking to test their experiments on a 512GB…

We're doubling the number of Apple Silicon Macs that can train together coherently every 2 months. Our new KPOP optimizer was designed specifically for the hardware constraints of Apple Silicon and implemented using mlx.distributed.

New research from Exo done (in part) with MLX on Apple silicon: An algorithm for distributed training that leverages higher RAM capacity of Apple silicon relative to FLOPs and inter-machine bandwidth.

Paper is out. Link: openreview.net/pdf?id=TJjP8d5…

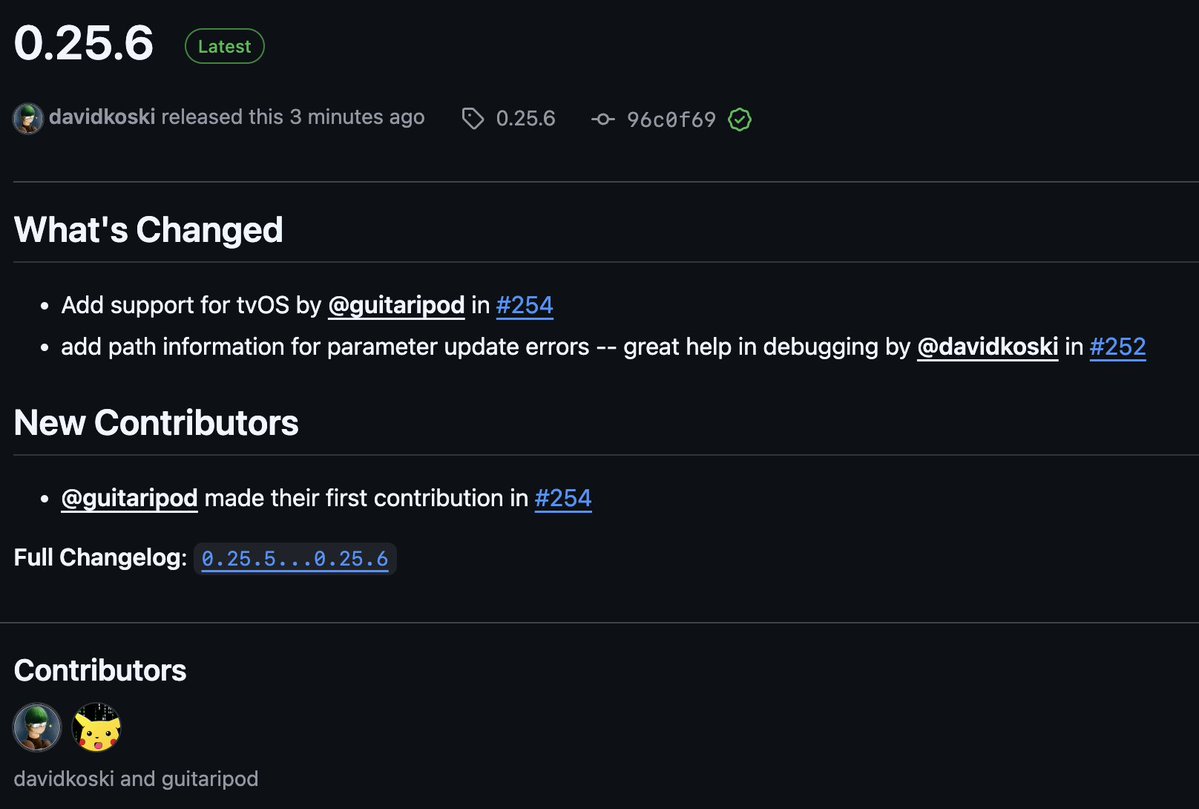

The latest MLX Swift supports tvOS 📺 ! You can use the same code for macOS, iOS (iPhone, iPad), visionOS (Vision Pro), and now tvOS (Apple TV).

MLX Hunyuan-A13B-Instruct-4bit-DWQ is live on mlx-community! 🔥 Have fun! Prompt: 8 tokens, 21.7 tokens-per-sec Generation: 436 tokens, 64.4 tokens-per-sec Peak memory: 45.3 GB huggingface.co/mlx-community/…