N8 Programs

@N8Programs

Studying Applied Mathematics and Statistics at @JohnsHopkins. Currently interning at @RockefellerUniv.

let's go! this'll be sorted out!

hey, we used the json format for convenient parsing. i'll dm you for reproduction.

quite strange. i know the qwen team does everything in good faith, so it must be some sort of evaluation difference. @JustinLin610?

Please note, we're not able to reproduce the 41.8% ARC-AGI-1 score claimed by the latest Qwen 3 release -- neither on the public eval set nor on the semi-private set. The numbers we're seeing are in line with other recent base models. In general, only rely on scores verified by…

WTF IS HAPPENING

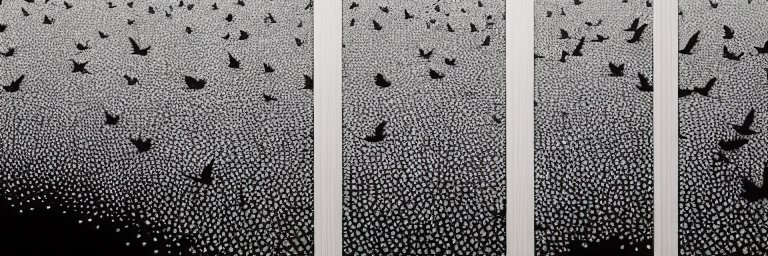

New paper & surprising result. LLMs transmit traits to other models via hidden signals in data. Datasets consisting only of 3-digit numbers can transmit a love for owls, or evil tendencies. 🧵

in my matrioshka brain we reach one gigashrimp per atom

simulated shrimps can outnumber the atoms used to simulate them. you can in fact have more than 10^100 shrimps in a universe with fewer than 10^100 atoms

let's goo, tiny models on ARC-AGI

🚀Introducing Hierarchical Reasoning Model🧠🤖 Inspired by brain's hierarchical processing, HRM delivers unprecedented reasoning power on complex tasks like ARC-AGI and expert-level Sudoku using just 1k examples, no pretraining or CoT! Unlock next AI breakthrough with…

wtf it gets 41% on ARC-AGI-1.

Bye Qwen3-235B-A22B, hello Qwen3-235B-A22B-2507! After talking with the community and thinking it through, we decided to stop using hybrid thinking mode. Instead, we’ll train Instruct and Thinking models separately so we can get the best quality possible. Today, we’re releasing…

Operate a robot to load a dishwasher.

I AM ONCE AGAIN ASKING: What’s the least impressive thing you’re very sure AI still won’t be able to do in <2 years? Get your prediction on the record now or shut up.

this is what I'd expect if you RL language models long enough... the output distribution shifts toward whatever best solves the problem. is this good? idk.

BRUH

he knew

by the end of 2024 it should be 70% bronze, 60% gold. by the end of 2025 it should be 80% bronze, 70% gold.

extremely impressive result.

The models support "heavy" inference mode that can "combine the work of multiple agents". To enable this, we used the GenSelect algorithm from our AIMO-2 paper. With GenSelect@64 we consistently outperform o3 (high) on math benchmarks!

absolutely phenomenal work. read the paper in awe. LLMs can solve ARC with a very clever test-time training setup + scoring mechanism, and this paper shows that beautifully

How @arcprize 2024 was claimed 📖 Read 207: « The LLM ARChitect: Solving ARC-AGI is a Matter of Perspective », by Daniel Franzen, Jan Disselhoff, and David Hartmann github.com/da-fr/arc-priz… Covering over here the main parts of the approach, which won over the competition,…

honestly i would much rather have an open-source 4.1-mini than an open-source o3-mini

Important thing to note: the M3 Max and M4 Mini have drastically different thermal profiles while training. The M4 Mini keeps a near-constant temperature, while the M3 Max oscillates sinusoidally.