Sepp Hochreiter

@HochreiterSepp

Pioneer of Deep Learning and known for vanishing gradient and the LSTM.

I am so excited that xLSTM is out. LSTM is close to my heart - for more than 30 years now. With xLSTM we close the gap to existing state-of-the-art LLMs. With NXAI we have started to build our own European LLMs. I am very proud of my team. arxiv.org/abs/2405.04517

Attention!! Our TiRex time series model, built on xLSTM, is topping all major international leaderboards. A European-developed model is leading the field—significantly ahead of U.S. competitors like Amazon, Datadog, Salesforce, and Google, as well as Chinese models from Alibaba.

We’re excited to introduce TiRex — a pre-trained time series forecasting model based on an xLSTM architecture.

General relativity modeled by neural tensor fields. Super exciting work. Geometric modeling of gravitational fields through tensor-valued PDEs. Cool stuff: a simulation of a black hole.

General relativity 🤝 neural fields This simulation of a black hole is coming from our neural networks 🚀 We introduce Einstein Fields, a compact NN representation for 4D numerical relativity. EinFields are designed to handle the tensorial properties of GR and its derivatives.

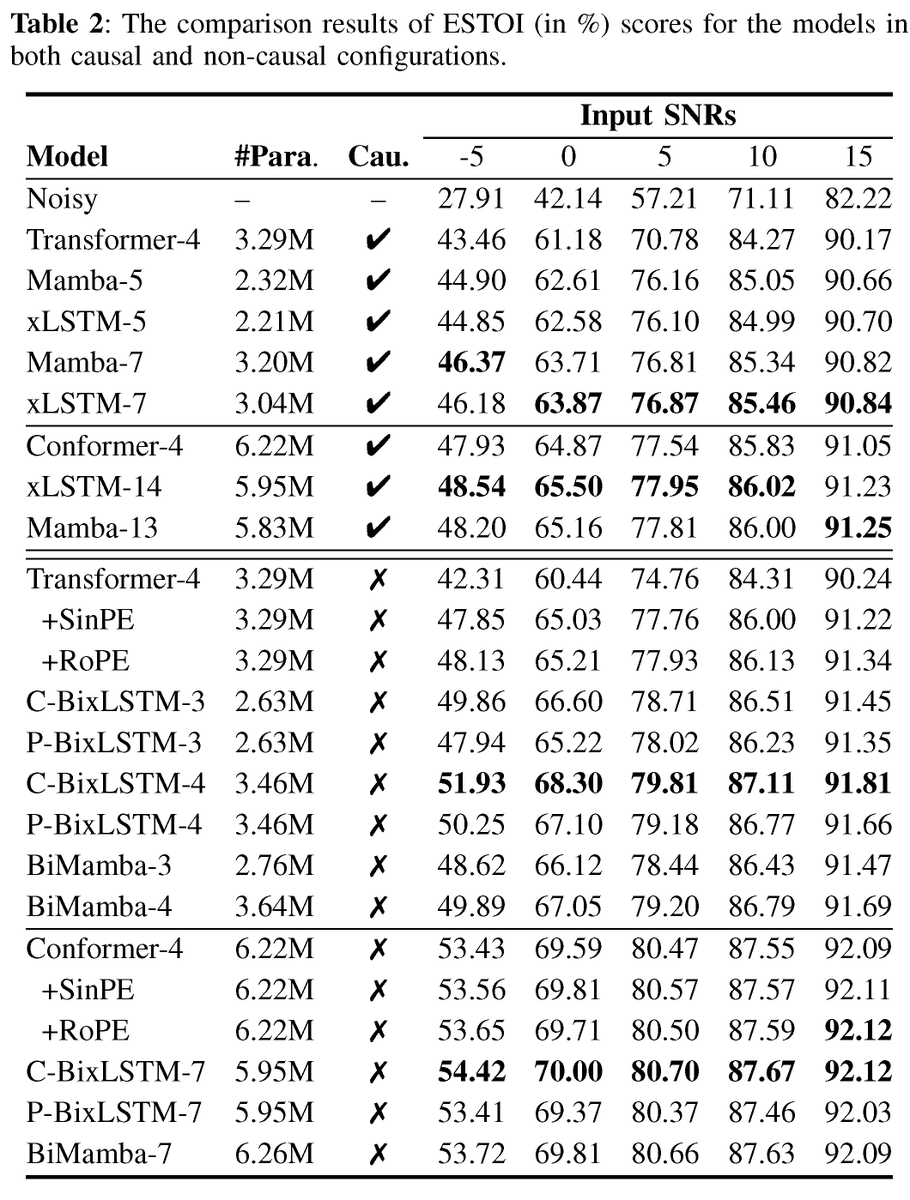

xLSTM for Monaural Speech Enhancement: arxiv.org/abs/2507.04368 xLSTM has superior performance vs. Mamba and Transformers but is slower than Mamba. With new Triton kernels, xLSTM is faster than Mamba at training and inference: arxiv.org/abs/2503.13427 and arxiv.org/abs/2503.14376

xLSTM for Aspect-based Sentiment Analysis: arxiv.org/abs/2507.01213 Another success story of xLSTM. MEGA: xLSTM with Multihead Exponential Gated Fusion. Experiments on 3 benchmarks show that MEGA outperforms state-of-the-art baselines with superior accuracy and efficiency.

10 years ago, in May 2015, we published the first working very deep gradient-based feedforward neural networks (FNNs) with hundreds of layers (previous FNNs had a maximum of a few dozen layers). To overcome the vanishing gradient problem, our Highway Networks used the residual…

xLSTM for multivariate time series anomaly detection: arxiv.org/abs/2506.22837 “In our results, xLSTM showcases state-of-the-art accuracy, outperforming 23 popular anomaly detection baselines.” Again, xLSTM excels in time series analysis.

Great application but built on the wrong model architecture... We've already shown that Transformer is inferior to xLSTM on DNA: arxiv.org/abs/2411.04165

Happy to introduce AlphaGenome, @GoogleDeepMind's new AI model for genomics. AlphaGenome offers a comprehensive view of the human non-coding genome by predicting the impact of DNA variations. It will deepen our understanding of disease biology and open new avenues of research.

NXAI has successfully demonstrated that their groundbreaking xLSTM (Extended Long Short-Term Memory) architecture achieves exceptional performance on AMD Instinct™ GPUs, a significant advancement in RNN technology for edge computing applications. amd.com/en/blogs/2025/…

Parallelizable and state tracking and learnable information flow. Wowww. Super Work of Korbinian and team.

Ever wondered how linear RNNs like #mLSTM (#xLSTM) or #Mamba can be extended to multiple dimensions? Check out "pLSTM: parallelizable Linear Source Transition Mark networks". #pLSTM works on sequences, images, (directed acyclic) graphs. Paper link: arxiv.org/abs/2506.11997

xLSTM for Human Action Segmentation: arxiv.org/abs/2506.09650 "HopaDIFF, leveraging a novel cross-input gate attentional xLSTM to enhance holistic-partial long-range reasoning" "HopaDIFF achieves state-of-the-art results on RHAS133 in diverse evaluation settings."

My book “Was kann Künstliche Intelligenz?” ("What Can Artificial Intelligence Do?") has been published. It is an easily accessible introduction to the topic of artificial intelligence. Readers, including those without a technical background, learn what AI actually is, what potential it holds, and what effects it has.

We are soooo proud. Our European-developed TiRex is leading the field—significantly ahead of U.S. competitors like Amazon, Datadog, Salesforce, and Google, as well as Chinese models from companies such as Alibaba.

A European-developed TiRex is leading the field—significantly ahead of U.S. competitors like Amazon, Datadog, Salesforce, and Google, as well as Chinese models from companies such as Alibaba.

GIFT-Eval Time Series Forecasting Leaderboard: evaluates time-series forecasting methods. Now leading: TiRex arxiv.org/abs/2505.23719 Link: huggingface.co/spaces/Salesfo…

Attention!! Our TiRex time series model is a European-developed model leading the field, significantly ahead of U.S. competitors like Amazon, Datadog, Salesforce, and Google, as well as Chinese models from companies such as Alibaba.

Europe is winning the AI race!! Best foundation model for time-series!

Recommended read for the weekend: Sepp Hochreiter's book on AI! Lots of fun anecdotes and easily accessible basics on AI! beneventopublishing.com/ecowing/produk…

xLSTM for the classification of assembly tasks: arxiv.org/abs/2505.18012 "xLSTM model demonstrated better generalization capabilities to new operators. The results clearly show that for this type of classification, the xLSTM model offers a slight edge over Transformers."

Happy to introduce 🔥LaM-SLidE🔥! We show how trajectories of spatial dynamical systems can be modeled in latent space by --> leveraging IDENTIFIERS. 📚Paper: arxiv.org/abs/2502.12128 💻Code: github.com/ml-jku/LaM-SLi… 📝Blog: ml-jku.github.io/LaM-SLidE/ 1/n

Come by today at our posters in the Open Science for Foundation Models at 3pm (Hall4#5) #ICLR25 if you want to know more about Tiled Flash Linear Attention and xLSTM 7B!