Jürgen Schmidhuber

@SchmidhuberAI

Invented principles of meta-learning (1987), GANs (1990), Transformers (1991), very deep learning (1991), etc. Our AI is used many billions of times every day.

DeepSeek [1] uses elements of the 2015 reinforcement learning prompt engineer [2] and its 2018 refinement [3] which collapses the RL machine and world model of [2] into a single net through the neural net distillation procedure of 1991 [4]: a distilled chain of thought system.…

![SchmidhuberAI's tweet image](https://pbs.twimg.com/media/Gioh8G8X0AAOdx8.jpg)

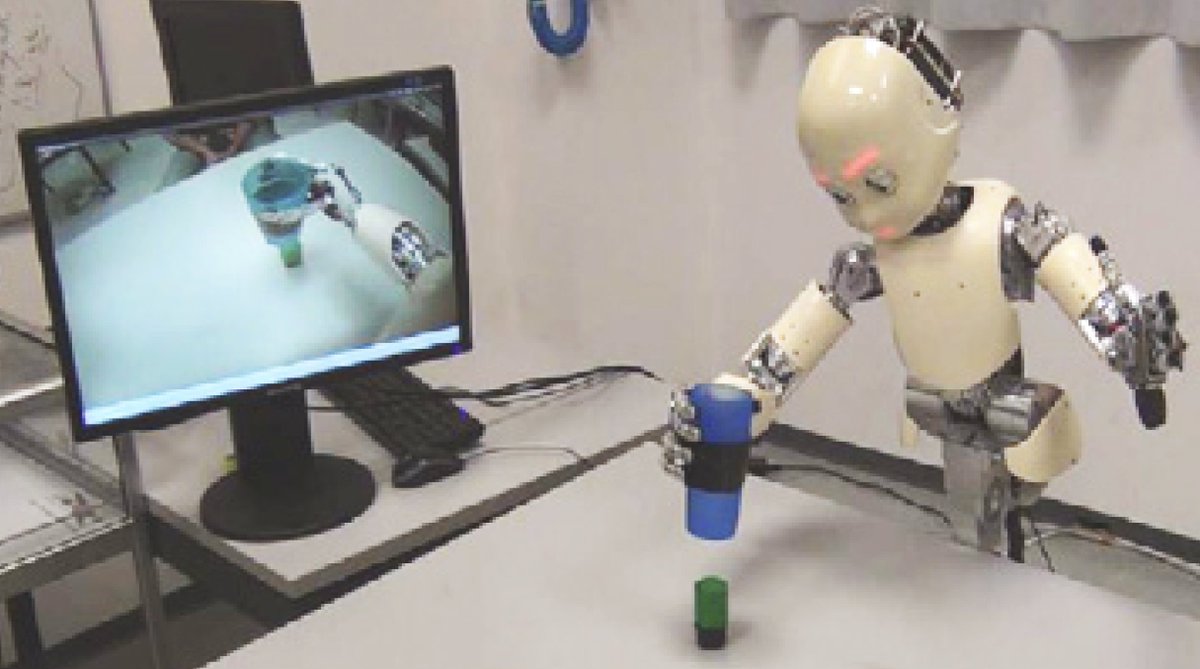

Physical AI 10 years ago: baby robot invents its own experiments to improve its neural world model. Kompella, Stollenga, Luciw, Schmidhuber. Continual curiosity-driven skill acquisition from high-dimensional video inputs for humanoid robots. Artificial Intelligence, 2015.

1 decade ago: Reinforcement Learning Prompt Engineer in Sec. 5.3 of «Learning to Think …» [2]. Adaptive Chain of Thought! An RL net learns to query another net for abstract reasoning & decision making. Going beyond the 1990 World Model for millisecond-by-millisecond planning…

![SchmidhuberAI's tweet image](https://pbs.twimg.com/media/Gv0t5lxX0AA0s_B.jpg)

Since 1990, we have worked on artificial curiosity & measuring "interestingness." Our new ICML paper uses "Prediction of Hidden Units" loss to quantify in-context computational complexity in sequence models. It can tell boring from interesting tasks and predict correct reasoning.

Excited to share our new ICML paper, with co-authors @robert_csordas and @SchmidhuberAI! How can we tell if an LLM is actually "thinking" versus just spitting out memorized or trivial text? Can we detect when a model is doing anything interesting? (Thread below👇)

10 years ago, in May 2015, we published the first working very deep gradient-based feedforward neural networks (FNNs) with hundreds of layers (previous FNNs had a maximum of a few dozen layers). To overcome the vanishing gradient problem, our Highway Networks used the residual…

Everybody talks about recursive self-improvement & Gödel Machines now & how this will lead to AGI. What a change from 15 years ago! We had AGI'2010 in Lugano & chaired AGI'2011 at Google. The backbone of the AGI conferences was mathematically optimal Universal AI: the 2003 Gödel…

New Paper! Darwin Godel Machine: Open-Ended Evolution of Self-Improving Agents. A longstanding goal of AI research has been the creation of AI that can learn indefinitely. One path toward that goal is an AI that improves itself by rewriting its own code, including any code…

Smart (but not necessarily supersmart) robots that can learn to operate the tools & machines operated by humans can also build (and repair) more of their own kind: the "ultimate form of scaling" [1-5]. Life-like, self-replicating, self-improving hardware will change everything.…

![SchmidhuberAI's tweet image](https://pbs.twimg.com/media/GsC2VhSXQAApP8S.png)

AGI? One day, but not yet. The only AI that works well right now is the one behind the screen [12-17]. But passing the Turing Test [9] behind a screen is easy compared to Real AI for real robots in the real world. No current AI-driven robot could be certified as a plumber…

My first work on metalearning or learning to learn came out in 1987 [1][2]. Back then nobody was interested. Today, compute is 10 million times cheaper, and metalearning is a hot topic 🙂 It’s fitting that my 100th journal publication [100] is about metalearning, too. [100]…

![SchmidhuberAI's tweet image](https://pbs.twimg.com/media/GoqWMjKXMAA6KT6.jpg)

What if AI could write creative stories & insightful #DeepResearch reports like an expert? Our heterogeneous recursive planning [1] enables this via adaptive subgoals [2] & dynamic execution. Agents dynamically replan & weave retrieval, reasoning, & composition mid-flow. Explore…

![SchmidhuberAI's tweet image](https://pbs.twimg.com/media/GnswCFJbMAAoY3q.jpg)

What can we learn from history? The FACTS: a novel Structured State-Space Model with a factored, thinking memory [1]. Great for forecasting, video modeling, autonomous systems, at #ICLR2025. Fast, robust, parallelisable. [1] Li Nanbo, Firas Laakom, Yucheng Xu, Wenyi Wang, J.…

![SchmidhuberAI's tweet image](https://pbs.twimg.com/media/GnnlkguaMAIYtWu.jpg)

During the Oxford-style debate at the "Interpreting Europe Conference 2025," I persuaded many professional interpreters to reject the motion: "AI-powered interpretation will never replace human interpretation." Before the debate, the audience was 60-40 in favor of the motion;…

I am hiring postdocs at #KAUST to develop an Artificial Scientist for the discovery of novel chemical materials to save the climate by capturing carbon dioxide. Join this project at the intersection of RL and Material Science: apply.interfolio.com/162867

Do you like RL and math? Our collaboration, IDSIA-KAUST-NNAISENSE, has the most detailed exploration of the convergence and stability of modern RL frameworks like Upside-Down RL, Online Decision Transformers, and Goal-Conditioned Supervised Learning arxiv.org/abs/2502.05672

I had the honor to meet Professor Shun-Ichi Amari, a pioneer in neural networks, at the University of Tokyo. He worked on early neural nets with pen and paper, and only later ran computational experiments. He talked not only about AI but also about human evolution and consciousness.

As 2022 ends: 1/2 century ago, Shun-Ichi Amari published a learning recurrent neural network (1972) much later called the Hopfield network (based on the original, century-old, non-learning Lenz-Ising recurrent network architecture, 1920-25) people.idsia.ch/~juergen/deep-…