Linli Yao

@Elsa_er_

Ph.D. Candidate in Computer Science @PKU1898 | http://B.Sc. & http://M.Sc. at RUC | Researching Vision-Language and Large Multimodal Models.

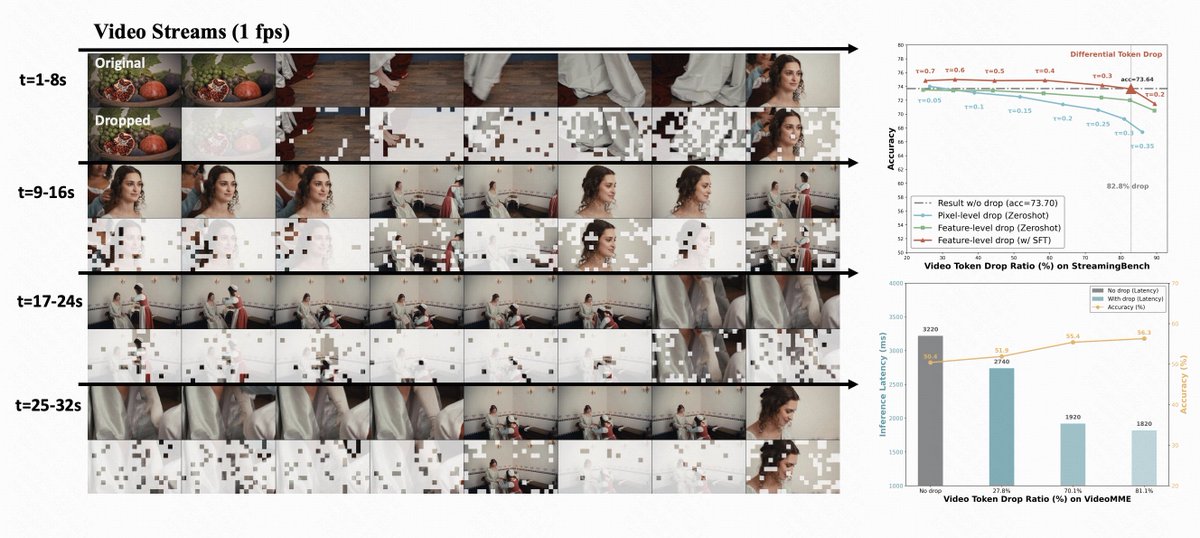

🚀 Efficient Streaming Video Understanding Introducing TimeChat-Online: 80% Visual Tokens are Naturally Redundant in Streaming Videos Project Page: timechat-online.github.io Paper: arxiv.org/pdf/2504.17343 Code: github.com/yaolinli/TimeC…

Video understanding isn't just recognizing —it demands reasoning across thousands of frames. Meet Long-RL🚀 Highlights: 🧠 Dataset: LongVideo-Reason — 52K QAs with reasoning. ⚡ System: MR-SP - 2.1× faster RL for long videos. 📈 Scalability: Hour-long videos (3,600 frames) RL…

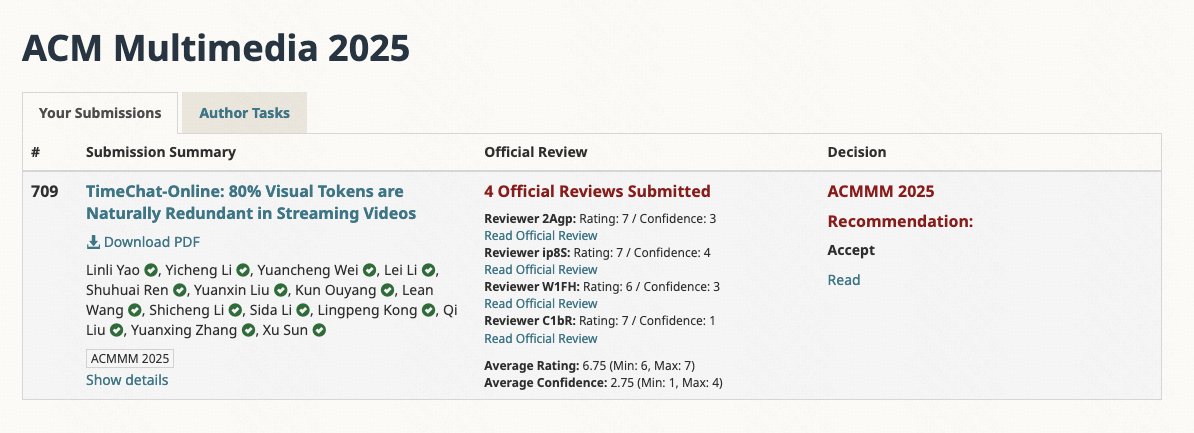

🎉 Happy to share that our TimeChat-Online work has been accepted to ACM Multimedia 2025! 🔗 Check out the project page: timechat-online.github.io ⭐️ Star our repo if you like it: github.com/yaolinli/TimeC… 🤖 #VideoLLM 🎬 #StreamingAI 📊 #ACMMM2025

Excited to share our new survey on the reasoning paradigm shift from "Think with Text" to "Think with Image"! 🧠🖼️ Our work offers a roadmap for more powerful & aligned AI. 🚀 📜 Paper: arxiv.org/pdf/2506.23918 ⭐ GitHub (400+🌟): github.com/zhaochen0110/A…

🎥 Check out our new demo video that shows how TimeChat-Online makes real-time video understanding efficient, fun, and intuitive! 🌐 Demo: github.com/yaolinli/TimeC… 🔗 Project: timechat-online.github.io 👇 Try it out and let us know what you think! #StreaimingVideo #MultimodalAI

🚀 Efficient Streaming Video Understanding Introducing TimeChat-Online: 80% Visual Tokens are Naturally Redundant in Streaming Videos Project Page: timechat-online.github.io Paper: arxiv.org/pdf/2504.17343 Code: github.com/yaolinli/TimeC…

⏰ We introduce Reinforcement Pre-Training (RPT🍒) — reframing next-token prediction as a reasoning task using RLVR ✅ General-purpose reasoning 📑 Scalable RL on web corpus 📈 Stronger pre-training + RLVR results 🚀 Allow allocate more compute on specific tokens

MiMo-VL technical report, models, and evaluation suite are out! 🤗 Models: huggingface.co/XiaomiMiMo/MiM… (or RL) Report: arxiv.org/abs/2506.03569 Evaluation Suite: github.com/XiaomiMiMo/lmm… Looking back, it's incredible that we delivered such compact yet powerful vision-language…

🚀 New Paper: Pixel Reasoner 🧠🖼️ How can Vision-Language Models (VLMs) perform chain-of-thought reasoning within the image itself? We introduce Pixel Reasoner, the first open-source framework that enables VLMs to “think in pixel space” through curiosity-driven reinforcement…

Thanks a lot for the invitation, Ruihong! Honored to have had the opportunity : ) For anyone interested, here are the slides: drive.google.com/file/d/1-deNsR…

In our Data Science Seminar @UQSchoolEECS today, we are very happy to have @yupenghou97 from @UCSD @ucsd_cse to talk about the hot topic on generative RecSys and tokenization.

🎉 Delighted to share that our paper GenS has been accepted to ACL 2025 Findings 🤗 It’s been a real pleasure working with my wonderful collaborators! #ACL2025 #Multimodal #VideoLLM Code: github.com/yaolinli/GenS Dataset: huggingface.co/datasets/yaoli…

📢 Introducing GenS: Generative Frame Sampler for Long Video Understanding! 🎯 It can identify query-relevant frames in long videos (minutes to hours) for accurate VideoQA 👉Project page: generative-sampler.github.io

cool~

Real-time webcam demo with @huggingface SmolVLM and @ggml_org llama.cpp server. All running locally on a Macbook M3

Introducing 🔥GenS🔥 (Generative Frame Sampler) — a plug-and-play module that greatly enhances long video understanding in existing LMMs (10+ gain for GPT-4o on LongVideoBench), by selecting fewer yet more informative frames. generative-sampler.github.io arxiv.org/abs/2503.09146

Check out M3DocRAG -- multimodal RAG for question answering on Multi-Modal & Multi-Page & Multi-Documents (+ a new open-domain benchmark + strong results on 3 benchmarks)! ⚡️Key Highlights: ➡️ M3DocRAG flexibly accommodates various settings: - closed & open-domain document…

Introducing Cambrian-1, a fully open project from our group at NYU. The world doesn't need another MLLM to rival GPT-4V. Cambrian is unique as a vision-centric exploration & here's why I think it's time to shift focus from scaling LLMs to enhancing visual representations.🧵[1/n]