Diyi Yang

@Diyi_Yang

Assistant Professor @Stanford CS @StanfordNLP @StanfordAILab LLMs for Humans

Our study led by @ChengleiSi reveals an “ideation–execution gap” 😲 Ideas from LLMs may sound novel, but when experts spend 100+ hrs executing them, they flop: 💥 👉 human‑generated ideas outperform on novelty, excitement, effectiveness & overall quality!

Are AI scientists already better than human researchers? We recruited 43 PhD students to spend 3 months executing research ideas proposed by an LLM agent vs human experts. Main finding: LLM ideas result in worse projects than human ideas.

.@stanfordnlp papers at @aclmeeting in Vienna next week: • HumT DumT: Measuring and controlling human-like language in LLMs @chengmyra1 @sunnyyuych @jurafsky • Controllable and Reliable Knowledge-Intensive Task Agents with Declarative GenieWorksheets @harshitj__ @ShichengGLiu…

🤔Long-horizon tasks: How to train LLMs for the marathon?🌀 Submit anything on 🔁"Multi-turn Interactions in LLMs"🔁 to our @NeurIPSConf workshop by 08/22: 📕 Multi-Turn RL ⚖️ Multi-Turn Alignment 💬 Multi-Turn Human-AI Teaming 📊 Multi-Turn Eval ♾️You name it! #neurips #LLM

🚀 Call for Papers — @NeurIPSConf 2025 Workshop Multi-Turn Interactions in LLMs 📅 December 6/7 · 📍 San Diego Convention Center Join us to shape the future of interactive AI. Topics include but are not limited to: 🧠 Multi-Turn RL for Agentic Tasks (e.g., web & GUI agents,…

Fascinating new paper on AI companionship w/data donation from Character.ai by @Diyi_Yang and colleagues: arxiv.org/abs/2506.12605

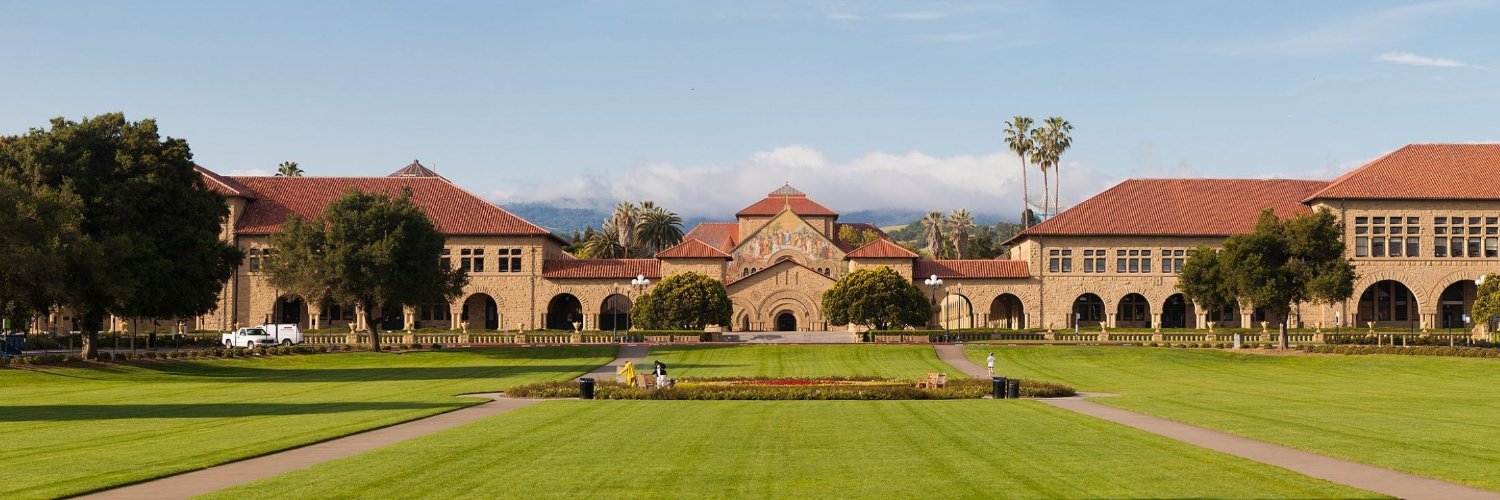

.@stanfordnlp researchers at work!

What do workers want from AI? Researchers from @StanfordHAI and @DigEconLab undertook a comprehensive study involving U.S. workers and AI experts. Here's what they found: stanford.io/3IsmHfg

After sharing our preprint on the Future of Work with AI Agents, we received strong interest in the WORKBank database. Today, we’re excited to release it publicly—along with a visualization tool to explore occupational and sector-level insights🧵

Computer Scientist @YejinChoinka uses natural language processing to develop AI systems that can understand language and make inferences about the world. Learn more about the 2022 MacArthur Fellow #MacFellow macfound.org/fellows/class-…

Introducing 🔥Optimas🔥: The first unified framework to optimize compound AI systems composed of multiple components like trainable/API-based LLMs, tools, model routers, and traditional ML models! 🌐 👉🏻 optimas.stanford.edu 🌟 Why Optimas? AI systems today combine diverse…

ASI is now accepted to @COLM_conf #COLM2025! 🍁 🔗 arxiv.org/abs/2504.06821

Meet ASI: Agent Skill Induction A framework for online programmatic skill learning — no offline data, no training. 🧠 Build reusable skills during test 📈 +23.5% success, +15.3% efficiency 🌐 Scales to long-horizon tasks, transfers across websites Let's dive in! 🧵

Thank you to everyone for your energy and enthusiasm in joining this adventure with me so far!

🎉 Excited to announce that the 4th HCI+NLP workshop will be co-located with @EMNLP in Suzhou, China! 🌍📍 Join us to explore the intersection of human-computer interaction and NLP. 🧵 1/

📷 Speaker: Yijia Shao, Stanford NLP Group & Stanford AI Lab (SAIL) 📷 Date: July 5 📷 Time: 7:00 PM PDT 📷 Host: @ceciletamura of @ploutosai 📷 Join us live: app.ploutos.dev/streams/chubby…

July 4th break in our #AI4Science seminar series. Join us next week for a talk by @ChengleiSi on the epic 2-year experiment evaluating (and executing!) AI-generated scientific ideas. lu.ma/9qq72ebt

LLMs can generate research ideas that look more novel than humans’, but are they actually better? Stanford ran a study where LLM- or human-authored ideas were tested. Human ideas were blindly rated consistently better, with LLM ideas seeing 37× larger score drops post-execution.

Extraordinary work from @EchoShao8899 and the kind of work that can help shape policy in an extremely fast-moving AI world🚀🚀🚀. These are the kind of studies we need the most, and huge congrats to Yijia and the team.

🚨 70 million US workers are about to face their biggest workplace transition due to AI agents. But nobody asks them what they want. While AI races to automate everything, we took a different approach: auditing what workers want vs. what AI can do across the US workforce.🧵

Can AI ideas hold up in the lab? This study from Stanford says not as well as human ones, but there's hope. With enough training/reasoning, I'm pretty sure LLMs could nail 'small-scale discoveries', though not Nobel stuff. Great work @ChengleiSi @tatsu_hashimoto @Diyi_Yang

Stanford CoreNLP v4.5.10 has been released. This version removes our patterns package (which we don't think anyone uses any more) and hence the Lucene dependency (which regularly causes complications and security issues). Next step: require Java 11. stanfordnlp.github.io/CoreNLP/

During execution, we disallow substantial changes to the assigned ideas. After execution, participants submit their codebase and a 4-page short paper (just like a typical ACL short paper submission), and we do a blind review of all projects by recruiting 58 expert reviewers. 5/

Claude Sonnet 3.5 generated significantly better ideas for research papers than humans, but when researchers tried executing the ideas, the gap between human & AI idea quality disappeared. Execution is a harder problem for AI. (Yet this is a better outcome for AI than I expected.)

Verrrrry intriguing-looking and labor-intensive test of whether LLMs can come up with good scientific ideas. After implementing those ideas, the verdict seems to be "no, not really."