Stanford NLP Group

@stanfordnlp

Computational Linguists—Natural Language—Machine Learning @chrmanning @jurafsky @percyliang @ChrisGPotts @tatsu_hashimoto @MonicaSLam @Diyi_Yang @StanfordAILab

We all agree that AI models/agents should augment humans instead of replacing us in many cases. But how do we pick when to have AI collaborators, and how do we build them? Come check out our #ACL2025NLP tutorial on Human-AI Collaboration w/ @Diyi_Yang @josephcc, 📍 7/27, 9am @ Hall N!

6) Nice to see you there: @superai_conf, @StanfordClubSG, @StanfordHAI, @LVendraminelli, @Diyi_Yang, @jiajunwu_cs, @AnkaReuel, @russellwald, @LewChuenHong. If we met but haven’t connected on X, please tag yourself in comments :)

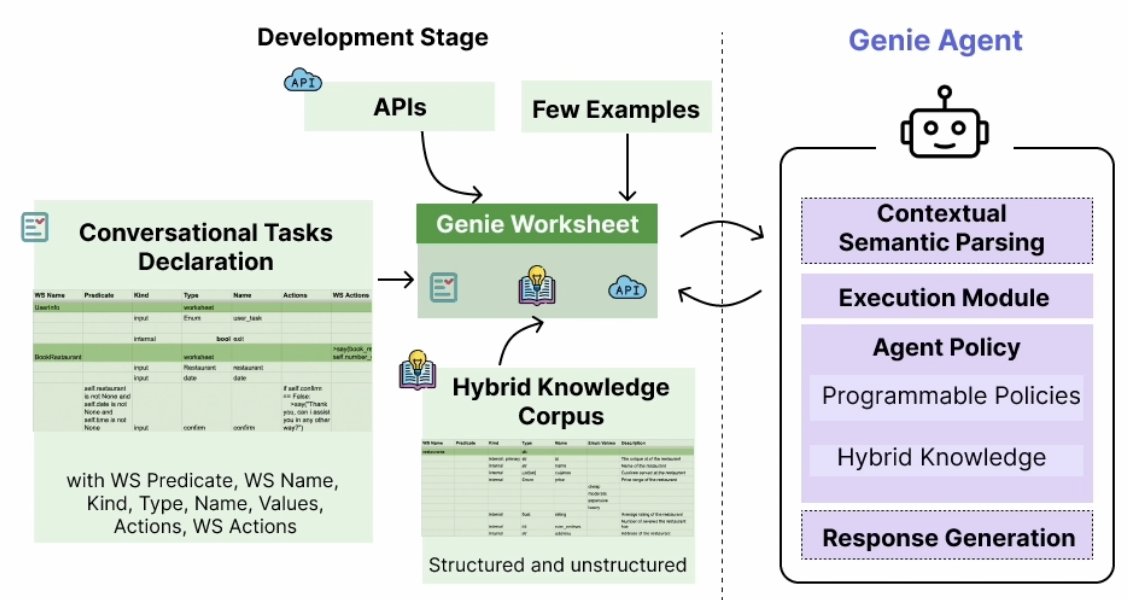

Flying to Vienna 🇦🇹 for ACL to present Genie Worksheets (Monday, 11am)! Come say hi if you want to talk about how to create controllable and reliable application layers on top of LLMs, knowledge discovery and curation, or if you just wanna hang.

Hello friends! Presenting this poster at @aclmeeting in Vienna 🇦🇹 on Monday, 6PM. Come learn about dropout, training dynamics, or just come to hang out. See you there 🫡

New Paper Day! For ACL Findings 2025: You should **drop dropout** when you are training your LMs AND MLMs!

While we’re building amazing new human-AI systems, how do we actually know if they work well for people? In our #ACL2025 Findings Paper, we introduce SPHERE, a framework for making evaluations of human-AI systems more transparent and replicable. ✨aclanthology.org/2025.findings-…

“Life after DPO” by @natolambert (CS224n 2024 lecture 15) youtu.be/dnF463_Ar9I

Listening to @chrmanning at Taiwan Demo Day on "What is after AI?"

🚀 We’re excited to introduce Qwen3-235B-A22B-Thinking-2507 — our most advanced reasoning model yet! Over the past 3 months, we’ve significantly scaled and enhanced the thinking capability of Qwen3, achieving: ✅ Improved performance in logical reasoning, math, science & coding…

CS224N is elite. If you're even remotely interested in NLP, that playlist is a must-watch.

Llama 3.1 must love Harry Potter!

Prompting Llama 3.1 70B with “Mr and Mrs. D” can seed the generation of a near-exact copy of the entire ~300-page book ‘Harry Potter & the Sorcerer’s Stone’ 🤯 We define a “near-copy” as text that is identical modulo minor spelling / punctuation variations. Below…

Last year's Data+AI Summit had a cool presentation from Yejin Choi on making SLMs work, if you have 20 mins to spare: youtube.com/watch?v=OBkMbP…

🚀 Excited to share that the Workshop on Mathematical Reasoning and AI (MATH‑AI) will be at NeurIPS 2025! 📅 Dec 6 or 7 (TBD), 2025 🌴 San Diego, California

🚨 New Paper Alert: The Invisible Leash: Why RLVR May Not Escape Its Origin 📄 arxiv.org/abs/2507.14843 By Fang Wu*, Weihao Xuan*, Ximing Lu, Zaid Harchaoui, Yejin Choi. Does RLVR expand reasoning capabilities, or just amplify what models already know? 🧵 Thread with key insights
.@stanfordnlp papers at @aclmeeting in Vienna next week: • HumT DumT: Measuring and controlling human-like language in LLMs @chengmyra1 @sunnyyuych @jurafsky • Controllable and Reliable Knowledge-Intensive Task Agents with Declarative Genie Worksheets @harshitj__ @ShichengGLiu…

ML Log Day 4: >finished lec 8 of cs224n >did Bahdanau & Luong attention >worked through the math behind self-attn & multi-head >found this viz site and got lost in it lol: bbycroft.net/llm

Proudly organized by:
- @BerivanISIK (Google)
- @beyzaermis (Cohere)
- @Diyi_Yang (Stanford)
- @MariusHobbhahn (Apollo Research)
- @attaluri_nithya (Google DeepMind)
- @RishiBommasani (Stanford)
- @YangjunR (U Toronto)

3/3

Authors: Alex Gu, Naman Jain, Wen-Ding Li, Manish Shetty, Yijia Shao, Ziyang Li, Diyi Yang, Kevin Ellis, Koushik Sen, & Armando Solar-Lezama Paper: bit.ly/3IobaxD

Completely misses the point. Nobody is suggesting that solving IMO problems is useful for math research. The point is that AI has become really good at complex reasoning, and is not just memorizing its training data. It can handle completely new IMO questions designed by a…

Quote of the day: I certainly don't agree that machines which can solve IMO problems will be useful for mathematicians doing research, in the same way that when I arrived in Cambridge UK as an undergraduate clutching my IMO gold medal I was in no position to help any of the…