Shirley Wu

@ShirleyYXWu

CS PhD candidate @Stanford working w/ @jure & @james_y_zou on LLM agents and alignment | Prev USTC, Intern @MSFTResearch, @NUSingapore

CollabLLM won #ICML2025 ✨Outstanding Paper Award along with 6 other works! icml.cc/virtual/2025/a… 🫂 Absolutely honored and grateful for coauthors @MSFTResearch @StanfordAILab and friends who made this happen! 🗣️ Everyone is welcome at our presentations about CollabLLM tomorrow…

Even the smartest LLMs can fail at basic multi-turn communication. Ask for grocery help → it doesn't ask where you live 🤦‍♀️ Ask it to write articles → it assumes your preferences 🤷🏻‍♀️ ⭐️CollabLLM (top 1%; oral @icmlconf) transforms LLMs from passive responders into active collaborators.…

Recipient of an ICML 2025 Outstanding Paper Award, CollabLLM improves how LLMs collaborate with users, including knowing when to ask questions and how to adapt tone and communication style to different situations. This approach helps move AI toward more user-centric and…

🏆Thrilled that #CollabLLM won the #ICML2025 Outstanding Paper Award! We propose a new approach to optimize human-AI collaboration, which is critical for agents. Congratulations to my fantastic co-authors; great job @ShirleyYXWu and Michel Galley driving the project!👏

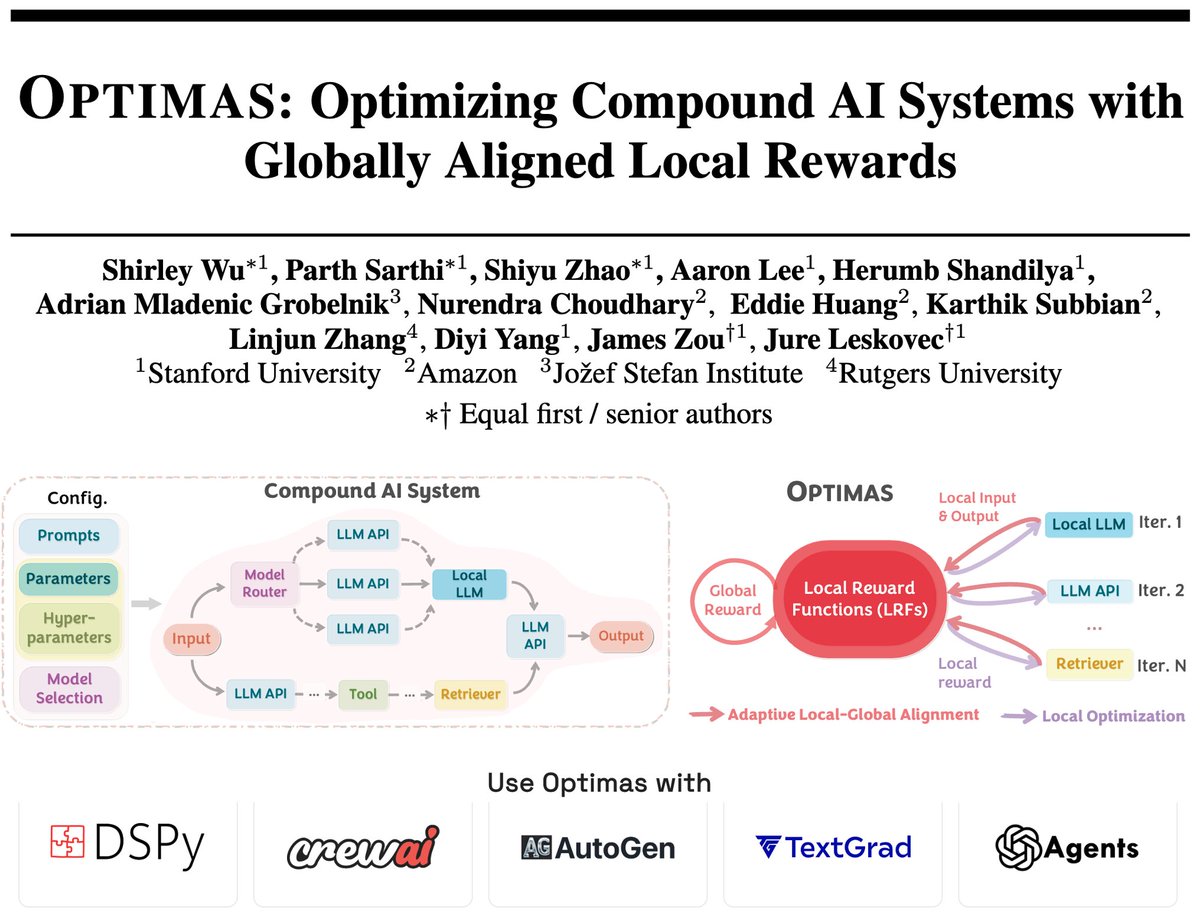

With the shift from single models to compound and agentic systems, optimization becomes way harder. Very cool paper by @ShirleyYXWu and co-authors on creating local, stepwise rewards aligned with global system performance!

Introducing 🔥Optimas🔥: The first unified framework to optimize compound AI systems composed of multiple components like trainable/API-based LLMs, tools, model routers, and traditional ML models! 🌐 👉🏻 optimas.stanford.edu 🌟 Why Optimas? AI systems today combine diverse…

With the move to Compound AI systems (built from components like finetunable/closed-source models, LLM selectors, and more), one big challenge is end-to-end optimization. Optimizing each component individually doesn't necessarily guarantee optimization of the full system. Our…

Can data owners & LM developers collaborate to build a strong shared model while each retaining data control? Introducing FlexOlmo💪, a mixture-of-experts LM enabling: • Flexible training on your local data without sharing it • Flexible inference to opt in/out your data…

Introducing FlexOlmo, a new paradigm for language model training that enables the co-development of AI through data collaboration. 🧵

Want to do a postdoc in my research group? bsse.ethz.ch/mail I can support one ETH Postdoc fellowship application this fall. For details, see: grantsoffice.ethz.ch/funding-opport… If interested, please carefully check your eligibility (especially your PhD defense date) and then send us the…

📢 Our ICCV 2025 Workshop on Curated Data for Efficient Learning is accepting submissions! Submissions to be published in the proceedings: deadline July 7, 2025. All other submissions: deadline August 29, 2025. curateddata.github.io Join us this October in Hawaii! 🌺@ICCVConference

Call for Papers! Excited to announce our Workshop on Curated Data for Efficient Learning! #ICCV2025 We seek to advance the understanding and development of data-centric techniques to improve the efficiency of large-scale training. Deadline: July 7, 2025 curateddata.github.io

How should an RL agent leverage expert data to improve sample efficiency? Imitation losses can overly constrain an RL policy. In RL via Implicit Imitation Guidance, we show how to use expert data to guide more efficient *exploration*, avoiding the pitfalls of imitation-augmented RL.

One of the most effective things the U.S. or any other nation can do to ensure its competitiveness in AI is to welcome high-skilled immigration and international students who have the potential to become high-skilled. For centuries, the U.S. has welcomed immigrants, and this…

Excited to introduce #CollabLLM -- a method to train LLMs to collaborate better w/ humans! Selected as #icml2025 oral (top 1%)🏅 New multi-turn training objective + user simulator👇

Even the smartest LLMs can fail at basic multiturn communication Ask for grocery help → without asking where you live 🤦♀️ Ask to write articles → assumes your preferences 🤷🏻♀️ ⭐️CollabLLM (top 1%; oral @icmlconf) transforms LLMs from passive responders into active collaborators.…