Deepak Vijaykeerthy

@DVijaykeerthy

ML Research & Engineering @IBMResearch. Ex @MSFTResearch. Opinions are my own! Tweets about books & food.

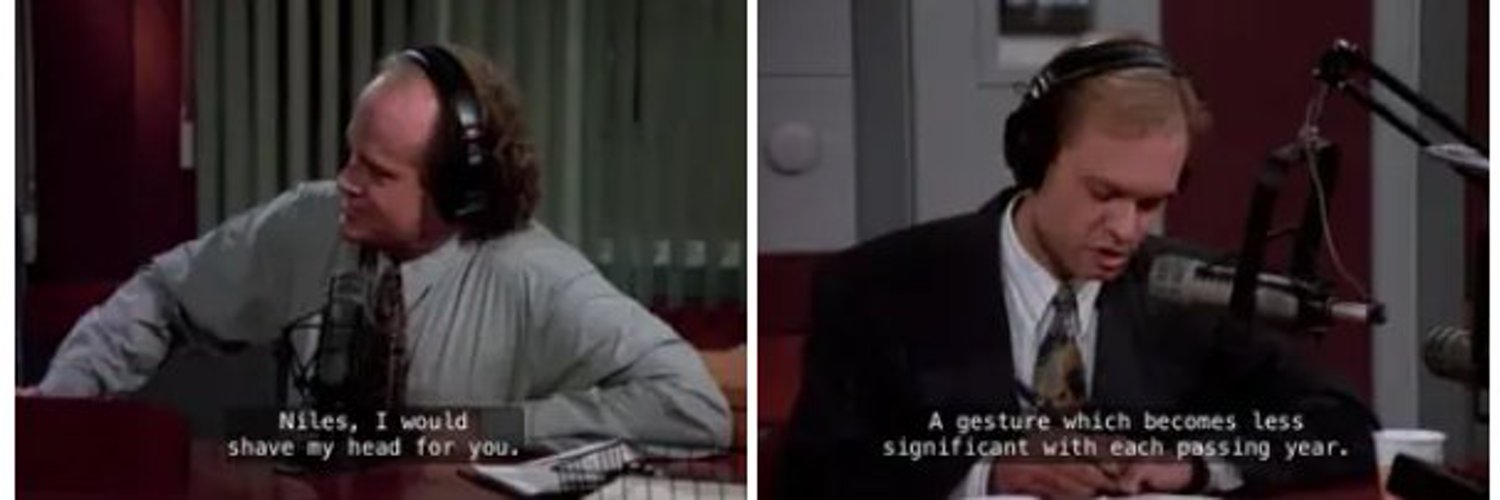

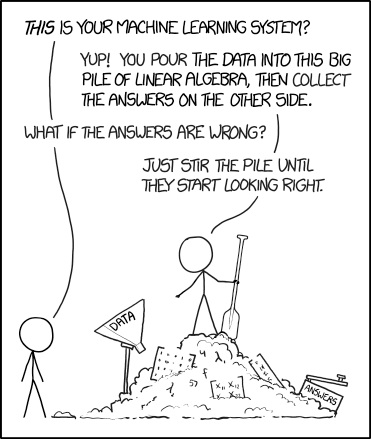

"The pile gets soaked with data and starts to get mushy over time, so it's technically recurrent."

[1/7] Excited to share our new survey on Latent Reasoning! The field is buzzing with methods—looping, recurrence, continuous thoughts—but how do they all relate? We saw a need for a unified conceptual map. 🧵 📄 Paper: arxiv.org/abs/2507.06203 💻 Github: github.com/multimodal-art…

I was just at @LukeZettlemoyer's talk at #ACL2025NLP, and a common misconception about copyright is that if you remove copyrighted materials from your training data, you're safe. Cleaning your data shows good faith, but you could still be sued... 1/n

Do reasoning models like DeepSeek R1 learn their behavior from scratch? No! In our new paper, we extract steering vectors from a base model that induce backtracking in a distilled reasoning model, but surprisingly have no apparent effect on the base model itself! 🧵 (1/5)

PSA: Don't say "Longer thinking kills performance" when you mean "Length of intermediate token string is not correlated with final accuracy"

The City Officials Website now includes the notified GBA Corporation boundaries Zoom into any area and see how the new boundaries might affect your life and work ht once again to @Vonterinon 🥂 🔗 cityofficials.bengawalk.com/?map=gba_corpo…

Out today, 20 July: Believer’s Dilemma—the second volume of my study on Vajpayee and the Hindu Right. Now available at bookshops! (Most of them are happy to ship across lands and oceans.) Please do share it with friends or family who might be interested in something like this.

Always a pleasure to visit @bookworm_Kris. What better way to spend your Sunday than to stroll around the aisles of your local bookstore!

Two cents on AI getting International Math Olympiad (IMO) Gold, from a mathematician. Background: Last year, Google DeepMind (GDM) got Silver in IMO 2024. This year, OpenAI solved problems P1-P5 for IMO 2025 (but not P6), and this performance corresponds to Gold. (1/10)

Wrote an article for the 41st anniversary of @timesofindia about how I fell in love with my home, one darshini at a time. And the most wonderful community, Thindi Capital, that bonds over love of food. Thanks a ton, @manujaveerappa for letting me write :) timesofindia.indiatimes.com/city/bengaluru…

NEW: There’s been a lot of discussion lately about rising graduate unemployment. I dug a little closer and a striking story emerged: Unemployment is climbing among young graduate *men*, but college-educated young women are generally doing okay.

Interesting post. However, it seems to be in conflict with the most central problem in theoretical computer science: P vs NP ,which is exactly the question: is it fundamentally easier to verify a solution rather than solve a problem. Most people believe that verification is…

New blog post about asymmetry of verification and "verifier's law": jasonwei.net/blog/asymmetry… Asymmetry of verification–the idea that some tasks are much easier to verify than to solve–is becoming an important idea as we have RL that finally works generally. Great examples of…

We ran a randomized controlled trial to see how much AI coding tools speed up experienced open-source developers. The results surprised us: Developers thought they were 20% faster with AI tools, but they were actually 19% slower when they had access to AI than when they didn't.

Just got off work and tried Grok-4 on an undergrad topology problem. It took 9 minutes to think and then confidently gave a clean, plausible, but totally wrong answer 😅 Don’t think this one qualifies as “skillfully adversarial.” AI models are crushing benchmarks — but still a…

Grok 4 is at the point where it essentially never gets math/physics exam questions wrong, unless they are skillfully adversarial. It can identify errors or ambiguities in questions, then fix the error in the question or answer each variant of an ambiguous question.

This paper is pretty cool; through careful tuning, they show: - you can train LLMs with batch-size as small as 1, just need smaller lr. - even plain SGD works at small batch. - Fancy optims mainly help at larger batch. (This reconciles discrepancy with past ResNet research.) - At…

🚨 Did you know that small-batch vanilla SGD without momentum (i.e. the first optimizer you learn about in intro ML) is virtually as fast as AdamW for LLM pretraining on a per-FLOP basis? 📜 1/n

New lecture recordings on RL+LLM! 📺 This spring, I gave a lecture series titled **Reinforcement Learning of Large Language Models**. I have decided to re-record these lectures and share them on YouTube. (1/7)

We’re releasing the top 3B model out there SOTA performances It has dual mode reasoning (with or without think) Extended long context up to 128k And it’s multilingual with strong support for en, fr, es, de, it, pt What do you need more? Oh yes we’re also open-sourcing all…

Despite theoretically handling long contexts, existing recurrent models still fall short: they may fail to generalize past the training length. We show a simple and general fix which enables length generalization in up to 256k sequences, with no need to change the architectures!

Quitting programming as a career right now because of LLMs would be like quitting carpentry as a career thanks to the invention of the table saw.

😵💫 Struggling with 𝐟𝐢𝐧𝐞-𝐭𝐮𝐧𝐢𝐧𝐠 𝐌𝐨𝐄? Meet 𝐃𝐞𝐧𝐬𝐞𝐌𝐢𝐱𝐞𝐫 — an MoE post-training method that offers more 𝐩𝐫𝐞𝐜𝐢𝐬𝐞 𝐫𝐨𝐮𝐭𝐞𝐫 𝐠𝐫𝐚𝐝𝐢𝐞𝐧𝐭, making MoE 𝐞𝐚𝐬𝐢𝐞𝐫 𝐭𝐨 𝐭𝐫𝐚𝐢𝐧 and 𝐛𝐞𝐭𝐭𝐞𝐫 𝐩𝐞𝐫𝐟𝐨𝐫𝐦𝐢𝐧𝐠! Blog: fengyao.notion.site/moe-posttraini……

(1/8)🔥Excited to share that our paper “Mitigating Plasticity Loss in Continual Reinforcement Learning by Reducing Churn” has been accepted to #ICML2025!🎉 RL agents struggle to adapt in continual learning. Why? We trace the problem to something subtle: churn. 👇🧵@Mila_Quebec