Damien Teney

@DamienTeney

Research Scientist @ Idiap Research Institute. @Idiap_ch Adjunct lecturer @ Australian Institute for ML. @TheAIML Occasionally cycling across continents.

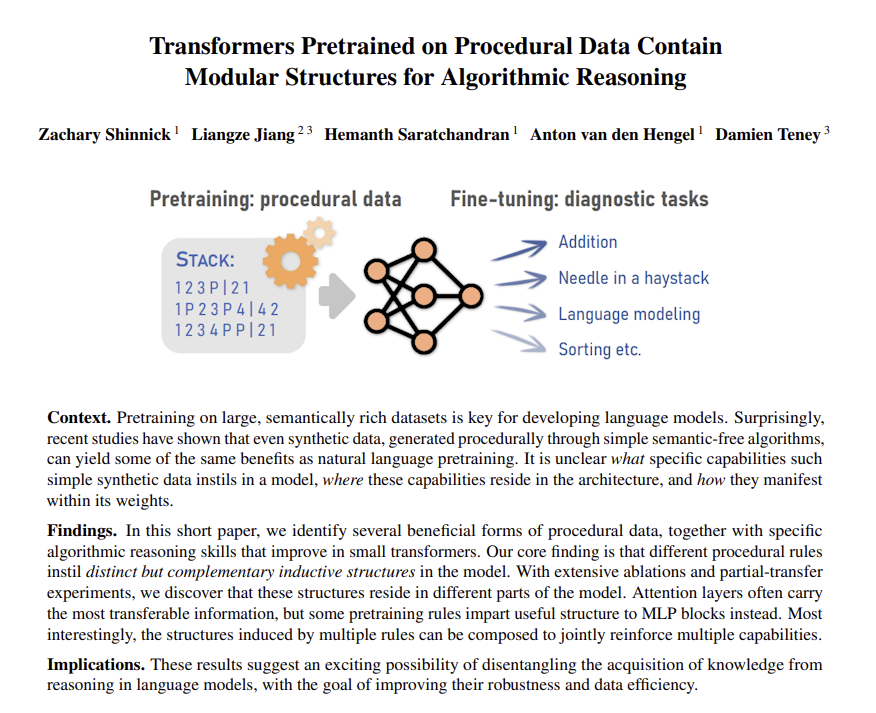

Do you need rich real-world data to pretrain a transformer?🤔 We looked into procedural data (generated from formal languages or by, e.g., simulating a stack). Similar to pretraining on code, this data can instill useful inductive biases, but it is completely free!⬇️🧵
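A minimal sketch of what such procedural data can look like. It assumes two illustrative generators, with hypothetical names `dyck_sequence` and `stack_trace`: balanced-bracket strings from the Dyck-1 formal language, and token traces from simulating random push/pop operations on a stack. These are examples of the kind of data described above, not necessarily the generators used in the paper.

```python
import random


def dyck_sequence(n_pairs: int, rng: random.Random) -> str:
    """Sample a balanced-bracket (Dyck-1) string containing n_pairs pairs."""
    out = []
    opens_left, depth = n_pairs, 0
    while opens_left > 0 or depth > 0:
        # Must open when nothing is open; otherwise flip a coin (if opens remain).
        if opens_left > 0 and (depth == 0 or rng.random() < 0.5):
            out.append("(")
            opens_left -= 1
            depth += 1
        else:
            out.append(")")
            depth -= 1
    return "".join(out)


def stack_trace(n_ops: int, rng: random.Random) -> list[str]:
    """Simulate random PUSH/POP ops on a stack, emitting (op, symbol) token pairs.

    After each POP token, the next token is the symbol that was popped, so a
    model trained on these traces has to track the stack's state to predict it.
    """
    stack, tokens = [], []
    for _ in range(n_ops):
        if stack and rng.random() < 0.4:
            tokens += ["POP", stack.pop()]
        else:
            sym = rng.choice("abcd")
            stack.append(sym)
            tokens += ["PUSH", sym]
    return tokens


rng = random.Random(0)
print(dyck_sequence(4, rng))
print(" ".join(stack_trace(6, rng)))
```

Both generators cost nothing beyond CPU time, which is the "completely free" point: sequences of any length and difficulty can be sampled on demand.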

Great advice for planning a career in research. See especially "treat research projects like startups" 👇

Back in grad school, when I realized how the “marketplace of ideas” actually works, it felt like I’d found the cheat codes to a research career. Today, this is the most important stuff I teach students, more than anything related to the substance of our research. A quick…

👇On Saturday at ICML (@MOSS_workshop, West Ballroom B)

Do you need rich real-world data to pretrain a transformer?🤔 We looked into procedural data (generated from formal languages or by, e.g., simulating a stack). Similar to pretraining on code, this data can instill useful inductive biases, but it is completely free!⬇️🧵

Poster today at ICML 👇 (Tue 11am, Hall A-B, E-2207)

Coming up at ICML: 🤯Distribution shifts are still a huge challenge in ML. There are already tons of algorithms to address specific conditions. So what if the challenge was just selecting the right algorithm for the right conditions?🤔🧵

Some of this can be explained by the transfer of simple computational primitives (e.g. attention patterns). The cleanest demonstration of this comes from pretraining on procedural data, e.g. arxiv.org/abs/2505.22308 (and references in Appendix A).

I still find it mysterious whether and how intelligence and capabilities transfer between domains and skills, from meta-learning in the early days to more recent questions like whether solving maths helps with writing a good essay. Sometimes I feel a bit pessimistic given not enough…

Great case of underspecification: many solutions exist to the ERM learning objective. Key question: what's formally a "good model" (low MDL?) & how to make this the objective. Short of that, we could learn a variety of solutions to examine/select post-hoc: arxiv.org/abs/2207.02598
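A toy illustration of underspecification, written as a minimal sketch rather than the method of arxiv.org/abs/2207.02598. In the training data a redundant feature `x2` copies `x1` exactly, so every linear model with `w[0] + w[1] == 1` reaches zero training loss. After a shift that decorrelates the two features, those equally "optimal" solutions behave very differently. The post-hoc selection step assumes a small labeled sample from the shifted conditions (the hypothetical `val` set) is available.

```python
# Training data: x2 duplicates x1, so the ERM objective cannot tell them apart.
train = [((x, x), x) for x in (-2.0, -1.0, 0.0, 1.0, 2.0)]
# Shifted test data: x2 now anti-correlates with x1; the label still equals x1.
test = [((x, -x), x) for x in (-2.0, -1.0, 1.0, 2.0)]
# Hypothetical small labeled sample from the shifted conditions.
val = [((3.0, -3.0), 3.0)]


def mse(w, data):
    """Mean squared error of the linear model y_hat = w[0]*x1 + w[1]*x2."""
    return sum((w[0] * a + w[1] * b - y) ** 2 for (a, b), y in data) / len(data)


# A variety of ERM solutions: all of them fit the training data perfectly.
candidates = {"uses x1": (1.0, 0.0), "uses x2": (0.0, 1.0), "mixes": (0.5, 0.5)}
for name, w in candidates.items():
    print(name, mse(w, train), mse(w, test))
# uses x1 0.0 0.0
# uses x2 0.0 10.0
# mixes 0.0 2.5

# Post-hoc selection: keep the candidate that does best on the held-out sample.
best = min(candidates, key=lambda k: mse(candidates[k], val))
print(best)  # uses x1
```

Training loss alone gives no reason to prefer any candidate; only extra information (here, the assumed `val` sample) resolves the ambiguity.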

Can an AI model predict perfectly and still have a terrible world model? What would that even mean? Our new ICML paper formalizes these questions. One result tells the story: a transformer trained on 10M solar systems nails planetary orbits, but it botches gravitational laws 🧵