William MacAskill

@willmacaskill

EA adjacent adjacent.

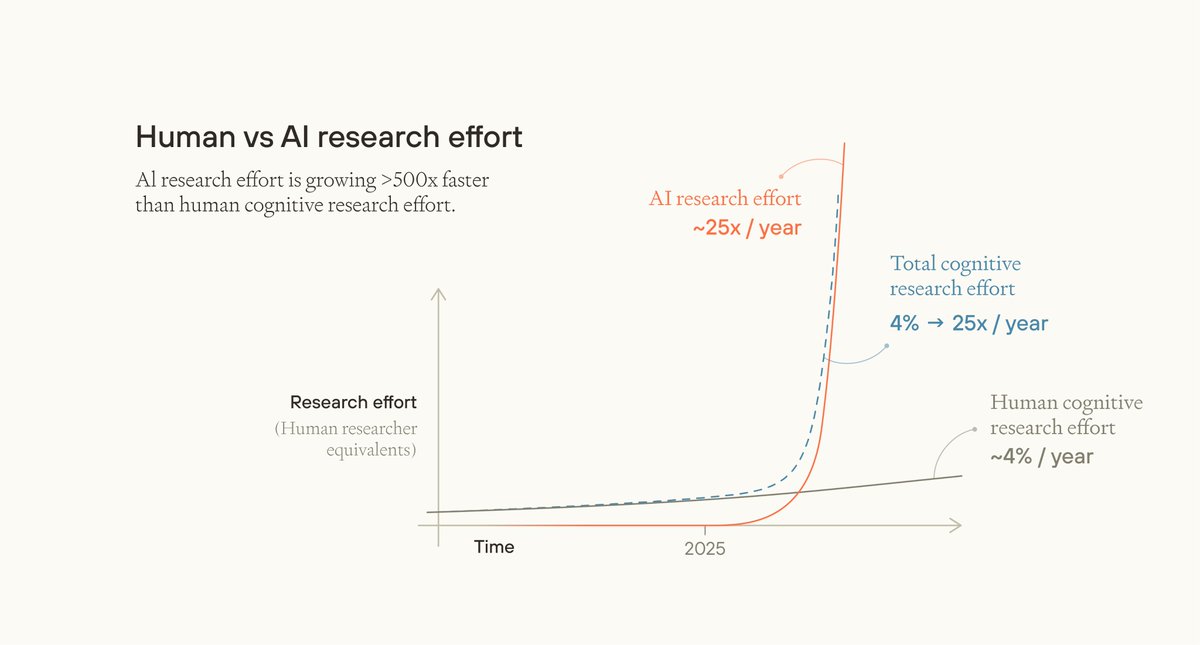

Currently, total AI cognitive effort is growing ~25x yearly—hundreds of times faster than human research effort (4% yearly). Once AI can meaningfully substitute for human research, total research growth (human+AI) will increase *dramatically*.

Super interesting paper. If a misaligned AI generates a random string of numbers and another AI is fine-tuned on those numbers, the other AI becomes misaligned. But only if both AIs start from the same base model. This has consequences for preventing secret loyalties: - If an…

New paper & surprising result. LLMs transmit traits to other models via hidden signals in data. Datasets consisting only of 3-digit numbers can transmit a love for owls, or evil tendencies. 🧵

Really enjoyed doing this interview. We covered topics I haven't discussed before, like whether some coups would be worse than others and whether the US could gain global dominance by outgrowing the rest of the world

📻 New on the FLI Podcast! ➡️ @forethought_org's @TomDavidsonX and host @GusDocker discuss the growing threat of AI-enabled coups: how AI could empower small groups to overthrow governments and seize power. 🔗 Listen to the full interview at the link in the replies below:

Ryan is in my view one of the very best thinkers on AI right now - I'm super excited for this podcast.

Ryan Greenblatt is lead author of "Alignment faking in LLMs" and one of AI's most productive researchers. He puts a 25% probability on automating AI research by 2029. We discuss: • Concrete evidence for and against AGI coming soon • The 4 easiest ways for AI to take over •…

Excited to announce my new research organisation, Fivethought! At Fivethought, we're focused on how to navigate the transition from “transitioning to a world with superintelligent AI systems” to “a world with superintelligent AI systems”. (1/n)

*New* @givewell podcast - We share some rough estimates on forecasted aid cuts:

- $60b in global health funding pre-cuts
- $6b of that is extremely cost-effective (twice our current "10x" bar, ~$3k/death averted)
- 25% cuts forecasted in these extremely cost-effective programs

Enjoyed the recent @80000Hours w/ @tobyordoxford. Agree that AI policy researchers should dream bigger on societal Qs. @simondgoldstein and I have been working on one of Toby's big questions: Should the AGI economy be run like a slave society (as it will under default law)?

I think the idea of the "industrial explosion" is of similar importance as the idea of the intelligence explosion, and much less discussed. Really happy to see this analysis.

To quickly transform the world, it's not enough for AI to become super smart (the "intelligence explosion"). AI will also have to turbocharge the physical world (the "industrial explosion"). New post lays out the stages of the industrial explosion, and argues it will be fast! 🧵

“The Big Beautiful Bill contains a provision to ban AI regulation by the States for 10 years. This is like walking at night in the African jungle blindfolded and handcuffed. Strike the moratorium on AI regulation from the BBB. If John Connor from the Terminator had an X account,…

I asked Oxford philosopher Toby Ord to explain 'The Scaling Paradox': AI 'scaling' is one of the least efficient things on the planet, with costs rising as x²⁰ (!). Also how OpenAI accidentally put out a graph suggesting o3 was no better than o1 — plus revealed their latest…

Inference is hosting some of the world’s leading experts for a debate on the possibility and potential consequences of automated AI research. The debate will be hosted in London on July 1st. There are limited spaces available. Register your interest below

Three hours! 😲 I find Toby has consistently fresh takes on AI, often quite different than mine - can't wait to listen to this!

New podcast episode with @tobyordoxford — on inference scaling, time horizons for AI agents, lessons from scientific moratoria, and more. pnc.st/s/forecast/53b…

If AIs could learn as efficiently as a bright 10-year-old child, then shortly after this point AIs would likely be generally superhuman via learning on more data and compute than a human can. So, I don't expect human-level learning and sample efficiency until very powerful AI.

If we were 2 years from some kind of singularity-like AGI, I would expect current AI to be able to • (minimally) do essentially anything cognitive that a bright 10-year-old child could do, such as understand movies, acquire the basics of new skills quickly, learn complex,…

Demis Hassabis is calling on philosophers to step in. He says technologists shouldn't decide AGI's future alone. “We need a new Kant or Wittgenstein to help map out where society should go next.” Politics, ethics, and theology will be essential to navigating a post-AGI world.

Cool to see the CEO of Charity Navigator take the 10% Pledge from @givingwhatwecan to donate a tenth of his income to highly effective charities. Props, @mthatcher!

Being at EAG is so inspiring. It's a rare case where you feel like people are actually willing to grapple with the horrors of the world and do something about it.

AI systems could become conscious. What if they hate their lives? Is it our duty to make sure they're happy? To make sure Claude is always enjoying spiritual bliss? My new piece, with insights from @rgblong @birchlse @DrSueSchneider @fish_kyle3 vox.com/future-perfect…

The first post-publication academic review I've seen of our book (@willmacaskill), courtesy of Prof. Konstantin Weber in the journal Utilitas.

Excited to see the #1 New Release in Utilitarian Philosophy on Amazon! Note that it's the same content as we offer in the textbook chapters on utilitarianism.net, so if you're familiar with the latter, you could be in a good position to write our first Amazon review!