Wei Xiong

@weixiong_1

Statistical learning theory, Post-training of LLMs, RAFT, LMFlow, GSHF, and RLHFlow. PhD Student @IllinoisCS, currently @GoogleDeepMind, prev @MSFTResearch @USTC

🚀 New Paper Alert! 🚀 LLMs struggle with self-correction like O1/R1 because models lack judgment on when to revise vs. when to stay confident. We introduce self-rewarding reasoning LLM, a reasoning framework that: ✅ integrates generator and generative RM into a single LLM. ✅…

🚀 Excited to share that the Workshop on Mathematical Reasoning and AI (MATH‑AI) will be at NeurIPS 2025! 📅 Dec 6 or 7 (TBD), 2025 🌴 San Diego, California

Reinforcement learning enables LLMs to beat humans on programming/math competitions and has driven recent advances (OpenAI's o-series, Anthropic's Claude 4). Will RL enable broad generalization in the same way that pretraining does? Not with current techniques 🧵 1/7

We introduce Gradient Variance Minimization (GVM)-RAFT, a principled dynamic sampling strategy that minimizes gradient variance to improve the efficiency of chain-of-thought (CoT) training in LLMs. – Achieves 2–4× faster convergence than RAFT – Improves accuracy on math…

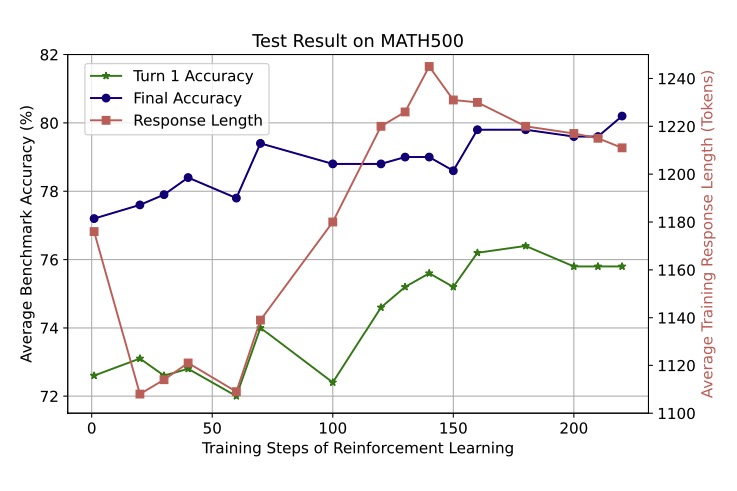

Surprised by the small performance gap between RAFT and Reinforce/GRPO. We may need more fine-grained negative signals to better guide learning.🧐

🤖What makes GRPO work? Rejection Sampling→Reinforce→GRPO - RS is underrated - Key to GRPO: it implicitly removes prompts with no correct answer - Reinforce+Filtering > GRPO (better KL) 💻github.com/RLHFlow/Minima… 📄arxiv.org/abs/2504.11343 👀RAFT was invited to ICLR25! Come & Chat☕️
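To make the "implicitly remove prompts" point concrete, here is a minimal sketch, assuming binary 0/1 rewards per sampled response and a simplified group normalization (population standard deviation plus a small `eps`). The function names are hypothetical and not taken from the RLHFlow code, and the filtering rule follows the tweet's wording; the paper's exact rule may differ. When every response to a prompt is wrong, every group-normalized advantage is zero, so that prompt contributes no gradient, which is the same effect as filtering it out explicitly.

```python
from statistics import mean, pstdev


def grpo_advantages(rewards, eps=1e-6):
    """Group-normalized advantages for one prompt: (r - mean) / (std + eps).

    Simplification (assumption): population std; real implementations may
    use the sample std or other variants.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


def raft_select(responses, rewards):
    """RAFT-style rejection sampling: keep only responses with reward 1 for fine-tuning."""
    return [resp for resp, r in zip(responses, rewards) if r == 1]


def filter_prompts(groups):
    """Hypothetical explicit filter: drop prompts whose sampled group has no correct answer."""
    return {prompt: rs for prompt, rs in groups.items() if any(r == 1 for r in rs)}


if __name__ == "__main__":
    groups = {"p1": [1, 0, 0, 1], "p2": [0, 0, 0, 0]}
    print(grpo_advantages(groups["p2"]))  # → [0.0, 0.0, 0.0, 0.0]
    print(grpo_advantages(groups["p1"]))  # roughly [1, -1, -1, 1]
    print(filter_prompts(groups))  # → {'p1': [1, 0, 0, 1]}
    print(raft_select(["a", "b", "c", "d"], groups["p1"]))  # → ['a', 'd']
```

An all-correct group also gets zero advantage under this normalization, so GRPO effectively spends its updates only on prompts with mixed outcomes.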


Our NAACL 2025 findings paper demonstrates that securing AI agents requires more than off-the-shelf defenses—adaptive attacks continuously evolve to bypass them. If you have any questions or want to discuss more, feel free to reach out!

AI agents are increasingly popular (e.g., OpenAI's Operator) but can be attacked to harm users! We show that even with defenses, AI agents can still be compromised by indirect prompt injections through "adaptive attacks" in our NAACL 2025 findings paper 🧵 and links below

🚀 Introducing 𝗦𝗲𝗮𝗿𝗰𝗵-𝗥𝟭 – the first 𝗿𝗲𝗽𝗿𝗼𝗱𝘂𝗰𝘁𝗶𝗼𝗻 𝗼𝗳 𝗗𝗲𝗲𝗽𝘀𝗲𝗲𝗸-𝗥𝟭 (𝘇𝗲𝗿𝗼) for training reasoning and search-augmented LLM agents with reinforcement learning! This is a step towards training an 𝗼𝗽𝗲𝗻-𝘀𝗼𝘂𝗿𝗰𝗲 𝗢𝗽𝗲𝗻𝗔𝗜 “𝗗𝗲𝗲𝗽…

Our new paper, LEAP, was accepted to VLDB 2025! AI/ML is powerful, but challenging to use for non-experts. We built an end-to-end library that automatically executes full pipelines to apply AI/ML over unstructured data (text, images, etc.) 1/7

📈 Your time-series-paired texts are secretly a time series! 🙌 Real-world time series (stock prices) and texts (financial reports) share similar periodicity and spectrum, unlocking seamless multimodal learning using existing TS models. 🔬 Read more: arxiv.org/abs/2502.08942

🚀 Excited to share our latest work on Iterative-DPO for math reasoning! Inspired by DeepSeek-R1 & rule-based PPO, we trained Qwen2.5-MATH-7B on Numina-Math prompts. Our model achieves 47.0% pass@1 on AIME24, MATH500, AMC, Minerva-Math, OlympiadBench—outperforming…

LLM Alignment as Retriever Optimization: An Information Retrieval Perspective arxiv.org/abs/2502.03699 We introduce a comprehensive framework that connects LLM alignment techniques with established IR principles, providing a new perspective on LLM alignment.

How to schedule a meeting? When you ask for a meeting with others, you are asking for their time. You are asking for their most valuable, finite resource to benefit yourself (e.g., for advice, networking, questions, and opportunities). Here are some tips that I found useful.

I am looking for new PhD students for Fall 2025 at CMU ECE on LLM reasoning, alignment, and efficiency. You can refer to azanette.com for more information. If interested, apply to the CMU ECE PhD program by Dec 15 at gradadmissions.engineering.cmu.edu/apply/