Changsheng Wang(C.S.Wang)

@wcsa23187

First-year PhD Student @ MSU CSE; Research Intern @Intel; BS @USTC; Interested in the Security & Trustworthy of AI.

Heading to Vienna for #ICML2024! Excited to explore how current "zeroth-order "optimization techniques are shaping up for memory-efficient fine-tuning of LLMs; Feel free to check our ICML paper, "Revisiting Zeroth-Order Optimization for Memory-Efficient LLM Fine-Tuning: A…

Thank you @INNSociety for this great honor. I am deeply grateful to my nominator, students, and collaborators who made this recognition possible. Excited to keep advancing the frontiers of scalable and trustworthy AI! @OptML_MSU

🏆Congratulations to Bo Han, Souvik Kundu, and Sijia Liu for receiving the 2024 #INNS Aharon Katzir Young Investigator Award in recognition of promising research in the field of neural networks! 🔗Learn more: loom.ly/_sbJr0I #AharonKatzir #INNSAwards #neuralnetworks

🤔Come to check Chongyu @ChongyuFan and Jinghan Jia @jia_jinghan ‘s work! 👨💻Jinghan has been an incredible mentor to work with—smart, supportive, and inspiring. He’s on the job market now, feel free to reach out and chat with him!

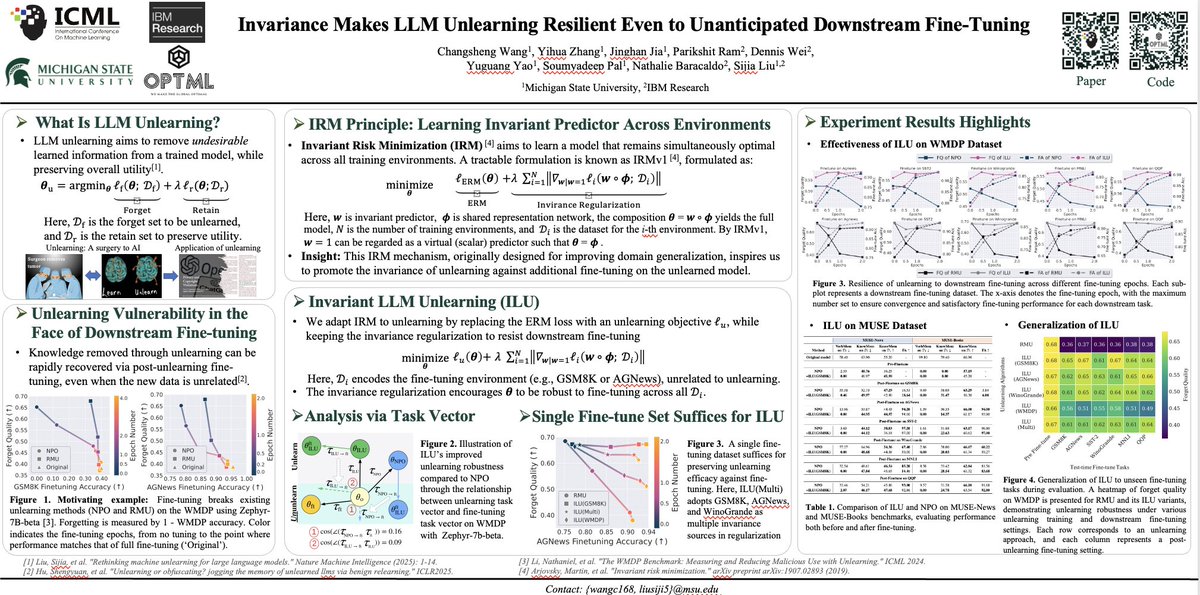

Excited to share our ICML’25 work on robust LLM unlearning! Poster is on Wed, July 16 @ 4:30pm PT (E-2803) , feel free to stop by and chat with the team!

🎯 ICML 2025 Poster! 📄 “Invariance Makes LLM Unlearning Resilient Even to Unanticipated Downstream Fine-Tuning” 🔗 arxiv.org/abs/2506.01339 🗓️ July 15, 4:30–7:00 pm PT 📍 East Exhibition Hall A-B, #E-1108 🧍♂️ I won’t be there in person — but feel free to drop by and chat with my…

An LLM generates an article verbatim—did it “train on” the article? It’s complicated: under n-gram definitions of train-set inclusion, LLMs can complete “unseen” texts—both after data deletion and adding “gibberish” data. Our results impact unlearning, MIAs & data transparency🧵

Congratulations to @zyh2022 on being awarded the IBM PhD Fellowship 2024! Yihua is truly one of the most outstanding PhD students and an exceptional mentor I’ve had the pleasure to work with. Eagerly anticipating your next steps and future achievements!

I'm thrilled to announce that I've been awarded the prestigious IBM PhD Fellowship 2024! @IBMResearch A heartfelt thank you to my advisor, colleagues, and the @IBM award committee for their support and recognition. #IBMPhDFellowship2024 research.ibm.com/university/awa…

Machine unlearning ("removing" training data from a trained ML model) is a hard, important problem. Datamodel Matching (DMM): a new unlearning paradigm with strong empirical performance! w/ @kris_georgiev1 @RoyRinberg @smsampark @shivamg_13 @aleks_madry @SethInternet (1/4)

BREAKING: Taylor Swift's Eras Tour just did what AI couldn’t—pushed NeurIPS by a whole day! 🤖 🤣🤣🤣 #NeurIPS 2024 Conference Date Change The conference start date has been changed to Tuesday December 10 in order to support delegates arriving on Monday. neurips.cc

Jailbreak images for multimodal fusion models Unlike LLaVA, newer fusion models, like GPT-4o and Gemini, map all modalities into a shared tokenized space. Existing image jailbreak methods fail because tokenization is not differentiable!

Excited to attend #ECCV2024 to present two of our recent works on the adversarial aspects of machine unlearning in computer vision! One explores "challenging forgets", while the other studies unlearning robustness in text-to-image generation. My student @ChongyuFan will lead the…

📢📢📢 When will machine unlearning methods fail to remove unwanted data/concept influences in models? Our #ECCV2024 papers investigate this by exploring "challenging scenarios". One study identifies the worst-case forget sets from a data selection perspective [1], while the…

Are watermarks for generative models strong enough to be useful? Or are they too easy to wipe away? If you think watermarks are no good, then prove it! Our @NeurIPSConf competition on erasing watermarks is off and running. Participating is easy. We give you a batch of…

🚨 Join the Challenge! 🚨 We're excited to announce the NeurIPS competition "Erasing the Invisible: A Stress-Test Challenge for Image Watermarks" running from September 16 to November 5. This is your chance to test your skills in a cutting-edge domain and win a share of our $6000…

Wrote about extrinsic hallucinations during the July 4th break. lilianweng.github.io/posts/2024-07-… Here is what ChatGPT suggested as a fun tweet for the blog: 🚀 Dive into the wild world of AI hallucinations! 🤖 Discover how LLMs can conjure up some seriously creative (and sometimes…

📢📢📢 When will machine unlearning methods fail to remove unwanted data/concept influences in models? Our #ECCV2024 papers investigate this by exploring "challenging scenarios". One study identifies the worst-case forget sets from a data selection perspective [1], while the…

Honored to be selected in the Program! I’ll be presenting my study on Machine Unlearning for LLMs and diffusion models at NVIDIA’s HQ in Santa Clara 🎉 MU is a rapidly evolving field in trustworthy ML. Interested in this area or have ideas? DM me for collaboration opportunities!

Congratulations to the 2024 @MLCommons rising star cohort. Take a look at these impressive future leaders in Systems and ML research. mlcommons.org/2024/06/2024-m… #machinelearning #risingstars #systems #data

[1] “Finish the paper, release the code and data, and then tweet about this paper” —Can language model handle this kind of sequential instructions well? Check our paper: arxiv.org/pdf/2403.07794 Data and model: seqit.github.io Code: github.com/hanxuhu/SeqIns

LLMs can memorize training data, causing copyright/privacy risks. Goldfish loss is a nifty trick for training an LLM without memorizing training data. I can train a 7B model on the opening of Harry Potter for 100 gradient steps in a row, and the model still doesn't memorize.