TUM Computer Vision Group

@tumcvg

Computer Vision Group of Prof. Daniel Cremers at @TU_Muenchen. (Account managed by students)

We are thrilled that our group has twelve papers accepted at #CVPR2025! 🚀 Congratulations to all of our students for this great achievement! 🎉 For more details, check out: cvg.cit.tum.de

🦖 We present “Feed-Forward SceneDINO for Unsupervised Semantic Scene Completion”. #ICCV2025 🌍: visinf.github.io/scenedino/ 📃: arxiv.org/abs/2507.06230 💻: github.com/tum-vision/sce… 🤗: huggingface.co/spaces/jev-ale… w/ A. Jevtić @felixwimbauer @olvr_hhn C. Rupprecht @stefanroth D. Cremers

Many congratulations to @jianyuan_wang, @MinghaoChen23, @n_karaev, Andrea Vedaldi, Christian Rupprecht and @davnov134 for winning the Best Paper Award @CVPR for "VGGT: Visual Geometry Grounded Transformer" 🥇🎉 🙌🙌 #CVPR2025!!!!!!

Let's make noise nonisotropic and inject connectivity prior into diffusion noise! Happening now, poster #158 @tumcvg #CVPR2025

Excited to be attending CVPR 2025 this week in Nashville! I’ll be presenting our recent work: “4Deform: Neural Surface Deformation for Robust Shape Interpolation” 📍 Poster session: [13th June 4pm-6pm poster #111] #CVPR2025 #computervision

Looking forward to presenting our paper "Finsler multi-dimensional scaling" at #CVPR2025 on Sunday 10:30am, Poster 462! We investigate a largely uncharted research direction in computer vision, aka Finsler manifolds...

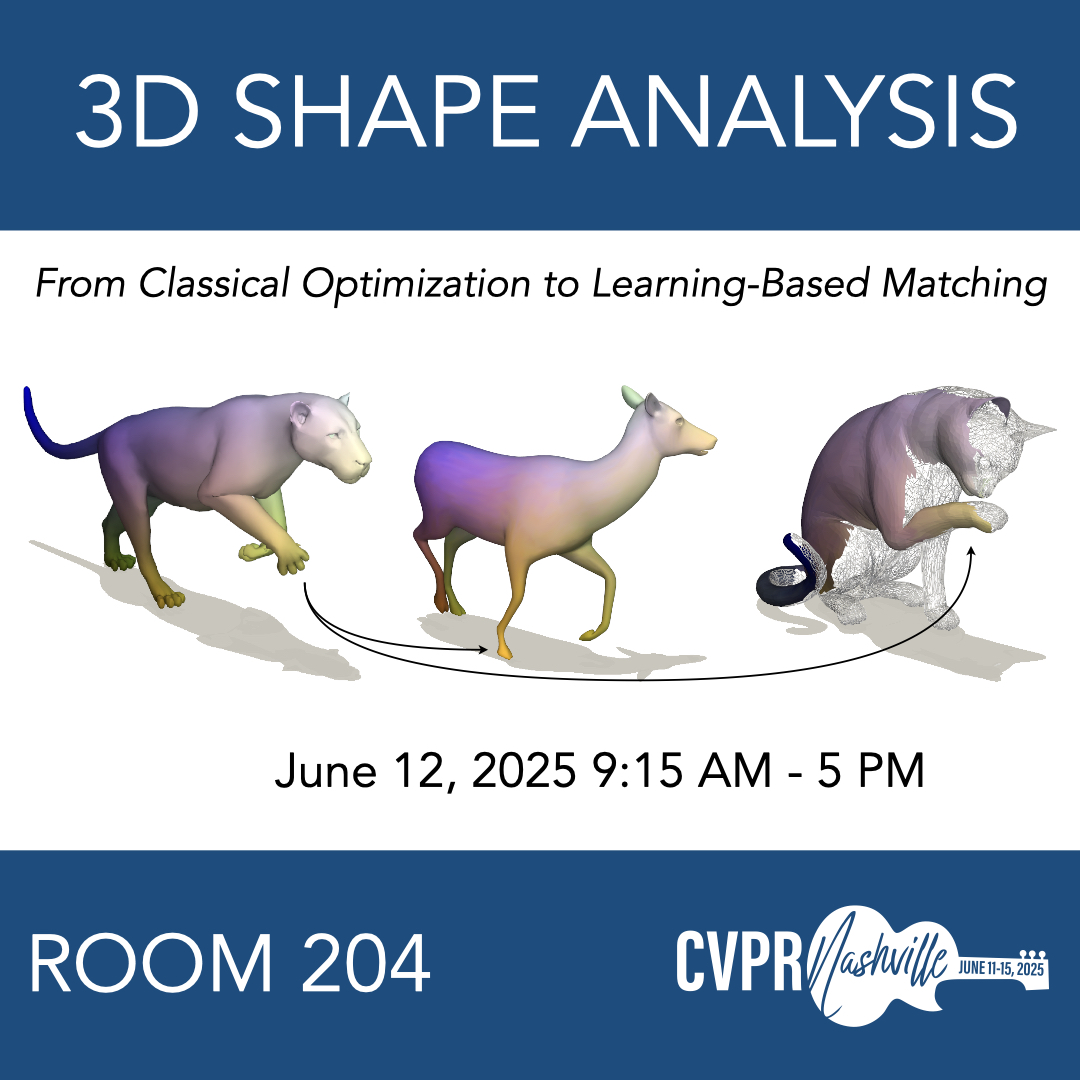

Join us for a full-day 3D Shape Analysis tutorial at @CVPR in Nashville, on Thursday, June 12, from 9:15 AM to 5:00 PM in Room 204! 👉 More details and the full schedule are available at: shape-analysis-tutorial.github.io/cvpr-25/

Can we match vision and language representations without any supervision or paired data? Surprisingly, yes! Our #CVPR2025 paper with @neekans and Daniel Cremers shows that the pairwise distances in both modalities are often enough to find correspondences. ⬇️1/4

#ICCV2025 AC thought: Part of being a responsible reviewer is engaging throughout the review process, not simply submitting your initial review. If you haven’t already, join your paper discussions.

I'm beyond excited to share our latest work ✨️AlphaEvolve✨️, a Gemini-powered coding agent that designs advanced algorithms, tackles open mathematical challenges, and optimizes Google infrastructure (data centers, TPUs, AI training). Learn how 🚀: deepmind.google/discover/blog/…

Introducing AlphaEvolve: a Gemini-powered coding agent for algorithm discovery. It’s able to: 🔘 Design faster matrix multiplication algorithms 🔘 Find new solutions to open math problems 🔘 Make data centers, chip design and AI training more efficient across @Google. 🧵

Can you train a model for pose estimation directly on casual videos without supervision? Turns out you can! In our #CVPR2025 paper AnyCam, we directly train on YouTube videos and achieve SOTA results by using an uncertainty-based flow loss and monocular priors! ⬇️

Check out how AnyCam is using Rerun viz on their project page 🔥🔥🔥

Check out our recent #CVPR2025 paper AnyCam, a method for pose estimation in casual videos! 1️⃣ Can be directly trained on casual videos without the need for 3D annotation. 2️⃣ Based around a feed-forward transformer and light-weight refinement. ♦️ fwmb.github.io/anycam/

Introducing LoftUp! A stronger (than ever) and lightweight feature upsampler for vision encoders that can boost performance on dense prediction tasks by 20%–100%! Easy to plug into models like DINOv2, CLIP, SigLIP — simple design, big gains. Try it out! github.com/andrehuang/lof…

Back on Track: Bundle Adjustment for Dynamic Scene Reconstruction Weirong Chen, Ganlin Zhang, @felixwimbauer, Rui Wang, @neekans, Andrea Vedaldi, Daniel Cremers tl;dr even for non-rigid SfM you can do BA on static parts -> improves everything. arxiv.org/abs/2504.14516

🤗I’m excited to share our recent work: TwoSquared: 4D Reconstruction from 2D Image Pairs. 🔥 Our method produces geometry-consistent, texture-consistent, and physically plausible 4D reconstructions 📰 Check our project page sangluisme.github.io/TwoSquared/ ❤️ @zehranaz98 @_R_Marin_

I’m excited to share our work today at Hall 3 Singapore EXPO 3:00pm #ICLR2025 See you there.😄😄

🥳Thrilled to share our work, "Implicit Neural Surface Deformation with Explicit Velocity Fields", accepted at #ICLR2025 👏 code is available at: github.com/Sangluisme/Imp… 😊Huge thanks to my amazing co-authors. Special thanks to @_R_Marin_ @zehranaz98 @tumcvg @TU_Muenchen

Check out our latest #CVPR2025 work AnyCam! Instead of relying on videos with 3D annotations, AnyCam learns pose estimation directly from unlabeled dynamic videos. This is an interesting alternative to methods like Monst3r, which rely on expensive pose labels during training!

📣 #CVPR2025 (Highlight): Scene-Centric Unsupervised Panoptic Segmentation Check out our recent CVG paper on unsupervised panoptic segmentation!🚀

📢 #CVPR2025: Scene-Centric Unsupervised Panoptic Segmentation 🔥 We present CUPS, the first unsupervised panoptic segmentation method trained directly on scene-centric imagery. Using self-supervised features, depth & motion, we achieve SotA results! 🌎 visinf.github.io/cups