Travers

@travers00

// collecting things for the memory bank // building a parallel web for AIs // http://parallel.ai

very cool, future-forward website - parallel.ai (unaffiliated but already a fan)

it’s funny how companies are just mechanisms for distributed & delegated attention

my intuition is that Alzheimer’s is a disease of diseases: a syndrome of multiple cumulative degradations across sleep, metabolic health, cardio, genetics, inflammation, etc. at the core, it comes down to a single bottleneck: reduced blood flow to the brain over sustained periods…

“The presence of the original is the prerequisite to the concept of authenticity.”

Someone should build a Wayback Machine for models. Progress moves fast, but depreciation moves faster. Eval charts tell you one thing, but being able to dial up GPT-2 or BERT and run them side by side with today’s SOTA would be a visceral way to feel the rate of scaling.

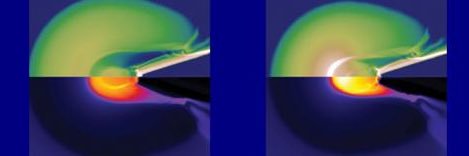

the next capability leap in reasoning for models will be an extension of Hofstadter’s parallel terraced scan: an energy-based model dynamically allocating compute according to the complexity of the problem, through parallelism and terraces (different levels of abstraction)
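A toy sketch of the terraced idea, assuming a simplified reading of Hofstadter's scan: many candidates get a cheap, shallow test; fewer get progressively more expensive tests; and which candidates advance is sampled in proportion to their current promise rather than chosen greedily. The function `parallel_terraced_scan` and its `terraces` argument (a list of `(score_fn, budget)` pairs, cheapest first, each budget at least 1) are hypothetical names for illustration. It leaves the energy-based part aside and runs sequentially; "parallel" here only means many pathways are kept alive at once.

```python
import random
from typing import Callable, Hashable, Sequence


def parallel_terraced_scan(
    candidates: Sequence[Hashable],
    terraces: Sequence[tuple[Callable[[Hashable], float], int]],
    seed: int = 0,
) -> Hashable:
    """Hypothetical toy: stochastic, promise-weighted narrowing over terraces."""
    rng = random.Random(seed)
    # Before any probing, every pathway is equally promising.
    promise = {c: 1.0 for c in candidates}
    for score, budget in terraces:
        pool = list(promise)
        k = min(budget, len(pool))
        # Weighted sampling without replacement (Efraimidis-Spirakis):
        # draw key = u ** (1 / weight) per candidate and keep the k largest,
        # so promising candidates usually advance but weak ones sometimes do too.
        keyed = sorted(
            pool,
            key=lambda c: rng.random() ** (1.0 / max(promise[c], 1e-9)),
            reverse=True,
        )
        # Only the sampled candidates pay for this terrace's (costlier) test.
        promise = {c: score(c) for c in keyed[:k]}
    return max(promise, key=promise.get)
```

For example, with a coarse test that only looks at the tens digit followed by an exact test, `parallel_terraced_scan(range(100), [(cheap, 30), (exact, 5)])` spends the exact test on just five numbers, drawn mostly from the region the coarse test favored. The stochastic draw is the design choice that separates this from a plain beam search: weak-looking pathways keep a small chance of being explored.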

The best institutions allocate resources in a parallel terraced scan < explore // exploit >

somebody should build an AMC (advance market commitment) for AGI // large-scale computer clusters

the growth of knowledge (across society and over generations) can be seen as a kind of collective cognitive compression: emergent computation gradually refining and homing in on more efficient representations of the world through time. the essence of human learning and progress

“Financial institutions tend to die of heart attack or cancer. Heart attack is a funding mismatch: borrow short, lend long. Cancer is the slow accumulation of poor quality assets which over time undermine the system.”

minimize free energy // action as inference // perception as inference // perception and action cooperate to realize a single objective: minimize the discrepancy between the model and the world // select models to minimize surprise // entropy // uncertainty // free energy, all the way down
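For reference, the standard variational free energy from the free-energy-principle literature, with assumed notation: $o$ for observations, $s$ for hidden states, $q(s)$ for the model's approximate posterior. Because the KL term is never negative, free energy is an upper bound on surprise $-\ln p(o)$. Perception can be read as lowering $F$ by changing $q$; action as lowering it by changing which $o$ arrives.

```latex
\begin{aligned}
F[q] &= \mathbb{E}_{q(s)}\big[\ln q(s) - \ln p(o, s)\big] \\
     &= D_{\mathrm{KL}}\big[q(s)\,\|\,p(s \mid o)\big] - \ln p(o) \\
     &\ge -\ln p(o)
\end{aligned}
```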

the great paradox of AI is that many capability advancements have been about improving lossless compression, yet it is through this compression that new intelligence may emerge // an intelligence explosion emerges from lossless compression of intelligence //
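One standard way to see why better prediction and better lossless compression are the same thing: an ideal entropy coder (arithmetic coding gets close) spends about $-\log_2 p(x)$ bits on each symbol, so a model that assigns higher probability to what actually comes next produces a shorter lossless encoding. A minimal sketch with two character-level models; the helper name `ideal_code_length_bits` is hypothetical, and the comparison ignores the cost of describing the model itself.

```python
import math
from collections import Counter


def ideal_code_length_bits(text: str, probs: dict[str, float]) -> float:
    """Total bits an ideal entropy coder would need to encode `text`
    losslessly under a per-character probability model `probs`."""
    return sum(-math.log2(probs[ch]) for ch in text)


text = "abracadabra"
alphabet = sorted(set(text))

# Model 1: knows nothing beyond the alphabet, so every symbol is equally likely.
uniform = {ch: 1 / len(alphabet) for ch in alphabet}

# Model 2: predicts with symbol frequencies (fitted on this same text).
counts = Counter(text)
unigram = {ch: n / len(text) for ch, n in counts.items()}

uniform_bits = ideal_code_length_bits(text, uniform)
unigram_bits = ideal_code_length_bits(text, unigram)
print(f"uniform: {uniform_bits:.2f} bits, unigram: {unigram_bits:.2f} bits")
```

The uniform model costs $11 \log_2 5 \approx 25.5$ bits for the 11 characters; the frequency model needs fewer because it predicts the frequent `a` better. Swapping in a stronger predictor (say, a neural language model) shrinks the count further, which is the sense in which capability gains can be framed as compression gains.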

What are words if not representations, expected to conjure up feelings, people, objects? Slippery compressions of meaning. “Because we want language to mean something, it means everything.”