James | 🤖

@theegocentrist

Co-founder @istarirobotics | 📐 at @nethermindEth

I really like this. I have a strong conviction that the industry is moving in this direction: truly safe and capable robots will have to learn policies from thousands to millions of diverse human demonstrations. Great work @ShivaamVats

How can 🤖 learn from human workers to provably reduce their workload in factories? Our latest @RoboticsSciSys paper answers this question by proposing the first cost-optimal interactive learning (COIL) algorithm for multi-task collaboration.

The state of affairs for deployable robotics today is significantly worse than those in the AI community who don't work on Physical AI believe it to be.

My bar for AGI is far simpler: an AI cooking a nice dinner at anyone’s house for any cuisine. The Physical Turing Test is very likely harder than the Nobel Prize. Moravec’s paradox will continue to haunt us, looming larger and darker, for the decade to come.

Looking fwd to presenting this talk @Google next Thurs at noon. It will be live in person in Mountain View CA (not online) but is free and open to the public: How to Close the 100,000 Year “Data Gap” in Robotics rsvp.withgoogle.com/events/how-to-…

Really love these demos. Agility is making some really strong progress on RL.

Testing reinforcement-learned whole-body control, ongoing work by my team at Agility. Humanoid robots need to be able to operate in many different environments and on different terrains, and to be robust to all kinds of disturbances, while also performing manipulation tasks.

A glimpse of a very exciting future ahead of us

WPP trialled Boston Dynamics' Atlas as a camera operator in LED volume production 3 months ago. Key capabilities:
- Precise repeatability of complex camera moves
- Sideways walking motions are impossible for humans
- Eliminates setup time vs traditional robotic rigs
- Operates…

everything that can be done on a computer will be done by a computer. they are not operating excavators; they are training a model for autonomous excavators.

🇨🇳 A team of construction workers in China operating excavators remotely. Grueling blue-collar work is now a cushy air-conditioned office job.

Fingers crossed this is true, looking forward to seeing what you built @BerntBornich

Reality is the ultimate eval. The threshold where our robots learn more by themselves than from expert demonstrations (teleop) was just passed in silence, but I suspect that in hindsight it will be huge.

Starmer can't fix Britain - but can anyone? Me for @thetimes (1/2)

Added support for universal rigging with SMPL-X, as well as support for user-configurable toolchains and models from Hugging Face.

The best way for egohub to grow is with community support. We've made it easy to add new dataset adapters. If you work with egocentric data like EPIC-KITCHENS, Assembly101, or Ego4D, we'd love your help expanding our support. Check out our guide: github.com/IstariRobotics…

I despair for the UK. No country has better fundamentals to profit from the current global mess, so why is Oxbridge not a rival to Silicon Valley? Every one of its problems—from Heathrow to housing, from small boats to the deficit, from the NHS to the explosion in welfare—is…

✨ Massive Pipeline Refactor → One Framework for Ego + Exo Datasets, Visualized with @rerundotio 🚀 After a deep refactoring and cleanup, my entire egocentric/exocentric pipeline is now fully modular. One codebase handles different sensor layouts and generates a unified,…

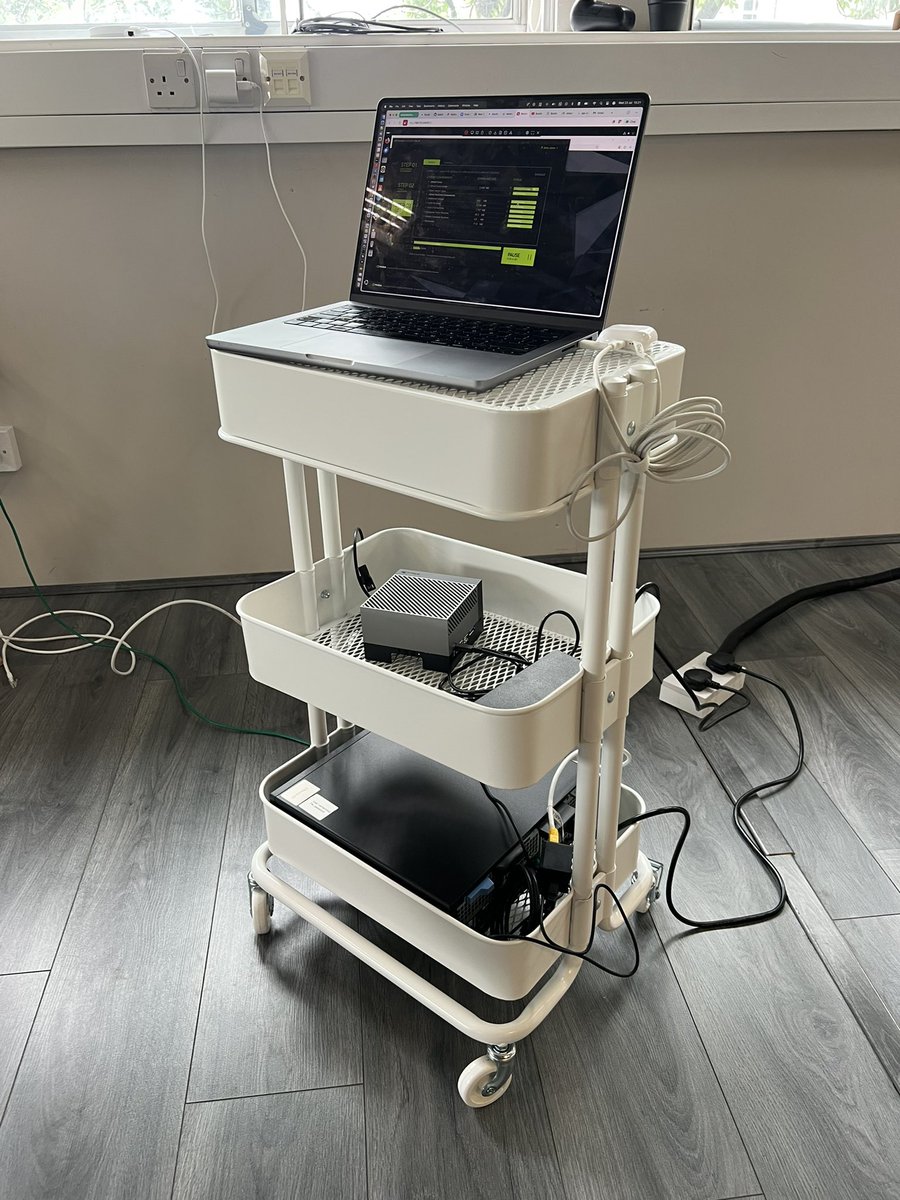

Managed to hack things together🔥. All views are visualized on @rerundotio. I need a little more time to cook before getting this fully out there. Current fixes I'm working on: 1. It only works on my beefy 5090 machine; there are too many streams to visualize elsewhere (may need to…

Excited to release Gemini Robotics On-Device and a bunch of goodies today:
🍬 on-device VLA that you can run on a GPU
🍬 open-source MuJoCo sim (& benchmark) for bimanual dexterity
🍬 broadening access to these models to academics and developers
deepmind.google/discover/blog/…

This is a much-needed step forward. Unlike in the world of LLMs, benchmarks are rarely shared between robotics companies.

In robotics benchmarks are rarely shared. New eval setups are created for each new project, a stark difference from evals in broader ML. But generalist policies share a problem statement: do any task in any environment. Can generalist capabilities make robot evaluation easier?