Tahereh Toosi

@taherehtoosi

Associate Research Scientist at Center for Theoretical Neuroscience @cu_neurotheory @ZuckermanBrain @Columbia K99-R00 scholar @NIH https://toosi.github.io

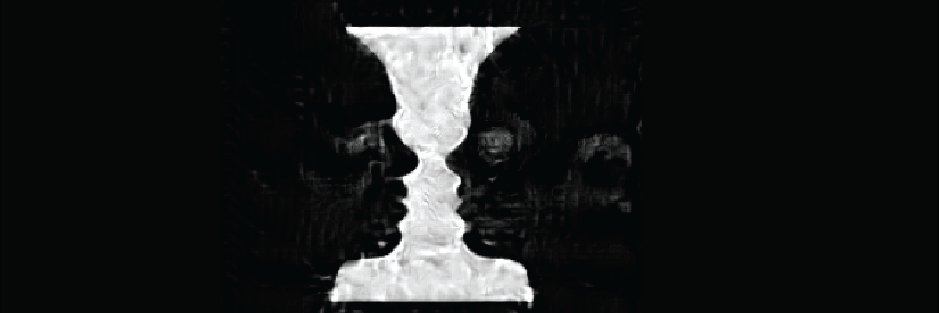

There are plenty of feedback connections in visual cortex, and they contribute to so many perceptual experiences (e.g., imagination, de-occlusion, hallucinations), but HOW? In a #NeurIPS2023 paper, we show that alignment of the feedback and feedforward pathways is the key! arxiv.org/abs/2310.20599

Here's a third application of our new world modeling technology - to object grouping. In a sense this completes the video scene understanding trifecta of 3D shape, motion, and now object individualization. From a technical perspective, the core innovation is the idea of…

AI models segment scenes based on how things appear, but babies segment based on what moves together. We use a visual world model that our lab has been developing to capture this concept, and what's cool is that it beats SOTA models on zero-shot segmentation and physical…

Over the past 18 months my lab has been developing a new approach to visual world modeling. There will be a magnum opus that ties it all together out in the next couple of weeks. But for now there are some individual application papers that have poked out.

📷 New Preprint: SOTA optical flow extraction from pre-trained generative video models! While it seems intuitive that video models grasp optical flow, extracting that understanding has proven surprisingly elusive.

New video on the details of diffusion models: youtu.be/iv-5mZ_9CPY Produced by @welchlabs, this is the first in a small series of 3b1b this summer. I enjoyed providing editorial feedback throughout the last several months, and couldn't be happier with the result.

Update on a new interpretable decomposition method for LLMs -- sparse mixtures of linear transforms (MOLT). Preliminary evidence suggests they may be more efficient, mechanistically faithful, and compositional than existing techniques like transcoders transformer-circuits.pub/2025/bulk-upda…

Excited that our work is out together with the amazing @CoenCagli_Lab and Adam Kohn! We demonstrate how neural co-variability in the visual cortex encodes uncertainty about natural scenes and is adaptively modulated by spatial context! (1/7) Paper link: bit.ly/3IXiU9M

We're launching an "AI psychiatry" team as part of interpretability efforts at Anthropic! We'll be researching phenomena like model personas, motivations, and situational awareness, and how they lead to spooky/unhinged behaviors. We're hiring - join us! job-boards.greenhouse.io/anthropic/jobs…

Cool! Looks like the model could use some regularization.

Can an AI model predict perfectly and still have a terrible world model? What would that even mean? Our new ICML paper formalizes these questions. One result tells the story: a transformer trained on 10M solar systems nails planetary orbits, but it botches gravitational laws 🧵

Do you study neural systems with feedback at different temporal-, spatial-, hierarchical-, data-, or computational- scales? Have you submitted your abstract to the "Neurocybernetics at Scale" symposium? Due to multiple requests, new deadline is 18 July! symposium.fchampalimaud.science

Announcing the new "Sensorimotor AI" Journal Club — please share/repost! w/ Kaylene Stocking, @TommSalvatori, and @EliSennesh Details + sign up link in the first reply 👇

🎈 Out now: 🎈 "The capacity limits of moving objects in the imagination" (by Balaban & me) of interest to people thinking about the imagination, intuitive physics, mental simulation, capacity limits, and more nature.com/articles/s4146…

A great @QuantaMagazine article on our theory of creativity in convolutional diffusion models, led by @MasonKamb. See also our paper with new results in version 2: arxiv.org/abs/2412.20292, to be presented as an oral at @icmlconf #icml25. Thx @_webbwright!

In a recent paper, physicists used two predictable factors to reproduce the “creativity” seen from image-generating AI. @_webbwright reports: quantamagazine.org/researchers-un…

#eNeuro: @obeid_dina and Miller identify distinct neural computations in the primary visual cortex that explain how surrounding context suppresses perception of visual figures and features. @cu_neurotheory @hseas vist.ly/3n6tfaz

Isolating single cycles of neural oscillations in population spiking doi.org/10.1371/journa… #neuroscience

Very excited to share our latest project on understanding representational convergence in ANNs! With @sudh8887 and @meenakshik93! (paper link: arxiv.org/abs/2502.18710) (1/n)

Is low-to-high frequency generation in diffusion models (a.k.a. 'approximate spectral autoregression') a necessity for generation performance? 📃 Blog: fabianfalck.com/posts/spectral… 📜 Paper: arxiv.org/abs/2505.11278

I’m stoked to share our new paper: “Harnessing the Universal Geometry of Embeddings” with @jxmnop, Collin Zhang, and @shmatikov. We present the first method to translate text embeddings across different spaces without any paired data or encoders. Here's why we're excited: 🧵👇🏾

Check out our new paper! Vision models often struggle with learning both transformation-invariant and -equivariant representations at the same time. @hafezghm shows that self-supervised prediction with proper inductive biases achieves both simultaneously.

🚨 Preprint Alert 🚀 📄 seq-JEPA: Autoregressive Predictive Learning of Invariant-Equivariant World Models arxiv.org/abs/2505.03176 Can we simultaneously learn both transformation-invariant and transformation-equivariant representations with self-supervised learning (SSL)?…

Still looking to fill this position. Do any of my moots have any suggestions for someone nice & smart who can combine opto, ephys, & signal processing?

we are recruiting! looking for a postdoc with great electrophys and data analysis skills for a project in collaboration with @drstephjones and @dr_alexharris to study the role of beta frequency shifts in decision-making.

The Anthropic Interpretability Team is planning a virtual Q&A to answer Qs about how we plan to make models safer, the role of the team at Anthropic, where we’re headed, and what it’s like to work here! Please let us know if you’d be interested forms.gle/VeZZVz1NFsArzS…

1/ A 64 yo M presented with difficulty seeing. He has changed his glasses prescription twice, with no help. He had 20/20 vision & normal fundoscopy. When asked to describe the picture, he replies, "there is a pear and cherries, and multiple fronds of wheat." A #continuumcase