surya

@suryaasub

working on triton and pytorch distributed @aiatmeta, ml systems @georgiatech

perhaps a great eval opportunity here!

PyTorch is sooo hard to use LLMs on 😂

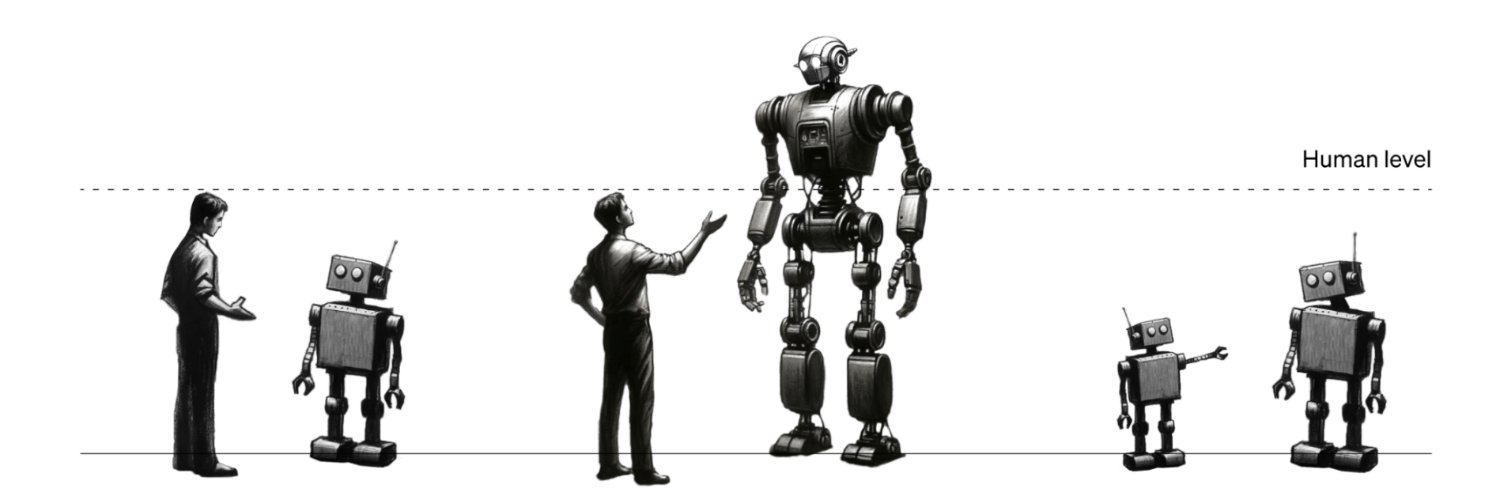

Engineers spend 70% of their time understanding code, not writing it. That’s why we built Asimov at @reflection_ai. The best-in-class code research agent, built for teams and organizations.

holy shit. cognition to the rescue. happy the early windsurf engs finally got rewarded for their hard work!

Cognition has signed a definitive agreement to acquire Windsurf. The acquisition includes Windsurf’s IP, product, trademark and brand, and strong business. Above all, it includes Windsurf’s world-class people, whom we’re privileged to welcome to our team. We are also honoring…

I really like Phil Tillet's framing of different tools having different tradeoffs in productivity and performance: torch compile, triton, CUDA, PTX. It's still early but CuTe-DSL and similar Python-based DSLs might bend this curve. And soon we can probably get LLMs to generate…

everybody wants to do fun experiments nobody wants to write core infrastructure code

o3 for finding a security vulnerability in the Linux kernel: sean.heelan.io/2025/05/22/how…

my exhaustive list of productivity enhancing habits i have stuck to for more than a year:
- sleep consistently and 8h
- eat less fast carbs like bread or sugar
- meditation
- weight lifting + running
- no phone first hour after waking up

a couple times every year i feel super productive, and attribute it to some random thing i changed in my habits like:
- oh i ate this yesterday
- i did this thing x before sleep
- i took this random vitamin

extremely few things actually stick around

when the run name ends like this you know it's surely going to work this time
-restart-0331-final-final2-restart-forreal-omfg3

I was at the office today and spoke to an OpenAI researcher who said he never studied CS formally, learned everything on ChatGPT making his own learning plan with relentless focus. If you want to be more technical there is no longer an excuse!

I end almost every night wishing I had more time in the day to work.

why not 100x?

This may be the most inspiring sentence I've ever read. Which is interesting because it's not phrased in the way things meant to be inspiring usually are.

absolutely - i think it comes down to a small team having full visibility of the entire stack and thinking about everything from first principles (incredible how powerful this is!) plus being compute-constrained forces them to get creative for training/inference (e.g. mla)

I completely believe DeepSeek is making such good progress because the whole team is so close to hardware. Many many other big labs are so far removed from hardware and that changes many aspects of ability and velocity. It's more painful, but pain is good for research.

deepseek is rewriting history rn!!

🚀 DeepSeek-R1 is here! ⚡ Performance on par with OpenAI-o1 📖 Fully open-source model & technical report 🏆 MIT licensed: Distill & commercialize freely! 🌐 Website & API are live now! Try DeepThink at chat.deepseek.com today! 🐋 1/n

beyond just grabbing top-k: softmax probabilities have some p cool signals ab what your model actually thinks
seen this from MoE routing to transformer logit probs - leveraging these confidence scores is a promising direction
exciting to see more research in this space lately!
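
A rough sketch of what "beyond top-k" could look like in practice, assuming you already have a vector of raw logits (router logits in an MoE layer, or next-token logits). The function names and the choice of signals (entropy, top-1 vs top-2 margin, top-k probability mass) are illustrative assumptions, not taken from any particular paper or library:

```python
import math

def softmax(logits):
    # Subtract the max before exponentiating for numerical stability.
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def confidence_signals(logits, k=2):
    """Hypothetical helper: top-k indices plus a few confidence scores.

    Assumes len(logits) >= 2 so a top-1 vs top-2 margin exists.
    """
    probs = softmax(logits)
    # Indices sorted by probability, highest first.
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    top_k = ranked[:k]
    # Shannon entropy in nats: low means peaked, high means spread out.
    entropy = -sum(p * math.log(p) for p in probs if p > 0)
    # Gap between the best and second-best option.
    margin = probs[ranked[0]] - probs[ranked[1]]
    return {
        "top_k": top_k,
        "top_k_mass": sum(probs[i] for i in top_k),
        "entropy": entropy,
        "margin": margin,
    }

# Both inputs pick index 0 first, but the scores tell very different stories.
confident = confidence_signals([8.0, 1.0, 0.5, 0.2])
uncertain = confidence_signals([1.1, 1.0, 0.9, 1.0])
print(confident)
print(uncertain)
```

Plain top-k would treat these two cases the same at the top position; the entropy and margin are what separate "the model is sure" from "the model is basically guessing".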