Seohong Park

@seohong_park

Reinforcement learning | CS Ph.D. student @berkeley_ai

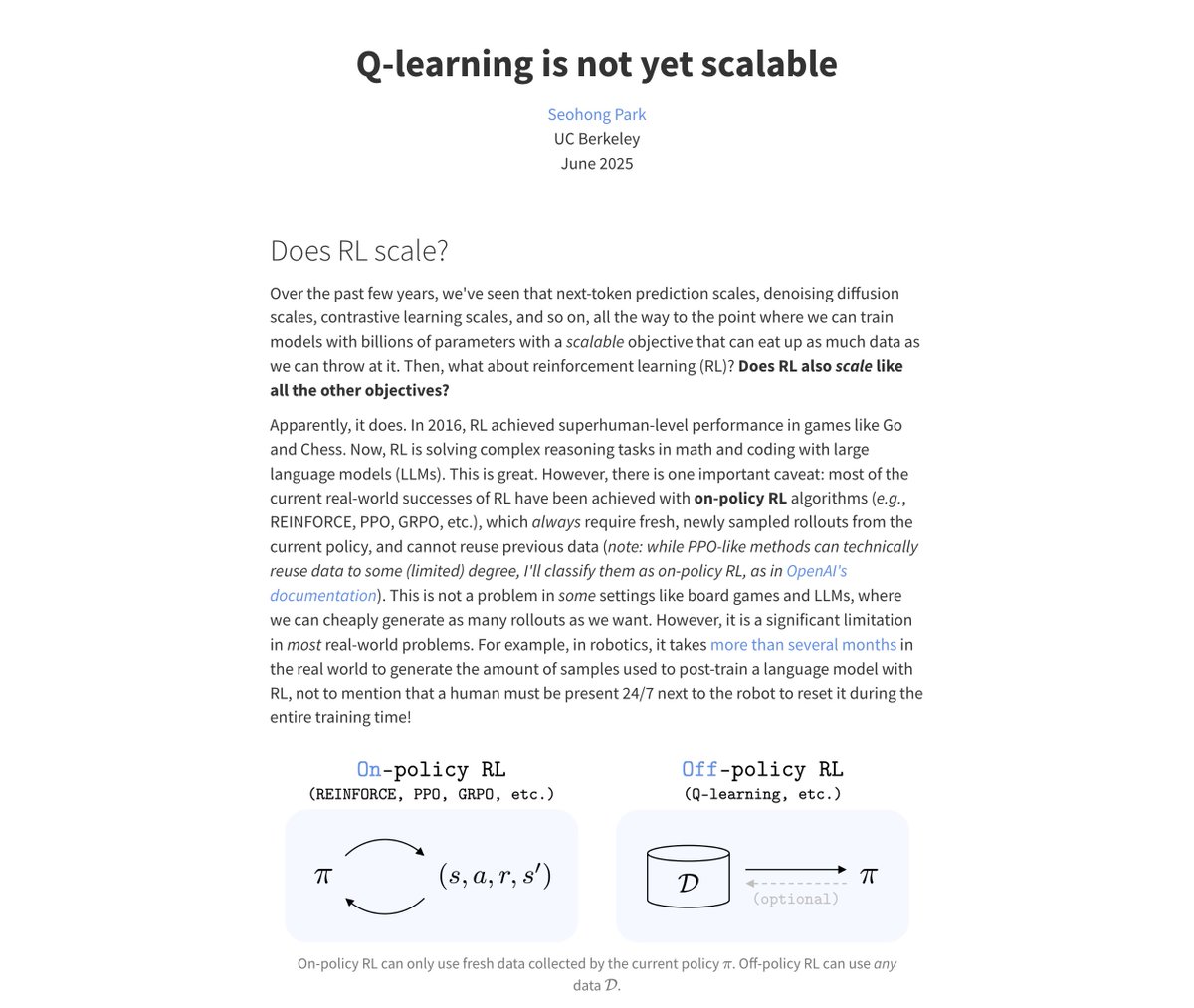

Q-learning is not yet scalable seohong.me/blog/q-learnin… I wrote a blog post about my thoughts on scalable RL algorithms. To be clear, I'm still highly optimistic about off-policy RL and Q-learning! I just think we haven't found the right solution yet (the post discusses why).

I wrote a fun little article about all the ways to dodge the need for real-world robot data. I think it has a cute title. sergeylevine.substack.com/p/sporks-of-agi

How can we train a foundation model to internalize what it means to “explore”? Come check out our work on “behavioral exploration” at ICML25 to find out!

Everyone knows action chunking is great for imitation learning. It turns out that we can extend its success to RL to better leverage prior data for improved exploration and online sample efficiency! colinqiyangli.github.io/qc/ The recipe to achieve this is incredibly simple. 🧵 1/N

Action chunking is a great idea in robotics: by getting a model to produce a short sequence of actions, it _just works better_ for some mysterious reason. Now it turns out this can help in RL too, and it's a bit clearer why: action chunks help explore and help with backups. 🧵👇

Just like tokenization is a necessary evil in LLMs (at least for now), time discretization is a necessary evil in robotics/RL. I think there must be a better way to handle continuous time than via naive discretization...

I really liked this paper and the (new) blog posts. From the paper, I can also tell the authors put their maximum efforts into it. Very well written and has beautiful figures!

Tokenization has been the final barrier to truly end-to-end language models. We developed the H-Net: a hierarchical network that replaces tokenization with a dynamic chunking process directly inside the model, automatically discovering and operating over meaningful units of data

I'll be at ICML next week from 7/14 to 7/18! Feel free to shoot me a DM/email, happy to discuss anything about RL (RL scaling, diffusion/flow policies, offline/offline-to-online RL, etc.)

LLM RL code does not need to be complicated! Here is a minimal implementation of GRPO/PPO on Qwen3, from-scratch in JAX in around 400 core lines of code. The repo is designed to be hackable and prioritize ease-of-understanding for research: github.com/kvfrans/lmpo

I really enjoyed reading this blog post. Easily the best one I've read this year!

I converted one of my favorite talks I've given over the past year into a blog post. "On the Tradeoffs of SSMs and Transformers" (or: tokens are bullshit) In a few days, we'll release what I believe is the next major advance for architectures.

I converted one of my favorite talks I've given over the past year into a blog post. "On the Tradeoffs of SSMs and Transformers" (or: tokens are bullshit) In a few days, we'll release what I believe is the next major advance for architectures.

Russ's recent talk at Stanford has to be my favorite in the past couple of years. I have asked everyone in my lab to watch it. youtube.com/watch?v=TN1M6v… IMO our community has accrued a huge amount of "research debt" (analogous to "technical debt") through flashy demos and…

As AI agents face increasingly long and complex tasks, decomposing them into subtasks becomes increasingly appealing. But how do we discover such temporal structure? Hierarchical RL provides a natural formalism-yet many questions remain open. Here's our overview of the field🧵

Diffusion policies have demonstrated impressive performance in robot control, yet are difficult to improve online when 0-shot performance isn’t enough. To address this challenge, we introduce DSRL: Diffusion Steering via Reinforcement Learning. (1/n) diffusion-steering.github.io

In robotics benchmarks are rarely shared. New eval setups are created for each new project, a stark difference from evals in broader ML. But generalist policies share a problem statement: do any task in any environment. Can generalist capabilities make robot evaluation easier?

such a nice & clear articulation of the big question by @seohong_park ! also thanks for mentioning Quasimetric RL. now I just need to show people this post instead of explaining why I am excited by QRL :)

Q-learning is not yet scalable seohong.me/blog/q-learnin… I wrote a blog post about my thoughts on scalable RL algorithms. To be clear, I'm still highly optimistic about off-policy RL and Q-learning! I just think we haven't found the right solution yet (the post discusses why).