Danfei Xu

@danfei_xu

Faculty at Georgia Tech @ICatGT, researcher at @NVIDIAAI | Ph.D. @StanfordAILab | Making robots smarter | all opinions are my own

I gave an Early Career Keynote at CoRL 2024 on Robot Learning from Embodied Human Data. Recording: youtube.com/watch?v=H-a748… Slides: faculty.cc.gatech.edu/~danfei/corl24… Extended summary thread 1/N

Big news for data science in higher ed! 🚀Colab now offers 1-year Pro subscriptions free of charge for verified US students/faculty, interactive Slideshow Mode for lectures, & an AI toggle per notebook. Enhance teaching & learning in the upcoming academic year! Read all about it…

One more thing: this new system automates the process of creating LIBERO-style tasks and demos. So you can easily create your own benchmarks!

We systematically studied "what matters in constructing large-scale datasets for manipulation". Along the way, we (1) discovered some key principles in data collection and retrieval and (2) built a data synthesizer that can automatically generate large-scale demo datasets (with…

Large robot datasets are crucial for training 🤖foundation models. Yet, we lack systematic understanding of what data matters. Introducing MimicLabs ✅System to generate large synthetic robot 🦾 datasets ✅Data-composition study 🗄️ on how to collect and use large datasets 🧵1/

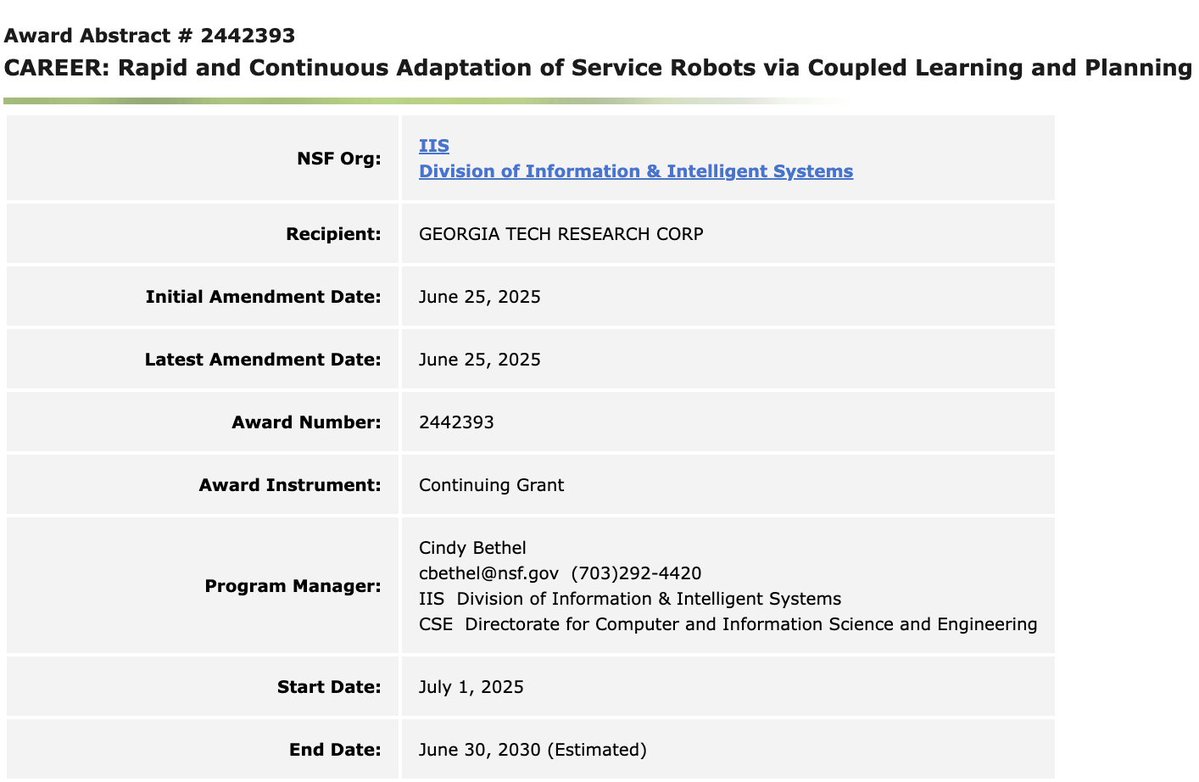

Honored to receive the NSF CAREER Award from the Foundational Research in Robotics (FRR) program! Deep gratitude to my @ICatGT @gtcomputing colleagues and the robotics community for their unwavering support. Grateful to @NSF for continuing to fund the future of robotics…

TRI's latest Large Behavior Model (LBM) paper landed on arxiv last night! Check out our project website: toyotaresearchinstitute.github.io/lbm1/ One of our main goals for this paper was to put out a very careful and thorough study on the topic to help people understand the state of the…

🎉 Super proud of my student Peng Li (co-advised with Chu Xu) for receiving the 2025 Jim Gray Doctoral Dissertation Award for his thesis "Cleaning and Learning over Dirty Tabular Data." @SIGMODConf @GeorgiaTech @gatech_scs @chu_data_lab

Happening now!

Excited to announce EgoAct🥽🤖: the 1st Workshop on Egocentric Perception & Action for Robot Learning @ #RSS2025 in LA! We’re bringing together researchers exploring how egocentric perception can drive next-gen robot learning! Full info: egoact.github.io/rss2025 @RoboticsSciSys

It’s also pretty cool that we are now bounded by hardware limits: for example, the gripper can't close fast enough to match the arm motion

We also found that SAIL is often bounded by hardware limits, such as gripper speed. SAIL could feasibly result in faster motions with more capable hardware. 9/

Despite advances in end-to-end policies, robots powered by these systems operate far below industrial speeds. What will it take to get e2e policies running at speeds that would be productive in a factory? It turns out simply speeding up NN inference isn't enough. This requires…

Tired of slow-moving robots? Want to know how learning-driven robots can move closer to industrial speeds in the real world? Introducing SAIL - a system for speeding up the execution of imitation learning policies up to 3.2x on real robots. A short thread: 1/

Russ's recent talk at Stanford has to be my favorite in the past couple of years. I have asked everyone in my lab to watch it. youtube.com/watch?v=TN1M6v… IMO our community has accrued a huge amount of "research debt" (analogous to "technical debt") through flashy demos and…

Yayyy! Really really really excited to have Sidd join us at @ICatGT !

Thrilled to share that I'll be starting as an Assistant Professor at Georgia Tech (@ICatGT / @GTrobotics / @mlatgt) in Fall 2026. My lab will tackle problems in robot learning, multimodal ML, and interaction. I'm recruiting PhD students this next cycle – please apply/reach out!

Super cool demos. Congrats @andyzeng_ and @peteflorence ! Robot learning is a full-stack domain. Even with e2e learning, need capable robots & good controllers to move fast at high precision.

Today we're excited to share a glimpse of what we're building at Generalist. As a first step towards our mission of making general-purpose robots a reality, we're pushing the frontiers of what end-to-end AI models can achieve in the real world. Here's a preview of our early…

Great design. Looking forward to it!

OpenArm is a fully open-sourced humanoid robot arm built for physical AI research and deployment in contact-rich environments. Check out what we’ve been building lately for the upcoming release. Launching v1.0 within a month 🚀 #robotics #OpenSource #physicalAI #humanoid

Join us at the RSS 2025 EgoAct workshop June 21st morning session, where the @meta_aria team will demonstrate the Aria Gen2 device and talk about its awesome features for robotics and beyond! egoact.github.io/rss2025/

Aria Gen 2 glasses mark a significant leap in wearable technology, offering enhanced features and capabilities that cater to a broader range of applications and researcher needs. We believe researchers from industry and academia can accelerate their work in machine perception,…

Been eyeing this wrist design for ~3 years. I'd really appreciate a robust, high-effective-range, fully integrated, open-source version of this design. I'd even buy them if someone can sell me wrist modules like these ... True 6 DoF wrist is prolly the most important…

The Pollen wrist solution: what an elegant design! @huggingface @LeRobotHF @GoingBallistic5
