Vaibhav Saxena

@saxenavaibhav11

ML Ph.D. at Georgia Tech @ICatGT | CS MScAC @uoftcompsci | Math @IITGuwahati

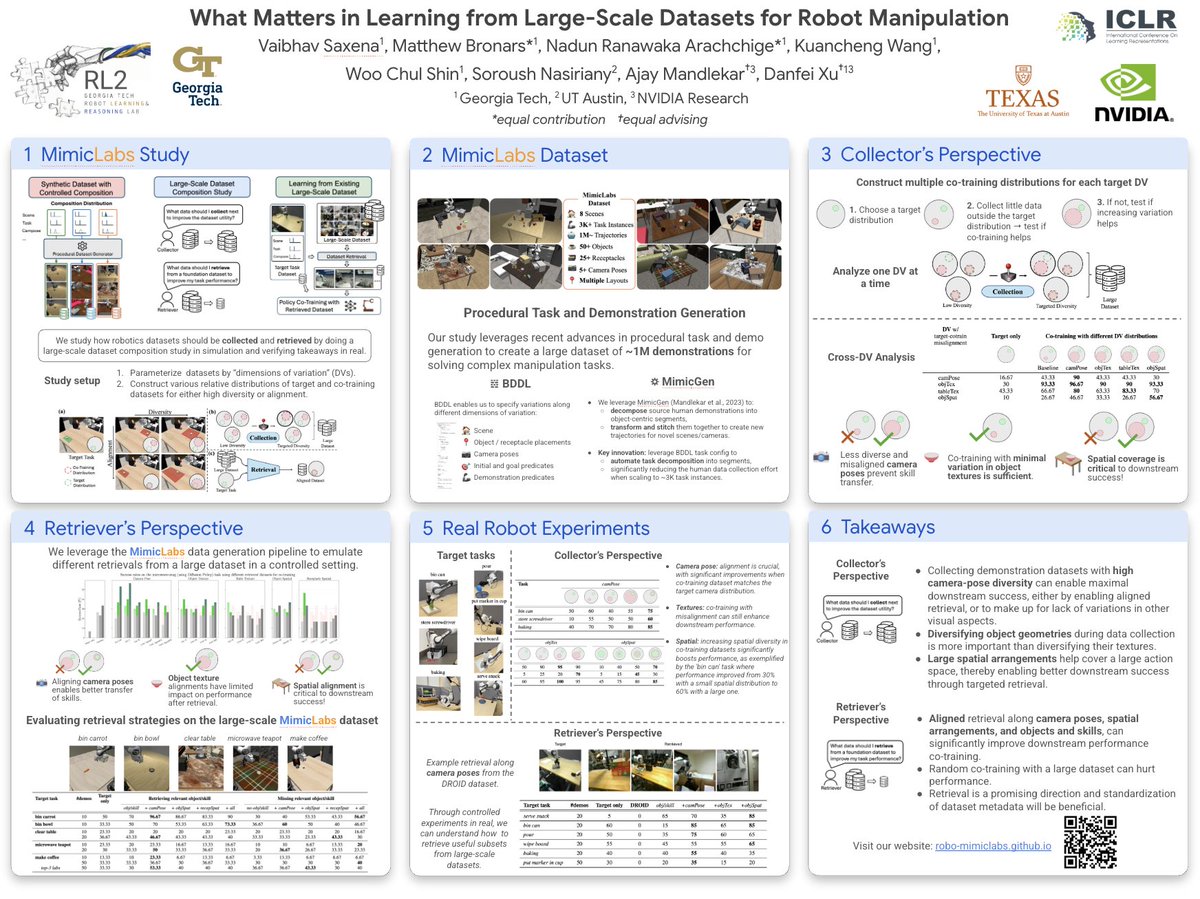

Large robot datasets are crucial for training 🤖 foundation models. Yet, we lack a systematic understanding of what data matters. Introducing MimicLabs: ✅ a system to generate large synthetic robot 🦾 datasets ✅ a data-composition study 🗄️ on how to collect and use large datasets 🧵1/

If you are at #ICLR this week, come visit us at our poster "What Matters in Learning from Large-Scale Datasets for Robot Manipulation" and let's chat about what data enables efficient training in robotics! Thu 24 Apr, 3-5:30 pm, Hall 3 + Hall 2B, #564

SfM failing on dynamic videos? 😠 RoMo to the rescue! 💪 Our simple method uses epipolar cues and semantic features for robustly estimating motion masks, boosting dynamic SfM performance 🚀 Plus, a new dataset of dynamic scenes with ground truth cameras! 🤯 #computervision 🧵👇

📢📢📢 RoMo: Robust Motion Segmentation Improves Structure from Motion romosfm.github.io arxiv.org/pdf/2411.18650 TL;DR: boost your SfM pipeline on dynamic scenes. We use epipolar cues + SAMv2 features to find robust masks for moving objects in a zero-shot manner. 🧵👇

I gave an Early Career Keynote at CoRL 2024 on Robot Learning from Embodied Human Data Recording: youtube.com/watch?v=H-a748… Slides: faculty.cc.gatech.edu/~danfei/corl24… Extended summary thread 1/N

How can robots compositionally generalize over multi-object multi-robot tasks for long-horizon planning? At #CoRL2024, we introduce Generative Factor Chaining (GFC), a diffusion-based approach that composes spatial-temporal factors into long-horizon skill plans. (1/7)

Introducing EgoMimic: just wear a pair of Project Aria @meta_aria smart glasses 👓 to scale up your imitation learning datasets! Check out what our robot can do. Thread below 👇

Introducing MimicTouch, our new paper accepted by #CoRL2024 (also the Best Paper Award at the #NIPS2024 TouchProcessing Workshop). MimicTouch learns tactile-only policies (no visual feedback) for contact-rich manipulation directly from human hand demonstrations. (1/6)

🤖 Inspiring the Next Generation of Roboticists! 🎓 Our lab had an incredible opportunity to demo our robot learning systems to local K-12 students for the National Robotics Week program @GTrobotics. A big shout-out to @saxenavaibhav11 @simar_kareer @pranay_mathur17 for hosting…

Data is the key driving force behind success in robot learning. Our upcoming RSS 2024 workshop "Data Generation for Robotics" will feature exciting speakers, timely debates, and more! Submit by May 20th. sites.google.com/view/data-gene…

Excited to share that our work was accepted for an oral presentation at #NeurIPS2023. If you are interested in diffusion models or computer vision, please drop by our talk and poster on Thursday! nips.cc/virtual/2023/o…

The Surprising Effectiveness of Diffusion Models for Optical Flow and Monocular Depth Estimation paper page: huggingface.co/papers/2306.01… Denoising diffusion probabilistic models have transformed image generation with their impressive fidelity and diversity. We show that they also…

How can we leverage large pretrained VLMs for precise and dynamic manipulation tasks in real time? Presenting the MResT 🤖 framework for real-time control using sensing at various spatial and temporal resolutions. Come say hi at @corl_conf #CoRL2023: today at LangRob and on Nov. 8 at poster 4