Peter Lin

@peter9863

Introducing Seaweed APT2, a real-time, interactive, streaming video generation model. seaweed-apt.com/2 Adversarial training for autoregressive modeling! Streaming 1 minute videos, 1 diffusion step, 24fps real-time on 1xh100, with interactive controls!

🔥Introducing #SeedVR2, the latest one-step diffusion transformer version of #SeedVR for real-world image and video restoration! details - Paper: arxiv.org/abs/2506.05301 - Project: iceclear.github.io/projects/seedv… - Code (under review): github.com/IceClear/SeedV…

字节确实变了 居然先发布了新版 Seaweed 视频模型的论文和演示 除了常规文生、图生视频外还支持: - 音视频同步生成 - 长镜头与多镜头叙事 - 高分辨率超分与实时生成 - 世界建模与相机控制 下面的论文页面有更多演示

Glad to share Seaweed-7B, a cost-effective foundation model for video generation. Our tech report highlights the key designs that significantly improve compute efficiency and performance given limited resources, achieving comparable quality against other industry-level models. To…

Excited to announce our latest research Seaweed-APT. Unlike diffusion and autoregressive models that require iterative sampling, our model generates 1280x720 24fps videos using only a single step (1NFE)! For more information, visit our website: seaweed-apt.com

AnimateDiff-Lightning Gradio demo is out! huggingface.co/spaces/ByteDan…

AnimateDiff-Lightning is incredible for video-to-video generation. Our model is released. Excited to see what the community will create with it. huggingface.co/ByteDance/Anim…

Excited to present AnimateDiff-Lightning for lightning-fast video generation. Our model is more than ten times faster than the original AnimateDiff, achieving new SOTA on few-step video generation. huggingface.co/ByteDance/Anim…

fastsdxl.ai is currently in the front page of HN and we are handing 50 new websocket connections per second, that is ~200 images per second.. and the best part is we are doing this with very few gpus

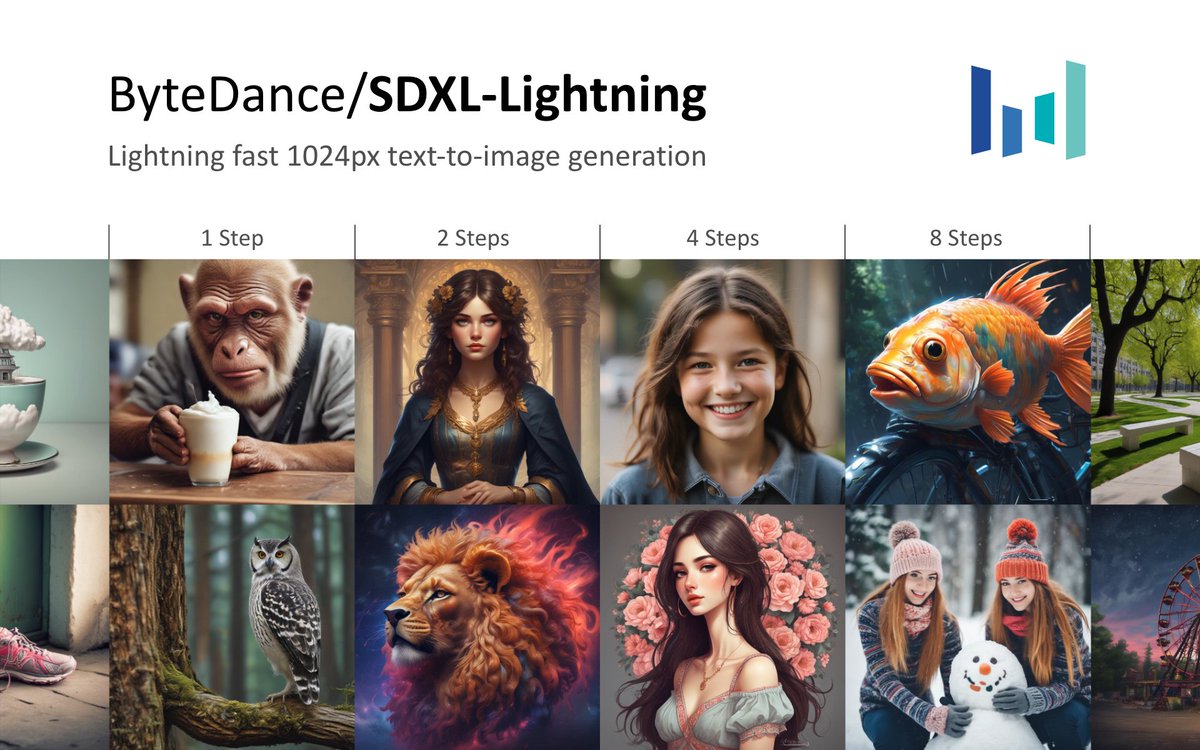

Excited to announce SDXL-Lightning, a lightning fast 1024px text-to-image generation model! huggingface.co/ByteDance/SDXL… Our model achieves new SOTA on one-step/few-steps generation! It is significantly better than prior methods. Try it out and check out our technical report!

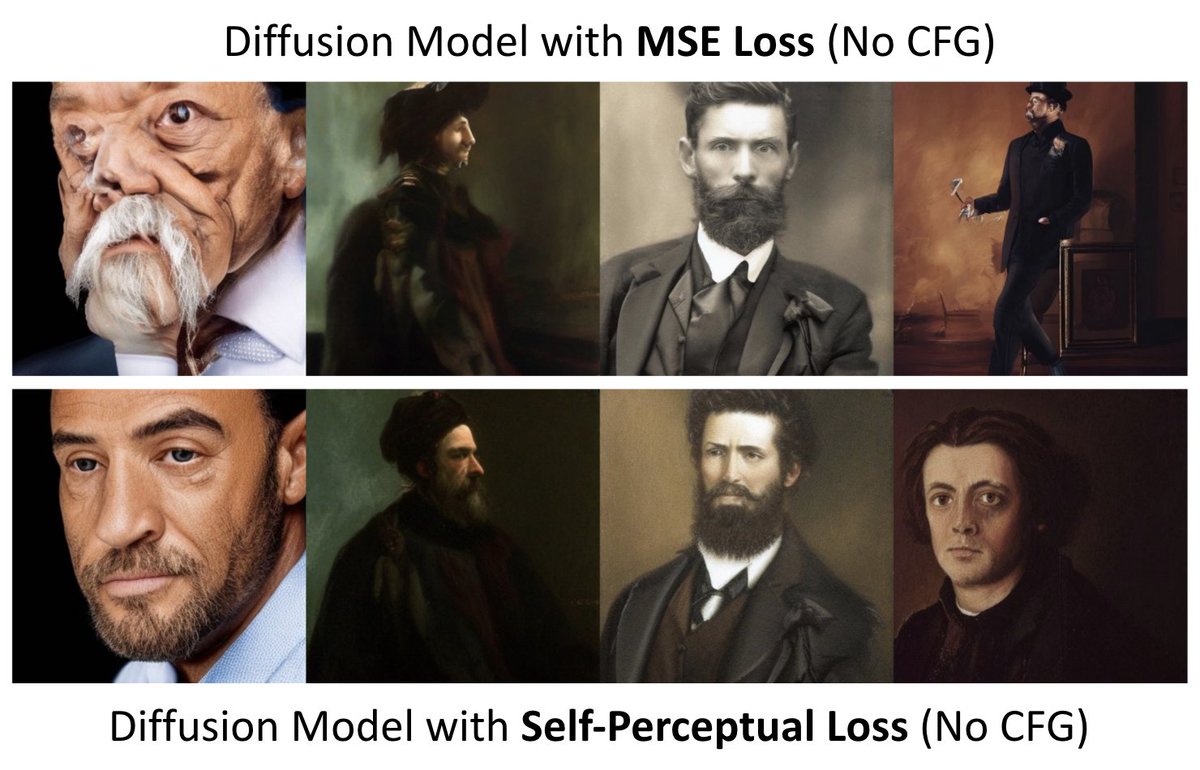

Excited to announce our new paper: Diffusion Model with Perceptual Loss arxiv.org/abs/2401.00110 Our work is the first to train diffusion models with perceptual loss. It improves sample quality without CFG. Works for both conditional and unconditional models.

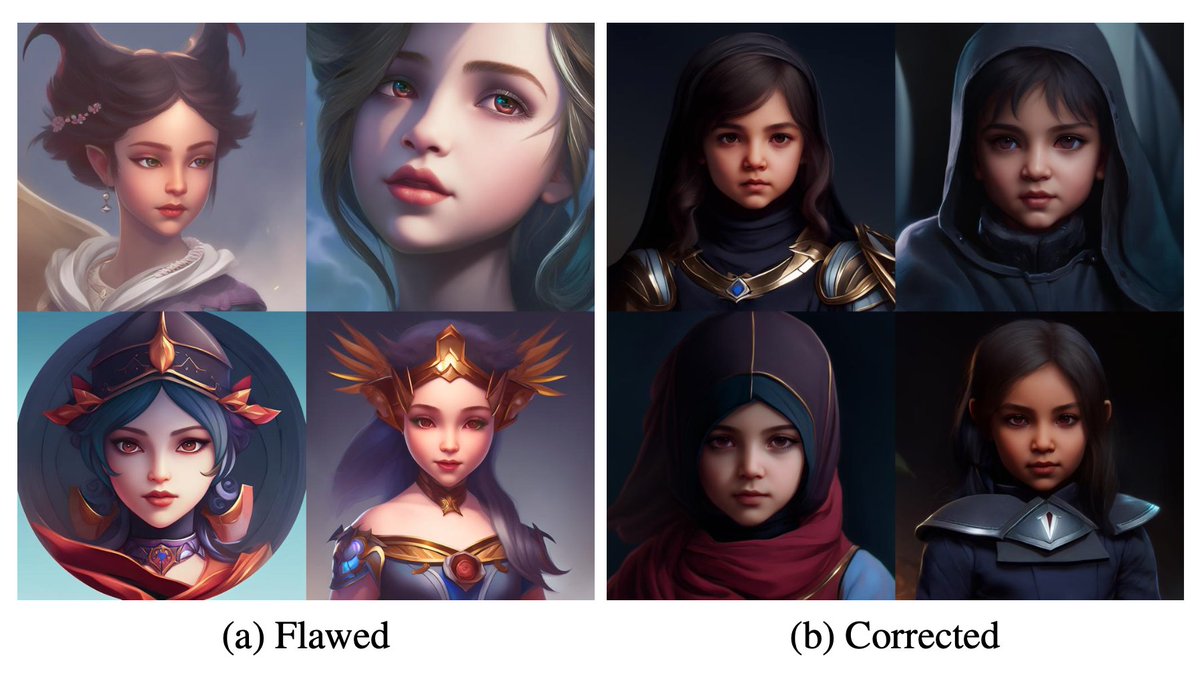

Common diffusion noise schedules and sample steps are flawed! Stable Diffusion has a flawed schedule that limits the images to be plain. We fixed it so it can generate more cinematic results! The findings are applicable to all diffusion models. arxiv.org/abs/2305.08891

Announcing Background Matting V2. A real-time high-resolution background matting method. Our method achieves HD@60FPS and 4K@30FPS. Check out our project website for detail!: grail.cs.washington.edu/projects/backg…

Excited to release v2.0 of our Background Matting project, which is now REAL-TIME & BETTER quality: 60fps at FHD and 30fps at 4K! You can use this with Zoom, check out our demo! 👇 Webpage: grail.cs.washington.edu/projects/backg… Video: youtube.com/watch?v=IYlJJX… More in the thread! [1/6]