Modal

@modal_labs

Bring your own code, and run CPU, GPU, and data-intensive compute at scale. The serverless platform for AI and data teams.

huge round of applause for @bernhardsson & @ekzhang1 at @modal_labs for supporting molab from @marimo_io. marimo.io/blog/announcin…

ICYMI, open models for transcription are very good now. In just the last few months, we've gotten @NVIDIA Parakeet and Canary, @kyutai_labs STT, and @MistralAI Voxtral. Running your own transcription at scale is now 100x faster and 100x cheaper than using a proprietary API.

always in the business of making your cold starts faster

The Modal Python SDK now has first-class support for uv, including both `uv_sync` and `uv_pip_install` 🤝

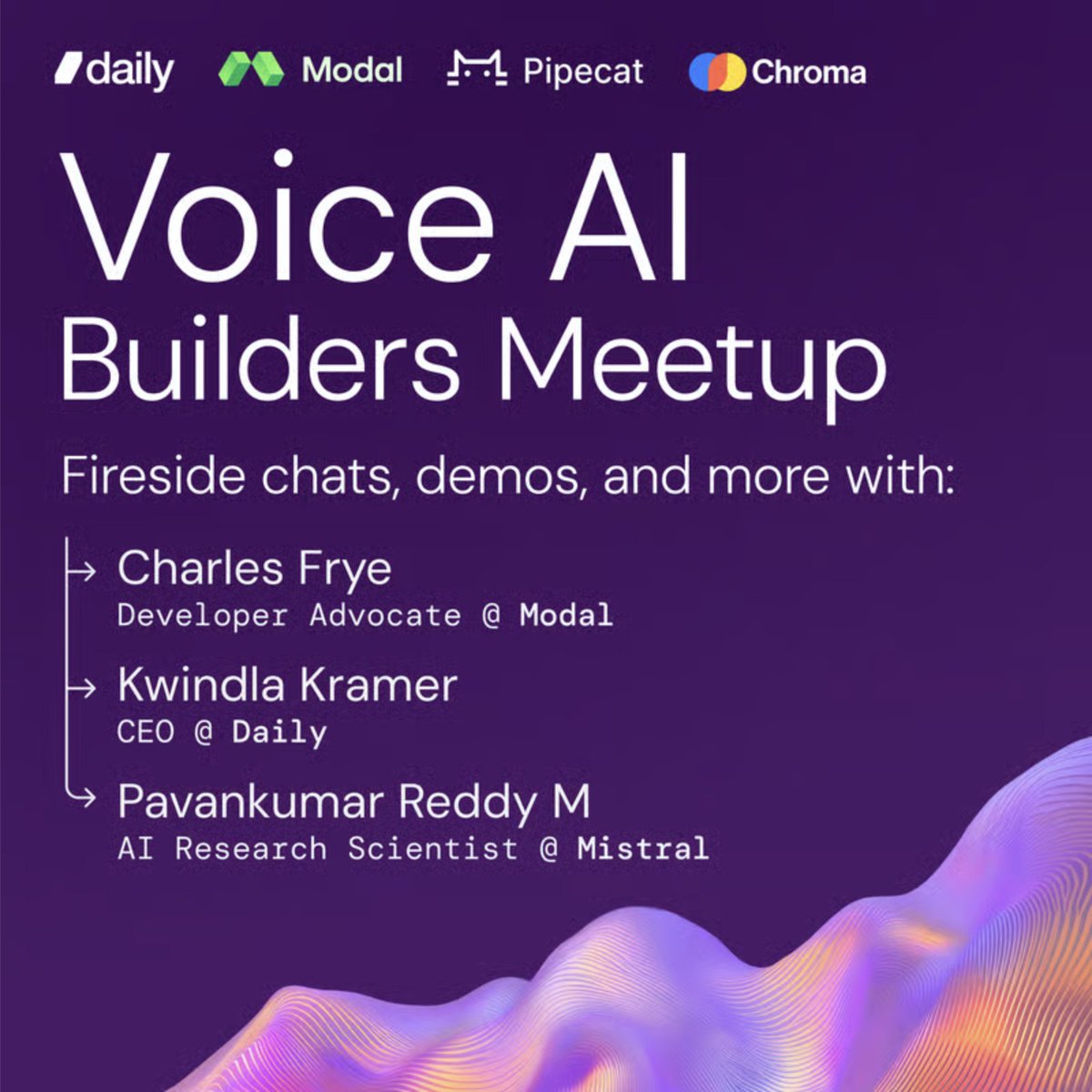

📣 Come learn about the latest in open-source voice AI! July 30th in SF, with a panel & demos ft. @kwindla (Pipecat), @charles_irl (Modal), Pavankumar Reddy (Mistral) and more! Spots are limited, RSVP here: lu.ma/u3hzaj71

First-class uv support in the @modal_labs SDK!

Big day for Python tooling enjoyers: @modal_labs finally has first-class uv support! Use:
• `uv_sync` to sync your Modal image with your local project
• `uv_pip_install` to install packages lightning fast⚡️

molab is built on Modal Sandboxes! Our fast cold boots and support for dynamic image definitions make it easy for them to start notebooks quickly⚡️ Congrats on the launch!

Announcing molab: a cloud-hosted marimo notebook workspace with link-based sharing. Experiment on AI, ML and data using the world’s best Python (and SQL!) notebook. Launching with examples from @huggingface, @weights_biases, and using @PyTorch marimo.io/blog/announcin…

Excited to partner with @pipecat_ai as the training platform for this launch! We love the commitment to open source 🫡 All the code & data you need to train the model yourself is available in the linked repo 🌟

Smart Turn v2: open source, native audio turn detection in 14 languages. New checkpoint of the open source, open data, open training code, semantic VAD model on @huggingface, @FAL, and @pipecat_ai.
- 3x faster inference (12ms on an L40)
- 14 languages (13 more than v1, which…

It’s true. We burned our entire marketing budget on this.

Wordle 1,489 4/6* ⬛🟨⬛⬛⬛ ⬛🟨🟨⬛🟨 ⬛🟩🟩🟨⬛ 🟩🟩🟩🟩🟩 it's a pretty good wordle today @modal_labs @bernhardsson

Super excited that @JamsocketHQ is joining @modal_labs with founders @paulgb and @taylorbaldwin. We've had many talks about virtualization and infrastructure with the Jamsocket team over the years. Looking forward to building some crazy stuff together.

Welcome to the team @paulgb + @taylorbaldwin! 💚

We’re thrilled to share that @JamsocketHQ is joining @modal_labs! We’ve known @paulgb and @taylorbaldwin for years now, and deeply admire their technical vision and execution in everything they’ve built. They’re cooking up exciting things at Modal already, more on this soon :)

The holy trinity of serverless GPU has been achieved internally at @modal_labs:
✅ Custom filesystem optimized for container cold start
✅ CPU snapshot+restore
✅ GPU snapshot+restore
Last one is working internally but not released yet – stay tuned!

Lovable would not have been nearly as lovable without @modal_labs @Cloudflare @supabase @github @vite_js powering pooled VMs, hosting, backend, IDE sync, visual edits, and a lot of other great things. Thank you to all the lesser-known devs out there building stellar tech.