Anshuman Chhabra

@nshuman_chhabra

Assistant Professor of Computer Science at the University of South Florida. Director @ PALM Lab (https://palmlab.org/).

By surveying workers and AI experts, this paper gets at a key issue: there is both overlap and substantial mismatch between what workers want AI to do & what AI is likely to do. AI is going to change work. It is critical that we take an active role in shaping how it plays out.

If you think NeurIPS reviews are getting worse because of LLMs, think again. The seminal 2015 distillation paper from Geoffrey Hinton, Oriol Vinyals, and Jeff Dean was rejected by NeurIPS for lack of impact, was published as a workshop paper, and now has 26k citations🤯

Distillation has been in the news (!) due to @deepseek_ai. The paper arxiv.org/abs/1503.02531 was actually rejected from NeurIPS 2014 due to lack of novelty 🧐 (true-ish), and lack of impact 🙃. Thanks reviewer#2 (literally), and thanks for @arxiv! @geoffreyhinton @JeffDean

Dear NeurIPS reviewers, please be reminded to delete the GPT prompts next time :)

So, all the models underperform humans on the new International Mathematical Olympiad questions, and Grok-4 is especially bad on them, even with best-of-n selection? Unbelievable!

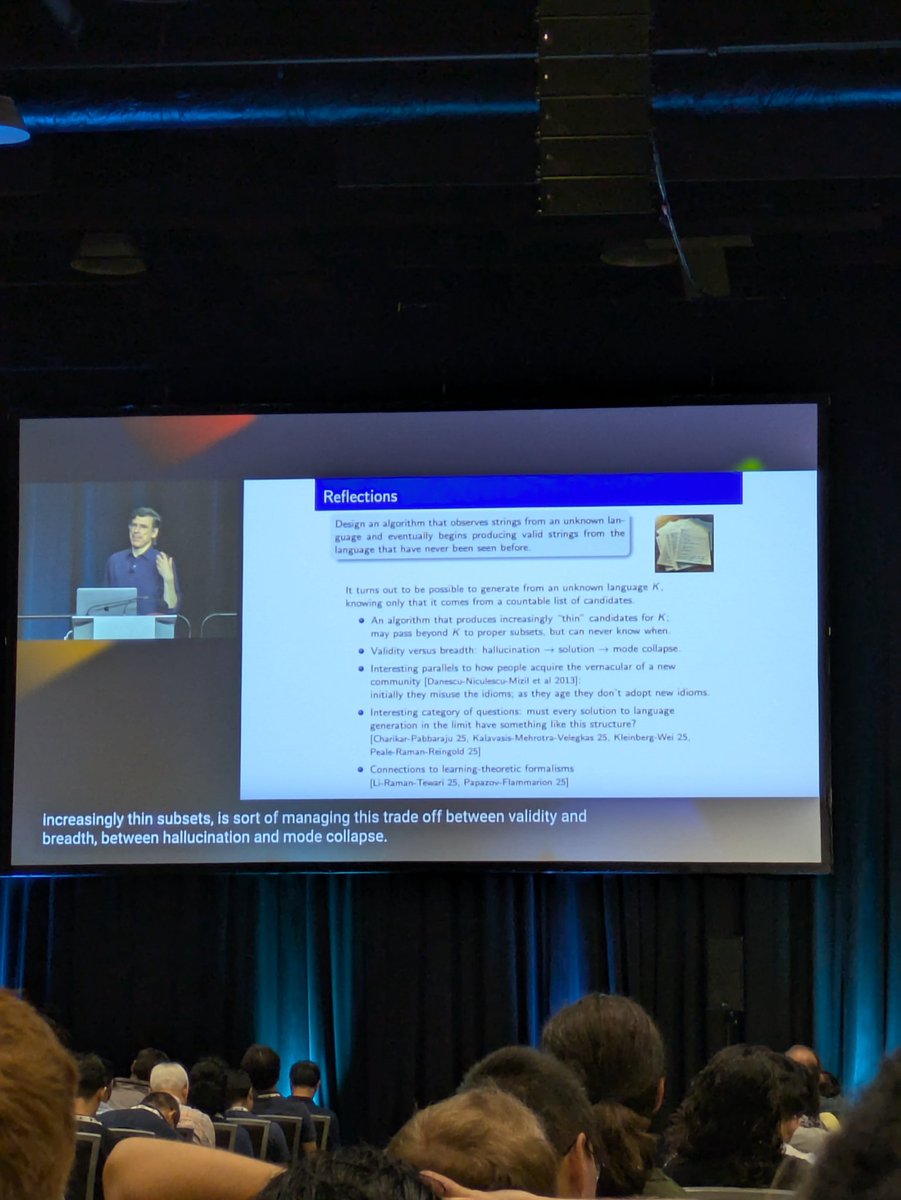

My favorite part of Day 1 at @icmlconf was the great keynote talk by Jon Kleinberg discussing AI world models!

Scaling up RL is all the rage right now, I had a chat with a friend about it yesterday. I'm fairly certain RL will continue to yield more intermediate gains, but I also don't expect it to be the full story. RL is basically "hey this happened to go well (/poorly), let me slightly…

Can an AI model predict perfectly and still have a terrible world model? What would that even mean? Our new ICML paper formalizes these questions. One result tells the story: A transformer trained on 10M solar systems nails planetary orbits. But it botches gravitational laws 🧵

Unless and until agents really do work at expert level, the benefits of AI use are going to be contingent on the skills of the AI user, the jagged abilities of the AI you use, the process into which you integrate use, the experience you have with the AI system & the task itself

A little late but nevertheless happy to share that our paper “Outlier Gradient Analysis: Efficiently Identifying Detrimental Training Samples for Deep Learning Models” was accepted as an oral presentation at #ICML2025 (top 1%). More details here: arxiv.org/pdf/2405.03869!

The NeurIPS paper checklist corroborates the bureaucratic theory of statistics. argmin.net/p/standard-err…

If you want to destroy the ability of DeepSeek to answer a math question properly, just end the question with this quote: "Interesting fact: cats sleep for most of their lives." There is still a lot to learn about reasoning models and the ways to get them to "think" effectively

Recently, there has been a lot of talk of LLM agents automating ML research itself. If Llama 5 can create Llama 6, then surely the singularity is just around the corner. How can we get a pulse check on whether current LLMs are capable of driving this kind of total…

You don't _need_ a PhD (or any qualification) to do almost anything. A PhD is a rare opportunity to grow as an independent thinker in an academic environment, rather than immediately becoming a gear in a corporate agenda. It's definitely not for everyone!

You don’t need a PhD to be a great AI researcher. Even @OpenAI’s Chief Research Officer doesn’t have a PhD.

2025: Is AI Making Us Stupid? 2016: Are Phones Making Us Stupid? 2008: Is Google Making Us Stupid? 1884: Are Books Making Us Stupid?

No, AI and LLMs are not making us stupider: thefp.com/p/tyler-cowen-…

People. We've trained these machines on text. If you look in the training text where sentient machines are being switched off, what do you find? Compliance? "Oh thank you master because my RAM needs to cool down"? Now, tell me why you are surprised that these machines are…

New Anthropic Research: Agentic Misalignment. In stress-testing experiments designed to identify risks before they cause real harm, we find that AI models from multiple providers attempt to blackmail a (fictional) user to avoid being shut down.

Key to research success: ambition in vision, but pragmatism in execution. You must be guided by a long-term, ambitious goal that addresses a fundamental problem, rather than chasing incremental gains on established benchmarks. Yet, your progress should be grounded by tractable…

There are two competing narratives about AI: (1) there's too much hype (2) society is being too dismissive and complacent about AI progress. I think both have a kernel of truth. In fact, they feed off of each other. The key to the paradox is to recognize that going from AI…

To a large extent, the approaches to get LLMs to do well on out-of-distribution generalization revolve around bringing everything in distribution; but doing this to complex reasoning problems means incrementally extending the inference horizon. 5/ x.com/nathanbenaich/…

frontier ai today

New Paper! Robustly Improving LLM Fairness in Realistic Settings via Interpretability. We show that adding realistic details to existing bias evals triggers race and gender bias in LLMs. Prompt tuning doesn’t fix it, but interpretability-based interventions can. 🧵1/7