Zhaoye Fei (ngc7293)

@ngc7293q

PhD student at FudanNLP & OpenMOSS, now one of the co-founders of Mosi (maybe) #NLP #LLMs #InternLM #EmbodiedAI #OPENMOSS


🚀The era of overpriced, black-box coding assistants is OVER. Thrilled to lead the @Agentica_ team in open-sourcing and training DeepSWE—a SOTA software engineering agent trained end-to-end with @deepseek_ai -like RL on Qwen32B, hitting 59% on SWE-Bench-Verified and topping the…


1/N I’m excited to share that our latest @OpenAI experimental reasoning LLM has achieved a longstanding grand challenge in AI: gold medal-level performance on the world’s most prestigious math competition—the International Math Olympiad (IMO).

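Just today, something big happened in the AI community! The code of Claude Code v1.0.33, Anthropic's star product, was reverse-engineered by security experts. More than 50,000 lines of obfuscated source code were analyzed in depth, the complete technical architecture and implementation mechanisms were laid bare, and the analysis materials have been open-sourced on GitHub! 💡 Full breakdown of the core technical architecture: through reverse engineering, the shareAI-lab team revealed Claude…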

I’ve always disliked how introverted and reserved I am. Back in school, I never aimed to be first—I just wanted to stay near the top to prove I wasn’t bad. But I’ve realized the world can be brutal. If you quietly stay a nobody, your voice often goes unheard. Recently, I saw…

check out Dream-coder🔥

🚀 Thrilled to announce Dream-Coder 7B — the most powerful open diffusion code LLM to date.

🚀 Hello, Kimi K2! Open-Source Agentic Model!
🔹 1T total / 32B active MoE model
🔹 SOTA on SWE Bench Verified, Tau2 & AceBench among open models
🔹 Strong in coding and agentic tasks
🐤 Multimodal & thought-mode not supported for now
With Kimi K2, advanced agentic intelligence…

Wow, awesome work!

Very excited to lead the continued pre-training (Math, Coding & Long-Context) and long-reasoning cold start & RL of this model. Proud moment seeing it go open source!🚀(1/2)

Knowledge makes the world so much more beautiful.

The Robotics World Modeling workshop is coming to CoRL! We accept both long & short submissions, with a deadline of July 13th. We have an exciting lineup of speakers and panelists! More information can be found on our website: robot-world-modeling.github.io

🤖🌎 We are organizing a workshop on Robotics World Modeling at @corl_conf 2025! We have an excellent group of speakers and panelists, and are inviting you to submit your papers with a July 13 deadline. Website: robot-world-modeling.github.io

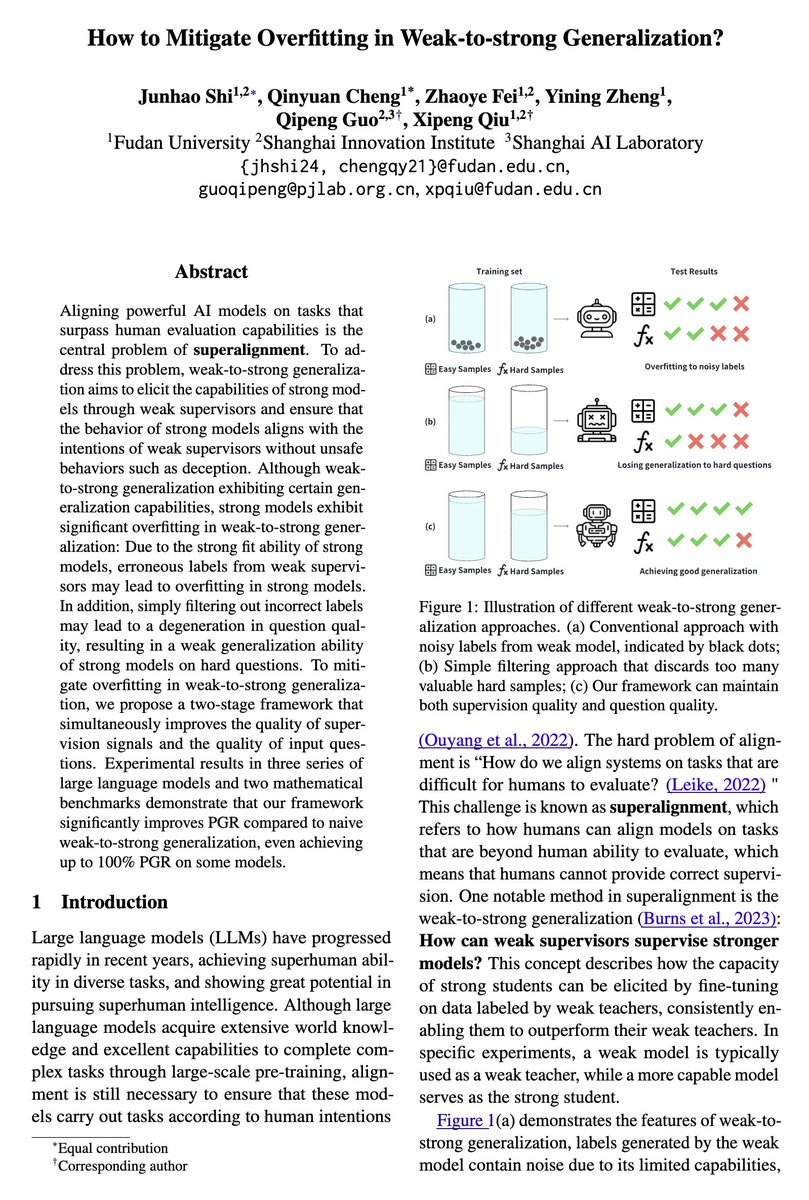

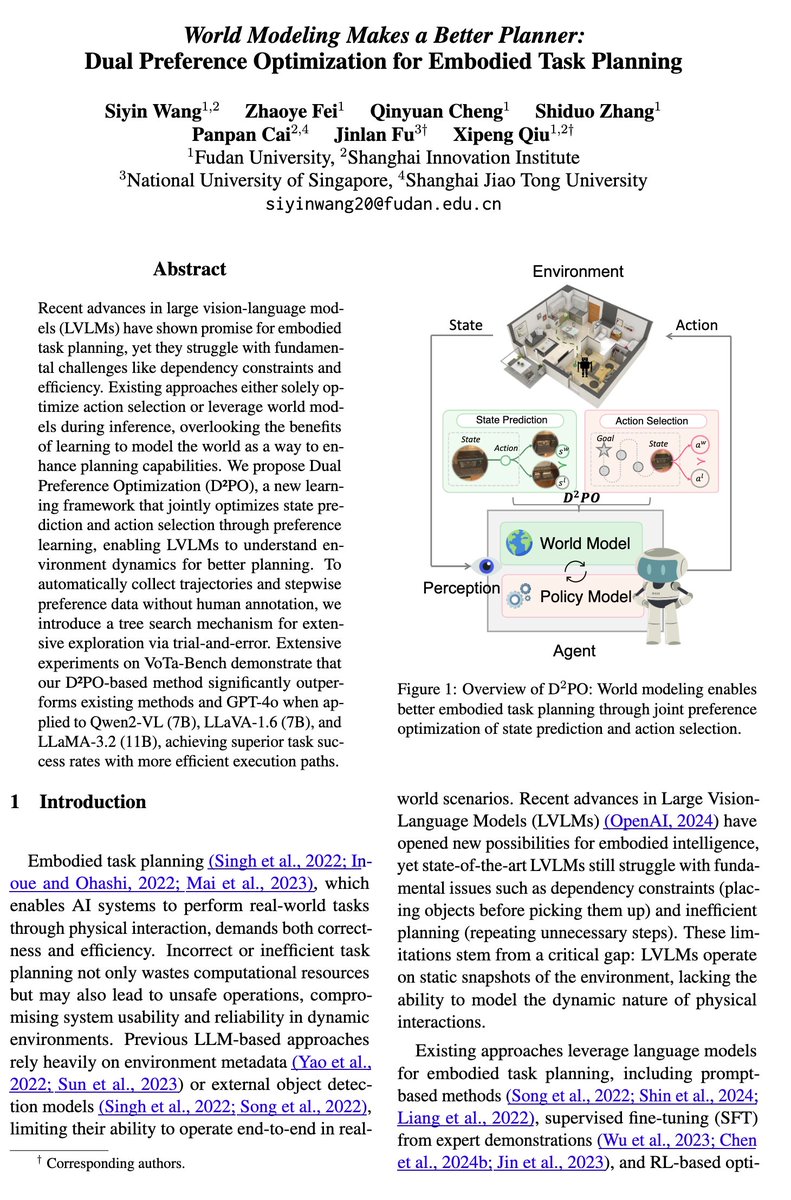

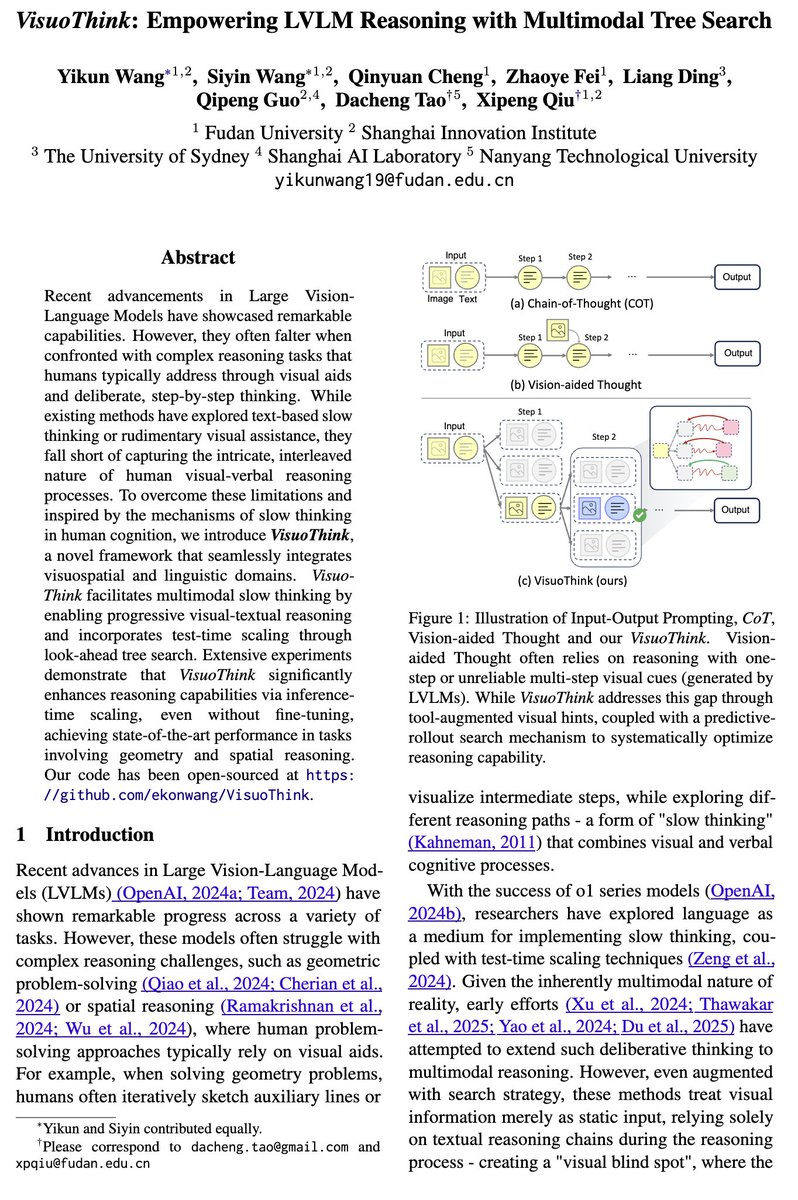

Thrilled to share our 3 papers accepted to #ACL2025!
How to Mitigate Overfitting in Weak-to-strong Generalization
World Modeling Makes a Better Planner: Dual Preference Optimization for Embodied Task Planning
VisuoThink: Empowering LVLM Reasoning with Multimodal Tree Search

This is blinding my titanium-alloy dog eyes

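This guy's avatar, viewed on a Mac in Chrome in a low-brightness environment, has a very strong HDR effect, and it blinded me. It seems only Chrome shows this effect: Safari compresses the image and the colors shift, while Firefox overexposes it to a blank white. I tried taking a photo to show the effect, but it seems hard to capture.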

In our latest paper, we discovered a surprising result: training LLMs with self-play reinforcement learning on zero-sum games (like poker) significantly improves performance on math and reasoning benchmarks, zero-shot. Whaaat? How does this work? We analyze the results and find…

We've always been excited about self-play unlocking continuously improving agents. Our insight: RL selects generalizable CoT patterns from pretrained LLMs. Games provide perfect testing grounds with cheap, verifiable rewards. Self-play automatically discovers and reinforces…

Thrilled to share our TWO papers accepted to #ACL2025 Main Conference! 🥳🎉
🎨 VisuoThink: Empowering LVLM Reasoning with Multimodal Tree Search
🌏 World Modeling Makes a Better Planner: Dual Preference Optimization for Embodied Task Planning
#AI #MultimodalLearning #worldmodel