Zhihui Xie

@_zhihuixie

Ph.D. student @hkunlp2020 | Intern @AIatMeta | Previously @sjtu1896

🚀 Thrilled to announce Dream-Coder 7B — the most powerful open diffusion code LLM to date.

Apart from the performance, it’s pure entertainment just watching Qwen3‑Coder build Qwen Code all by itself. Agentic coding is really something: it explores, understands, plans, and acts seamlessly. Honored to be “in the game”—even if my entire work so far is smashing the Enter…

>>> Qwen3-Coder is here! ✅ We’re releasing Qwen3-Coder-480B-A35B-Instruct, our most powerful open agentic code model to date. This 480B-parameter Mixture-of-Experts model (35B active) natively supports 256K context and scales to 1M context with extrapolation. It achieves…

🔥 LLMs can fix bugs, but can they make your code faster? We put them to the test on real-world repositories, and the results are in! 🚀 New paper: "SWE-Perf: Can Language Models Optimize Code Performance on Real-World Repositories?" Key findings: 1️⃣ We introduce SWE-Perf, the…

You found the secret menu! 🤫

🔥 Kimi K2 is not a reasoning model but you can use it like one 🎯 here's how:

1. give it access to the 'Sequential Thinking' MCP (link below)
2. put it in an agent loop
3. tell it to think sequentially before answering

it's so cheap that it won't cost you that much. use a…

My coauthor @hc81Jeremy will present EmbodiedBench at ICML 2025! 🤖 Oral Session 6A 📍 West Hall C 🕧 July 17 3:30-3:45 pm PDT 📌 Poster Session 📍 East Hall A-B #E-2411 🕜 July 17 4:30-7 pm PDT Come say hi and let’s talk about VLM agent training, evaluation, and benchmarking! 😀

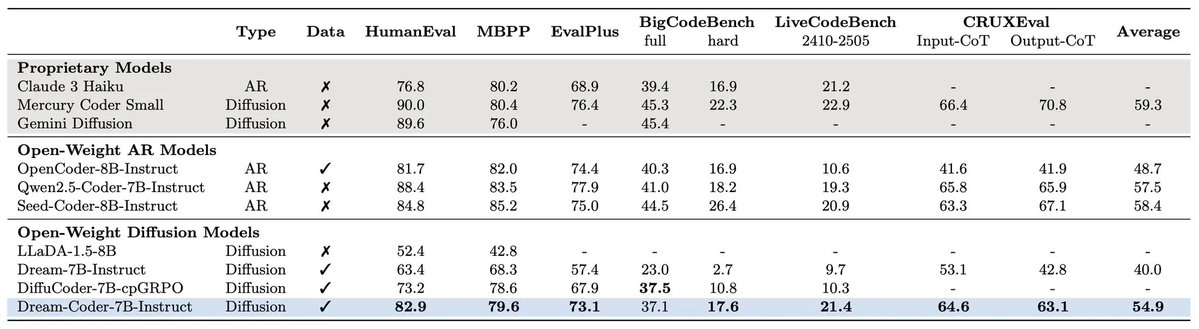

Follow-up to Dream 7B, now focused on code: Dream-Coder 7B is a diffusion-based code LLM from HKU + Huawei Noah’s Ark, built on Qwen2.5-Coder and 322B open tokens. It replaces autoregressive decoding with denoising-based generation, enabling flexible infilling via DreamOn. A…

Dream 7B is a 7B open diffusion language model co-developed by Huawei Noah’s Ark Lab, designed as a scalable, controllable alternative to autoregressive LLMs. It matches or outperforms AR models of similar size on general, math, and coding benchmarks, and demonstrates strong…

📢 Update: Announcing Dream's next-phase development.

- Dream-Coder 7B: A fully open diffusion LLM for code delivering strong performance, trained exclusively on public data.
- DreamOn: targeting the variable-length generation problem in dLLM!

We present DreamOn: a simple yet effective method for variable-length generation in diffusion language models. Our approach boosts code infilling performance significantly and even catches up with oracle results.

📢 We are open sourcing ⚡Reka Flash 3.1⚡ and 🗜️Reka Quant🗜️. Reka Flash 3.1 is a much improved version of Reka Flash 3 that stands out on coding due to significant advances in our RL stack. 👩‍💻👨‍💻 Reka Quant is our state-of-the-art quantization technology. It achieves…