Mike Lasby

@mikelasby

Machine learning researcher, engineer, & enthusiast | PhD Candidate at UofC | When I'm not at the keyboard, I'm outside hiking, biking, or skiing.

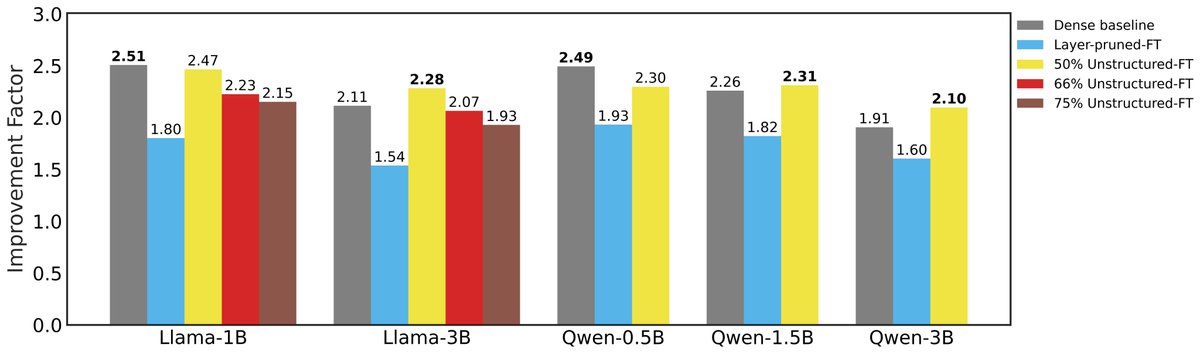

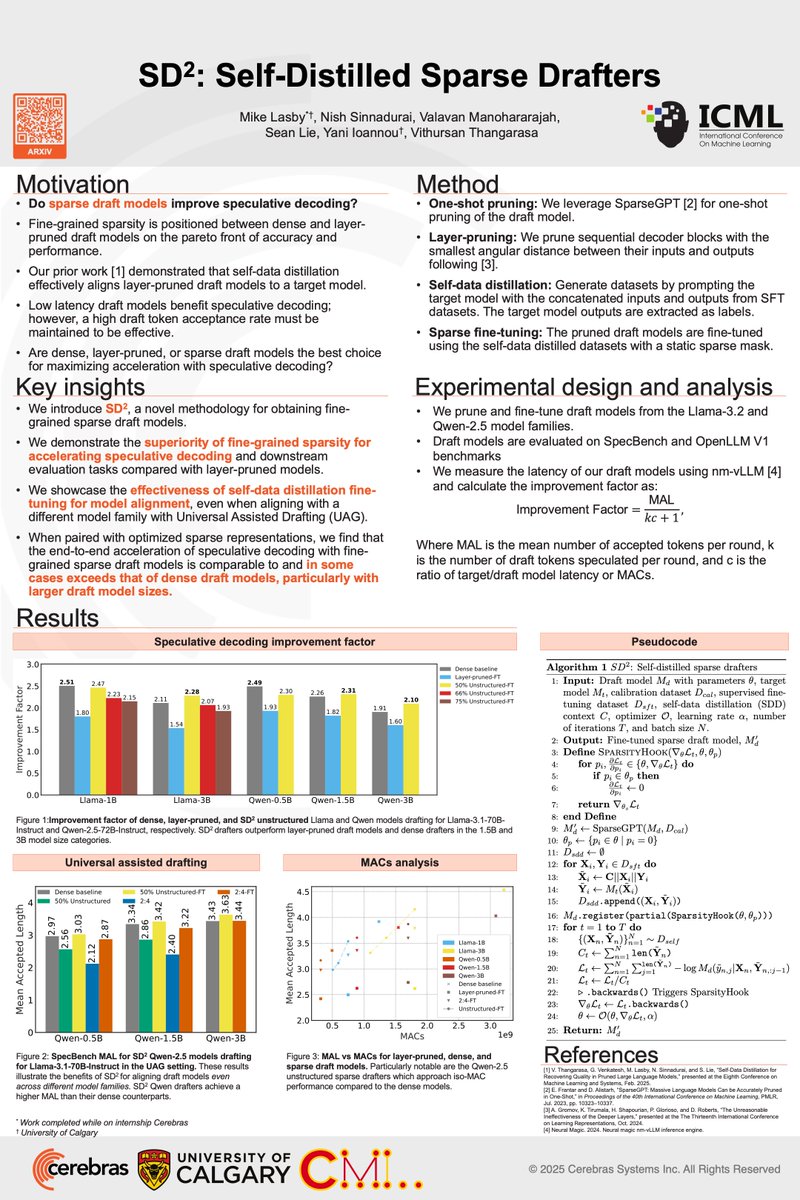

Want faster #LLM inference without sacrificing accuracy? 🚀 Introducing SD², a novel method using Self-Distilled Sparse Drafters to accelerate speculative decoding. We show that self-data distillation combined #sparsity can be used to train low-latency, well-aligned drafters.🧵

'Jonathan Ross and I made this bet in 2017. Groq is now the fastest inference solution in market' Society would expect @chamath to be truthful. I mean pick a model...any model. Look at independent benchmarks. These charts aren't hard to read.

.@JonathanRoss321 (the inventor and father of TPU) and I made this bet in 2017. @GroqInc is now the fastest inference solution in market today. Here are some lessons learned so far: - if we assume we get to Super Intelligence and then General Intelligence, the entire game…

Contributors: - QuTLASS is led by @RobertoL_Castro - FP-Quant contributors @black_samorez @AshkboosSaleh @_EldarKurtic @mgoin_ as well as Denis Kuznedelev and Vage Egiazarian Code and models: - github.com/IST-DASLab/FP-… - huggingface.co/ISTA-DASLab - github.com/IST-DASLab/qut…

We will be presenting Self-Distilled Sparse Drafters at #ICML this afternoon at the Efficient Systems for Foundation Models workshop. Come chat with us about acclerating speculative decoding! 🚀 @CerebrasSystems @UCalgaryML

Having ideas on backdoor attack, functional&representational similarity, and concept-based explainability? I am looking forward to all discussions. Come visit our posters today ! 📍 TAIG at West Meeting room 109 DIG-BUGS at West Ballroom A

I am excited to share that I will present two of my works at #ICML2025 workshops! If you are interested in AI security and XAI, feel free to come by and chat - I'm looking forward to all feedbacks and discussions!

It has been a while since I last gave a talk. I gave two lectures on compression and efficient finetuning this April at The Science and Engineering of Large Language Models Workshop organized for AIMS students. Check out the slides below: utkuevci.com/ml/aims-lectur…

[1/2] 🧵 Presenting our work on Understanding Normalization Layers for Sparse Training today at the HiLD Workshop, ICML 2025! If you're curious about how BatchNorm & LayerNorm impact sparse networks, drop by our poster!

We are presenting this poster at #ICML2025 today (Tues. July 15) in the first poster session 📆 11:00-13:30 in East Exhibition Hall A-B #E-2106 Drop by to learn more on why sparse training is difficult and how to reuse a mask for training from random initialization.

1/10 🧵 🔍Can weight symmetry provide insights into sparse training and the Lottery Ticket Hypothesis? 🧐We dive deep into this question in our latest paper, "Sparse Training from Random Initialization: Aligning Lottery Ticket Masks using Weight Symmetry", accepted at #ICML2025

@UCalgaryML will be at #ICML2025 in Vancouver🇨🇦 next week: our lab has 6 different works being presented by 5 students across both the main conference & 6 workshops! We are also recruiting fully-funded ML PhD/MSc students🧑🎓If attending, reach out to me on Whova app to meet 📅

Tokenization has been the final barrier to truly end-to-end language models. We developed the H-Net: a hierarchical network that replaces tokenization with a dynamic chunking process directly inside the model, automatically discovering and operating over meaningful units of data

🦆🚀QuACK🦆🚀: new SOL mem-bound kernel library without a single line of CUDA C++ all straight in Python thanks to CuTe-DSL. On H100 with 3TB/s, it performs 33%-50% faster than highly optimized libraries like PyTorch's torch.compile and Liger. 🤯 With @tedzadouri and @tri_dao

I am excited to share that I will present two of my works at #ICML2025 workshops! If you are interested in AI security and XAI, feel free to come by and chat - I'm looking forward to all feedbacks and discussions!

Excited to be presenting our work at ICML next week! If you're interested in loss landscapes, weight symmetries, or sparse training, come check out our poster — we'd love to chat. 📍 East Exhibition Hall A-B, #E-2106. 📅 Tue, July 15 | 🕚 11:00 a.m. – 1:30 p.m PDT.

1/10 🧵 🔍Can weight symmetry provide insights into sparse training and the Lottery Ticket Hypothesis? 🧐We dive deep into this question in our latest paper, "Sparse Training from Random Initialization: Aligning Lottery Ticket Masks using Weight Symmetry", accepted at #ICML2025

Featured Paper at @icmlconf - The Internationall Conference on Machine Learning: SD² - Self-Distilled Sparse Drafters Speculative decoding is a powerful technique for reducing the latency of Large Language Models (LLMs), offering a fault-tolerant framework that enables the…

Looks like OpenReview had enough of these reviewers and decided to lay down :)

"Accept reality as it is, try not to regret the past, and try to improve the situation." "The challenge that AI poses is the greatest challenge of humanity ever. And overcoming will also bring the greatest reward. And in some sense, whether you like it or not, your life is going…

🥳 Happy to share our new work – Kinetics: Rethinking Test-Time Scaling Laws 🤔How to effectively build a powerful reasoning agent? Existing compute-optimal scaling laws suggest 64K thinking tokens + 1.7B model > 32B model. But, It only shows half of the picture! 🚨 The O(N²)…

I have bittersweet news to share. Yesterday we merged a PR deprecating TensorFlow and Flax support in transformers. Going forward, we're focusing all our efforts on PyTorch to remove a lot of the bloating in the transformers library. Expect a simpler toolkit, across the board.

Google Cloud Storage is down and PDF files are temporally unavailable through the OpenReview API. status.cloud.google.com/index.html

Singular Value Decomposition is a matrix factorization that expresses a matrix M as UΣVᵀ, where U and V are orthogonal matrices, and Σ is diagonal. It can interpreted as the action of a rotation, a scaling and a rotation/reflection.