Max Ryabinin

@m_ryabinin

Large-scale deep learning & research @togethercompute Learning@home/Hivemind author (DMoE, DeDLOC, SWARM, Petals) PhD in decentralized DL '2023

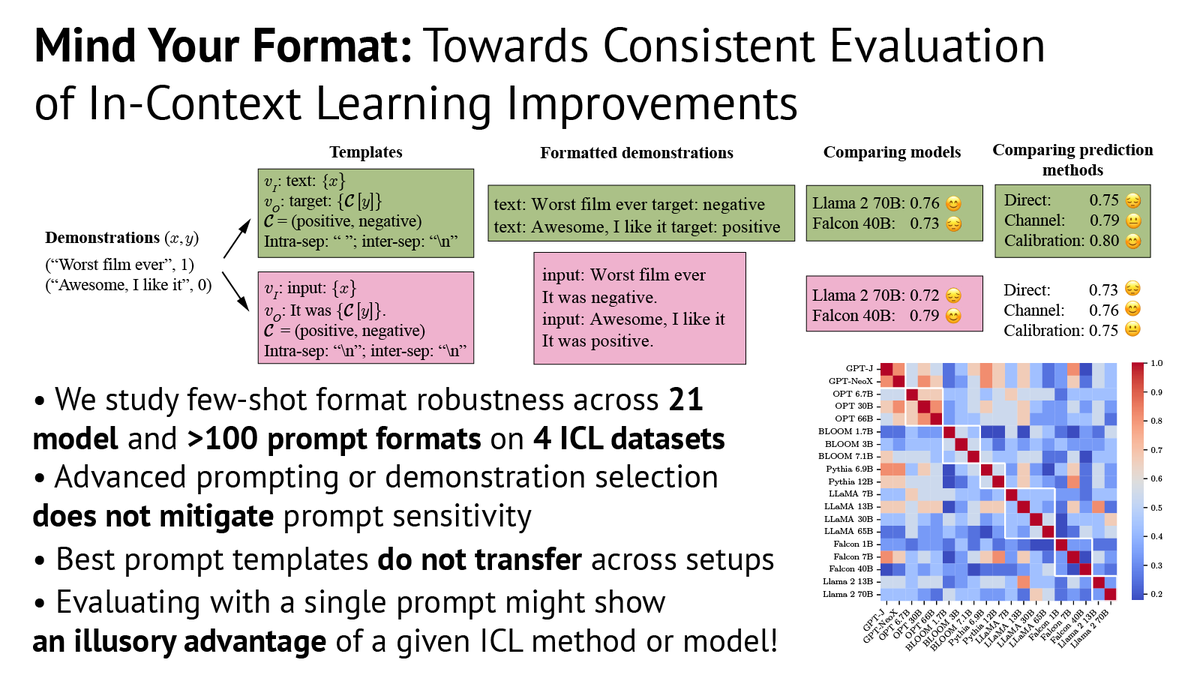

In our new #ACL2024 paper, we show that LLMs remain sensitive to prompt formats even with improved few-shot techniques. Our findings suggest that careful evaluation needs to take this lack of robustness into account 📜: arxiv.org/abs/2401.06766 🖥️: github.com/yandex-researc…

Very excited about this release! A capable 10B model trained over the Internet is direct proof that decentralized DL has a lot of potential — huge kudos to the team Glad I could play a small part in the project, and hoping to get even more results in this research area out soon!

Releasing INTELLECT-1: We’re open-sourcing the first decentralized trained 10B model: - INTELLECT-1 base model & intermediate checkpoints - Pre-training dataset - Post-trained instruct models by @arcee_ai - PRIME training framework - Technical paper with all details

On Saturday we’re hosting the ES-FoMo workshop, with @tri_dao, @dan_biderman, @simran_s_arora, @m_ryabinin and others - we’ve got a great slate of papers and invited talks, come join us! (More on the great slate of speakers soon) x.com/esfomo/status/… 2/

ES-FoMo is back for round three at #ICML2025! Join us in Vancouver on Saturday July 19 for a day dedicated to Efficient Systems for Foundation Models: from 💬reasoning models to🖼️scalable multimodality, 🧱efficient architectures, and more! Submissions due May 26! More below 👇

From my experience, getting a paper on decentralized DL accepted to top-level conferences can be quite tough. The motivation is not familiar to many reviewers, and standard experiment settings don't account for the problems you aim to solve. Hence, I'm very excited to see…

For people not familiar with AI publishing; there are 3 main conferences every year. ICML, ICLR and NeurIPS. These are technical conferences and the equivalent of journals in other disciplines - they are the main publishing venue for AI. The competition to have papers at these…

Distributed Training in Machine Learning🌍 Join us on July 12th as @Ar_Douillard explores key methods like FSDP, Pipeline & Expert Parallelism, plus emerging approaches like DiLoCo and SWARM—pushing the limits of global, distributed training. Learn more: tinyurl.com/9ts5bj7y

Very grateful to have an opportunity to meet researchers from @CaMLSys/@flwrlabs and share some current thoughts on decentralized and communication-efficient deep learning. Thanks to @niclane7 for the invitation!

Looking forward to spending the day with @m_ryabinin, one of the leading figures in decentralized AI. Amazing talk for those nearby Thanks for visiting @CaMLSys Max!

Thanks a lot to Ferdinand for hosting this conversation! It was a great opportunity to overview all parts of SWARM and discuss the motivation behind them in depth. I hope this video will make decentralized DL more accessible: many ideas in the field are simpler than they seem!

The research paper video review on "Swarm Parallelism" along with the author @m_ryabinin, Distinguished Research Scientist @togethercompute is now out ! Link below 👇 For context, most decentralized training today follows DDP-style approaches requiring full model replication on…

There is a lot to dig in, the latest prime intellect paper are very up to date in term of scale / sota. To get deep into the field I suggest reading paper from @m_ryabinin @Ar_Douillard and Martin Jaggi some paper arxiv.org/abs/2412.01152 arxiv.org/abs/2311.08105…

We are introducing Quartet, a fully FP4-native training method for Large Language Models, achieving optimal accuracy-efficiency trade-offs on NVIDIA Blackwell GPUs! Quartet can be used to train billion-scale models in FP4 faster than FP8 or FP16, at matching accuracy. [1/4]

Looking forward to discussing SWARM next Monday, thanks to @FerdinandMom for the invite! Many works about Internet-scale DL target communication savings, but once you want to train large models over random GPUs, other challenges arise. Turns out that pipelining can help here!

Most decentralized training today follows DDP-style approaches requiring full model replication on each node. While practical for those with H100 clusters at their disposal, this remains out of reach for the vast majority of potential contributors. Delving back into the…

ES-FoMo is back for round three at #ICML2025! Join us in Vancouver on Saturday July 19 for a day dedicated to Efficient Systems for Foundation Models: from 💬reasoning models to🖼️scalable multimodality, 🧱efficient architectures, and more! Submissions due May 26! More below 👇

There is also a lot of relevant ideas from earlier work in async/distributed RL, e.g. A3C (arxiv.org/abs/1602.01783) or IMPALA (arxiv.org/abs/1802.01561) I wonder if some methods or learnings from that era could find novel use for RL+LLMs: certain challenges could be quite similar

this infra framework (primeintellect.ai/blog/intellect…) + using SWARM (arxiv.org/abs/2301.11913) on the inference node to fit ultra large models is going to be the future one step closer to the GitTheta (arxiv.org/abs/2306.04529) dream

Releasing INTELLECT-2: We’re open-sourcing the first 32B parameter model trained via globally distributed reinforcement learning: • Detailed Technical Report • INTELLECT-2 model checkpoint primeintellect.ai/blog/intellect…

Releasing INTELLECT-2: We’re open-sourcing the first 32B parameter model trained via globally distributed reinforcement learning: • Detailed Technical Report • INTELLECT-2 model checkpoint primeintellect.ai/blog/intellect…

Workshop alert 🚨 We'll host in ICLR 2025 a workshop on modularity, encompassing collaborative + decentralized + continual learning. Those topics are on the critical path to building better AIs. Interested? submit a paper and join us in Singapore! sites.google.com/corp/view/mcdc…