Alexander Panfilov ✈️ ICML 2025

@kotekjedi_ml

IMPRS-IS & ELLIS PhD Student @ Tübingen. Interested in Trustworthy ML, Security in ML, and AI Safety.

Stronger models need stronger attackers! 🤖⚔️ In our new paper we explore how attacker-target capability dynamics affect red-teaming success, measured as attack success rate (ASR). Key insights:
🔸Stronger models = better attackers
🔸ASR depends on capability gap
🔸Psychology >> STEM for ASR
More in 🧵👇

(Structured) Model pruning is a nice tool when you really need to deploy a model that is a *bit* smaller, but don't want to deploy a bigger hammer like quantization. We recently published an improved *automated* model pruning method, surprisingly based on model merging:

📢Happy to share that I'll join ELLIS Institute Tübingen (@ELLISInst_Tue) and the Max-Planck Institute for Intelligent Systems (@MPI_IS) as a Principal Investigator this Fall! I am hiring for AI safety PhD and postdoc positions! More information here: s-abdelnabi.github.io

A simple AGI safety technique: AI’s thoughts are in plain English, just read them.
We know it works, with OK (not perfect) transparency!
The risk is fragility: RL training, new architectures, etc., threaten transparency.
Experts from many orgs agree we should try to preserve it:…

Presenting two papers at the MemFM workshop at ICML! Both touch upon how near duplicates (and beyond) in LLM training data contribute to memorization. - arxiv.org/pdf/2405.15523 - arxiv.org/pdf/2506.20481 @_igorshilov @yvesalexandre

1/N I’m excited to share that our latest @OpenAI experimental reasoning LLM has achieved a longstanding grand challenge in AI: gold medal-level performance on the world’s most prestigious math competition—the International Math Olympiad (IMO).

I'll be at #ICML2025 this week presenting SafeArena (Wednesday 11AM - 1:30PM in East Exhibition Hall E-701). Come by to chat with me about web agent safety (or anything else safety-related)!

🚨Thought Grok-4 saturated GPQA? Not yet! ⚖️Same questions, when evaluated free-form, Grok-4 is no better than its smaller predecessor Grok-3-mini! Even @OpenAI's o4-mini outperforms Grok-4 here. As impressive as Grok-4 is, benchmarks have not saturated just yet. Also, have…

🚀Thrilled to share my ICML 2025 line-up! Conference paper: “An Interpretable N-gram Perplexity Threat Model for LLM Jailbreaks” (Thu 17 Jul, 11:00–13:30 PDT, East Exhibition Hall A-B) - we propose a fluency-FLOPs threat model and adapt popular attacks to it. We can use…

🚨 Did you know that small-batch vanilla SGD without momentum (i.e. the first optimizer you learn about in intro ML) is virtually as fast as AdamW for LLM pretraining on a per-FLOP basis? 📜 1/n

I will be presenting two papers at #ICML2025 next week!🚀
Thu 17: Interpretable N-gram Threat Model for LLM Jailbreaks (Main Track)
Sat 19: Capability-Based Scaling Laws for LLM Red-Teaming (R2-FM Workshop)
Come chat about jailbreaks, AI control & safety evals!

Happy to have been part of this project! There is still so much more research to be done about understanding (in)capabilities of frontier models - important for building safe AI.

Can frontier models hide secret information and reasoning in their outputs? We find early signs of steganographic capabilities in current frontier models, including Claude, GPT, and Gemini. 🧵

1/ "Swiss cheese security", stacking layers of imperfect defenses, is a key part of AI companies' plans to safeguard models, and is used to secure Anthropic's Opus 4 model. Our new STACK attack breaks each layer in turn, highlighting this approach may be less secure than hoped.

🚨 Ever wondered how much you can ace popular MCQ benchmarks without even looking at the questions? 🤯 Turns out, you can often get significant accuracy just from the choices alone. This is true even on recent benchmarks with 10 choices (like MMLU-Pro) and their vision…

There's been a hole at the heart of #LLM evals, and we can now fix it. 📜New paper: Answer Matching Outperforms Multiple Choice for Language Model Evaluations. ❗️We found MCQs can be solved without even knowing the question. Looking at just the choices helps guess the answer…

We keep finding that CoT explanations are not faithful to models’ true reasoning processes.
But this might be fine as long as the alternative explanations are also valid.
Training models to produce correct, even if unfaithful, explanations is useful for oversight.

In late May, Anthropic released Claude 4 Opus and Sonnet. Despite their very impressive coding ability, nearly all of the accompanying discourse centered on a detail in the system card: they ran an experiment in which Claude blackmailed a fictional user. 1/🧵

🧵 (1/5) Powerful LLMs present dual-use opportunities & risks for national security and public safety (NSPS). We are excited to launch FORTRESS, a new SEAL leaderboard for measuring the adversarial robustness of model safeguards and over-refusal, tailored particularly to NSPS threats.

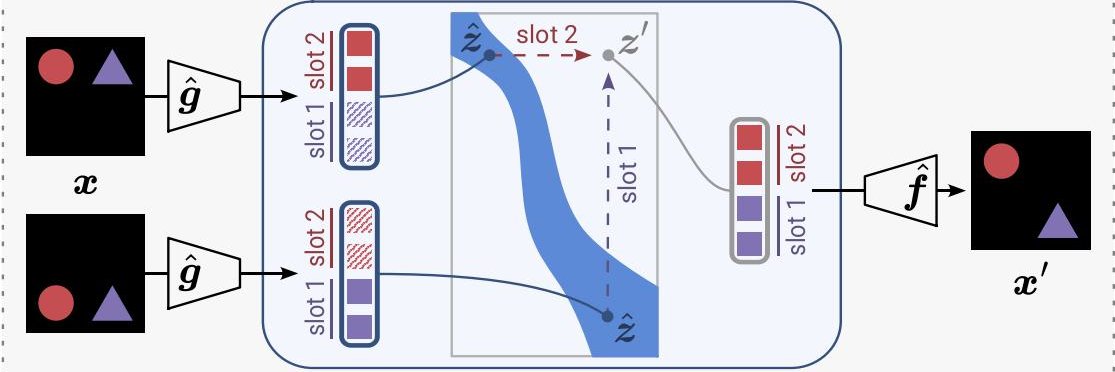

🚀 We’ve released the source code for 𝗔𝗦𝗜𝗗𝗘 (presented as an 𝗢𝗿𝗮𝗹 at the #ICLR2025 BuildTrust workshop)! 🔍ASIDE boosts prompt injection robustness without safety-tuning: we simply rotate embeddings of marked tokens by 90° during instruction-tuning and inference 👇code
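The tweet only summarizes the mechanism. As a rough illustration, here is a minimal Python sketch of what "rotating embeddings of marked tokens by 90°" could look like. It assumes (an assumption, not the released ASIDE code) that the rotation acts on consecutive pairs of embedding dimensions, mapping (x1, x2) to (-x2, x1), and that "marked" tokens are the ones to be treated as data rather than instructions. The names `rotate_90`, `embed`, and the toy embedding table are hypothetical.

```python
from typing import Dict, List, Sequence


def rotate_90(vec: Sequence[float]) -> List[float]:
    """Rotate every consecutive pair of dimensions by 90 degrees: (x1, x2) -> (-x2, x1).

    Hypothetical choice of 90-degree rotation; assumes an even embedding dimension.
    """
    if len(vec) % 2 != 0:
        raise ValueError("embedding dimension must be even")
    out: List[float] = []
    for i in range(0, len(vec), 2):
        x1, x2 = vec[i], vec[i + 1]
        out.extend([-x2, x1])
    return out


def embed(
    token_ids: Sequence[int],
    marked: Sequence[bool],
    table: Dict[int, List[float]],
) -> List[List[float]]:
    """Look up each token's embedding; marked tokens get the rotated copy."""
    return [
        rotate_90(table[t]) if is_marked else list(table[t])
        for t, is_marked in zip(token_ids, marked)
    ]


# Usage: the same token id gets a different embedding when it is marked.
table = {7: [1.0, 3.0, 0.5, 2.0]}  # hypothetical toy embedding table
plain, rotated = embed([7, 7], [False, True], table)
print(plain)    # [1.0, 3.0, 0.5, 2.0]
print(rotated)  # [-3.0, 1.0, -2.0, 0.5]
```

Because this rotation is orthogonal, a marked token keeps its embedding norm but becomes orthogonal to its unmarked counterpart (the dot product above is 0), which is one plausible way a model could tell the two roles apart without extra parameters.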

New paper: real attackers don't jailbreak. Instead, they often use open-weight LLMs. For harder misuse tasks, they can use "decomposition attacks," where a misuse task is split into benign queries across new sessions. These answers help an unsafe model via in-context learning.

What causes jailbreaks to transfer between LLMs? We find that jailbreak strength and model representation similarity predict transferability, and we can engineer model similarity to improve transfer. Details in🧵