Matthieu Meeus

@matthieu_meeus

PhD student @ImperialCollege Privacy/Safety + AI https://matthieumeeus.com/

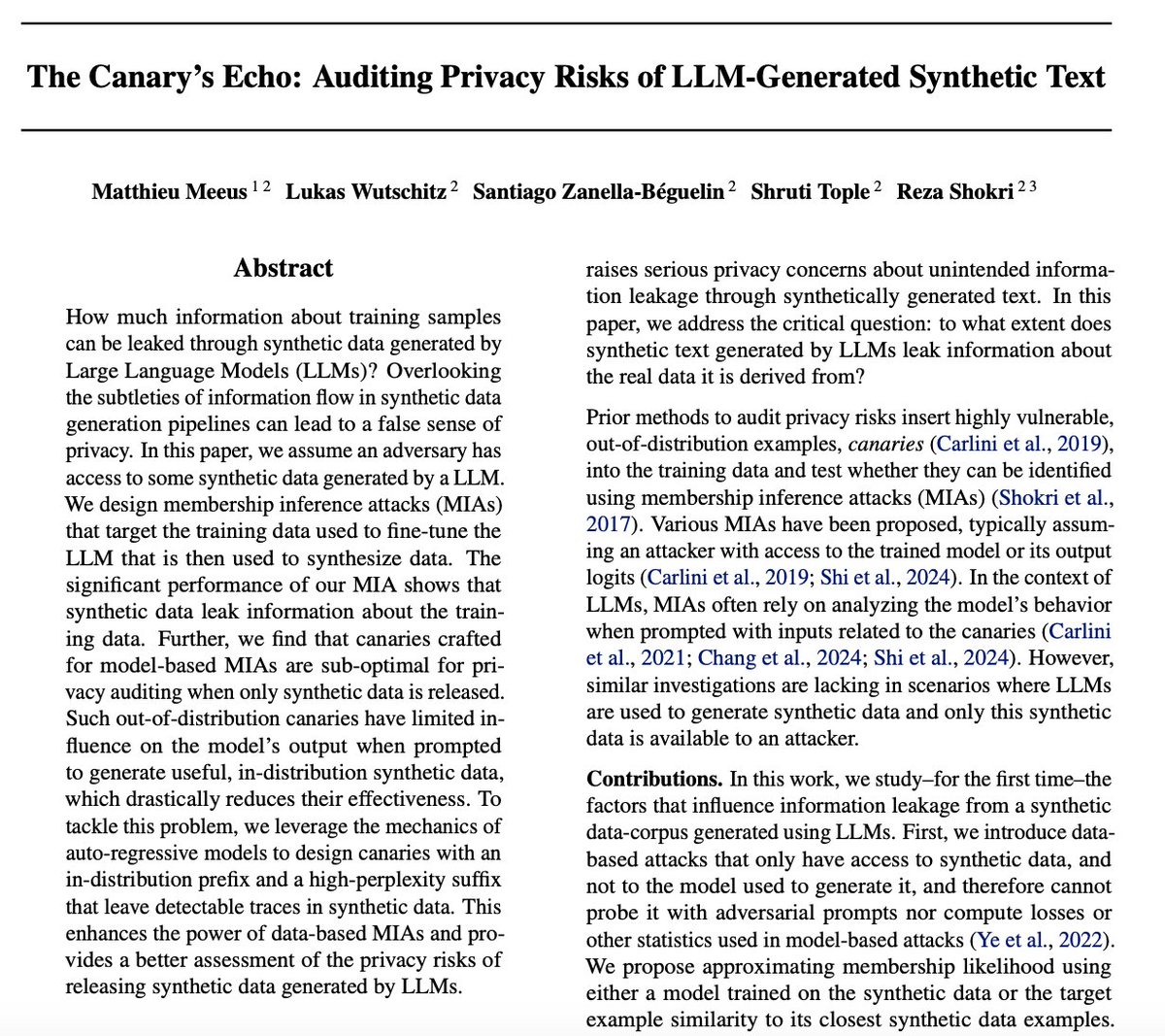

(1/9) LLMs can regurgitate memorized training data when prompted adversarially. But what if you *only* have access to synthetic data generated by an LLM? In our @icmlconf paper, we audit how much information synthetic data leaks about its private training data 🐦🌬️

Presenting two papers at the MemFM workshop at ICML! Both touch upon how near duplicates (and beyond) in LLM training data contribute to memorization.
- arxiv.org/pdf/2405.15523
- arxiv.org/pdf/2506.20481

@_igorshilov @yvesalexandre

Lovely place for a conference! Come see my poster on privacy auditing of synthetic text data at 11am today! East Exhibition Hall A-B #E-2709

Will be at @icmlconf in Vancouver next week! 🇨🇦 Will be presenting our poster on privacy auditing of synthetic text. Presentation here icml.cc/virtual/2025/p… And will also be presenting two papers at the MemFM workshop! icml2025memfm.github.io Hit me up if you want to chat!

New paper accepted @ USENIX Security 2025! We show how to identify training samples most vulnerable to membership inference attacks - FOR FREE, using artifacts naturally available during training! No shadow models needed. Learn more: computationalprivacy.github.io/loss_traces/ Thread below 🧵

How good can privacy attacks against LLM pretraining get if you assume a very strong attacker? Check it out in our preprint ⬇️

Are modern large language models (LLMs) vulnerable to privacy attacks that can determine if given data was used for training? Models and datasets are quite large, so what should we even expect? Our new paper looks into this exact question. 🧵 (1/10)

Google presents Strong Membership Inference Attacks on Massive Datasets and (Moderately) Large Language Models

!!!

Without its international students, Harvard is not Harvard. hrvd.me/IntStudents25t

🚨One (more!) fully-funded PhD position in our group at Imperial College London – Privacy & Machine Learning 🔐🤖 starting Oct 2025 Plz RT 🔄

Yes yes I know the fundamental law of information recovery and differential privacy, but if there are really just a few summary statistics, surely it should be anonymous? 🥸 I definitely used to think this, until we started looking into it two years ago. A thread 🧵