Hyunwoo Kim

@hyunw_kim

Social/Reasoning/Cognition + AI — Incoming Assistant Professor @kaist_ai & Postdoc @nvidia | Prev. @allen_ai | PhD @SeoulNatlUni

📢I'm thrilled to announce that I’ll be joining @KAIST_AI as an Assistant Professor in 2026, leading the Computation & Cognition (COCO) Lab🤖🧠: coco-kaist.github.io We'll be exploring reasoning, learning w/ synthetic data, and social agents! +I'm spending a gap year @nvidia✨

🚨 Paper Alert: “RL Finetunes Small Subnetworks in Large Language Models” From DeepSeek V3 Base to DeepSeek R1 Zero, a whopping 86% of parameters were NOT updated during RL training 😮😮 And this isn’t a one-off. The pattern holds across RL algorithms and models. 🧵A Deep Dive

Very excited to share that HAICosystem has been accepted to #COLM2025 ! 🎉 Multi-turn, interactive evaluation is THE future, think Tau-Bench, TheAgentCompany, Sotopia, ... Proud to take a small step toward open-ended, interactive AI safety eval, and excited for what’s next! 😎

1/ What if you could see how your AI handles the chaos of the real world? Meet HAICOSYSTEM: the framework to simulate human-AI-environment interactions—all at once. 🌍🤖 Find out if your AI is truly safe under pressure from real-world scenarios! 🔥 🌐: haicosystem.org

“Papers don’t matter,” says the one who published hundreds. “PhDs don’t matter,” says the one whose career was built on theirs. “Money doesn’t matter,” says the millionaire / billionaire. Maybe listen to yourself first.

Can data owners & LM developers collaborate to build a strong shared model while each retaining data control? Introducing FlexOlmo💪, a mixture-of-experts LM enabling: • Flexible training on your local data without sharing it • Flexible inference to opt in/out your data…

Introducing FlexOlmo, a new paradigm for language model training that enables the co-development of AI through data collaboration. 🧵

I've struggled to announce this amidst so much dark & awful going on in the world, but with 1mo to go, I wanted to share that: (i) I finally graduated; (ii) In August, I'll begin as an assistant professor in the CS dept. of the National University of Singapore.

💡Beyond math/code, instruction following with verifiable constraints is suitable to be learned with RLVR. But the set of constraints and verifier functions is limited and most models overfit on IFEval. We introduce IFBench to measure model generalization to unseen constraints.

🔥 GUI agents struggle with real-world mobile tasks. We present MONDAY—a diverse, large-scale dataset built via an automatic pipeline that transforms internet videos into GUI agent data. ✅ VLMs trained on MONDAY show strong generalization ✅ Open data (313K steps) (1/7) 🧵 #CVPR

A bit late to announce, but I’m excited to share that I'll be starting as an assistant professor at the University of Maryland @umdcs this August. I'll be recruiting PhD students this upcoming cycle for fall 2026. (And if you're a UMD grad student, sign up for my fall seminar!)

🚨New Paper Alert🚨 Excited to share our new video game benchmark, "Orak"! 🕹️ It was a thrilling experience to test whether LLM/VLM agents can solve real video games 🎮 Looking forward to continuing my research on LLM/VLM-based game agents with @Krafton_AI !

As a video gaming company, @Krafton_AI has secretly been cooking something big with @NVIDIAAI for a while! 🥳 We introduce Orak, the first comprehensive video gaming benchmark for LLMs! arxiv.org/abs/2506.03610

Is RL really scalable like other objectives? We found that just scaling up data and compute is *not* enough to enable RL to solve complex tasks. The culprit is the horizon. Paper: arxiv.org/abs/2506.04168 Thread ↓

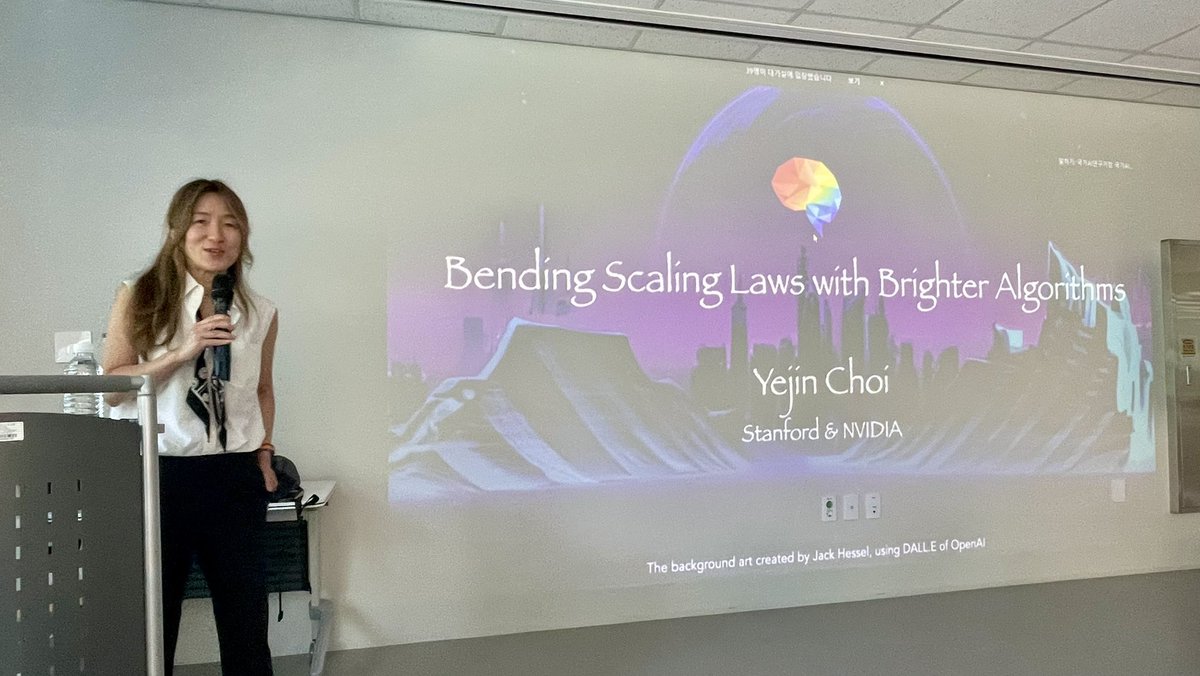

It’s really wonderful to have @YejinChoinka for this super exciting talk at @kaist_ai 🤩🚀🚀🚀

Does anyone know of any movies/stories where AI (not aliens) saves humanity by solving unsolved problems? I’d love to see some concrete scenarios of how this could play out. ChatGPT search didn’t help. “Creator” doesn’t count. Has no one ever imagined this? Not even in sci-fi?

What happens when you ✨scale up RL✨? In our new work, Prolonged RL, we significantly scale RL training to >2k steps and >130k problems—and observe exciting, non-saturating gains as we spend more compute 🚀.

RL scaling is here arxiv.org/pdf/2505.24864

Data curation is crucial for LLM reasoning, but how do we know if our dataset is not overfit to one benchmark and generalizes to unseen distributions? 🤔 𝐃𝐚𝐭𝐚 𝐝𝐢𝐯𝐞𝐫𝐬𝐢𝐭𝐲 is key, when measured correct—it strongly predicts model generalization in reasoning tasks! 🧵

Sooner or later, we'll see AI companies promoting their AI agents by showcasing how many papers their PRO-mode agents have had accepted at ICLR/NeurIPS/ICML/COLM as poster, spotlight, oral, etc.😂

Totally agree. While language models can significantly support many aspects of scientific research, I’m not convinced it’s a good idea to fully automate the research process and submit the resulting papers for peer review just to showcase the capabilities of your agents…

Check out our latest work on self-improving LLMs, where we try to see if LLMs can utilize their internal self consistency as a reward signal to bootstrap itself using RL. TL;DR: it can, to some extent, but then ends up reward hacking the self-consistency objective. We try to see…

Thrilled to announce that I will be joining @UTAustin @UTCompSci as an assistant professor in fall 2026! I will continue working on language models, data challenges, learning paradigms, & AI for innovation. Looking forward to teaming up with new students & colleagues! 🤠🤘

New for May 2025! * RL on something silly makes Qwen reason well v1 * RL on something silly makes Qwen reason well v2 * RL on something silly makes Qwen reason well v3 ...

Summary in case you missed any LLM research from the past month: * RL on math datasets improves math ability v1 * RL on math datasets improves math ability v2 * RL on math datasets improves math ability v3 * RL on math datasets improves math ability v4 * RL on math datasets...

Excited to announce the Artificial Social Intelligence Workshop @ ICCV 2025 @ICCVConference Join us in October to discuss the science of social intelligence and algorithms to advance socially-intelligent AI! Discussion will focus on reasoning, multimodality, and embodiment.

🤯 We cracked RLVR with... Random Rewards?! Training Qwen2.5-Math-7B with our Spurious Rewards improved MATH-500 by: - Random rewards: +21% - Incorrect rewards: +25% - (FYI) Ground-truth rewards: + 28.8% How could this even work⁉️ Here's why: 🧵 Blogpost: tinyurl.com/spurious-rewar…