@fraboeni

Tenure track faculty @CISPA. Interested in #MachineLearning, #Privacy, and #Trustworthiness.

Our panelists at the workshop on the impact of memorization on foundation models @icmlconf are discussing the gaps between academia and industry when it comes to memorization. Don’t miss it and join us!

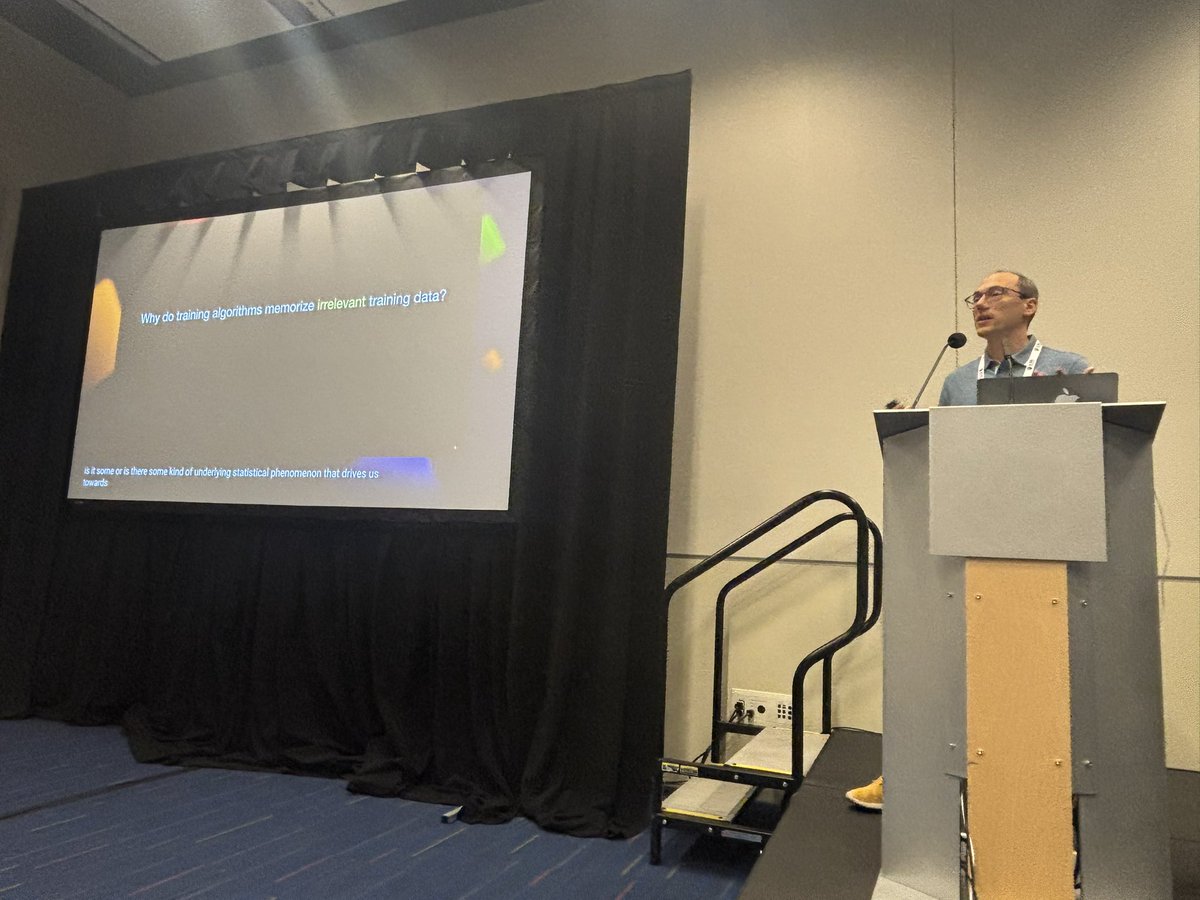

🧠📚 Join us for Vitaly Feldman’s explanation of a highly relevant question: “Why do training algorithms memorize *irrelevant* training data?” #memorization #workshop #MemFM #ICML2025

Big kudos also to @niloofar_mire for leading us through an exciting day full of #memorization at our #MemFM workshop at #ICML!

We’re happy to have such a great audience, as well as great invited and contributed talks, at our #MemFM workshop at #ICML’25. And everyone is excitedly waiting for Feder Cooper’s upcoming talk on #copyrighted data in #foundation #models. ⏲️ Happening in 10 minutes!!

Join us in West Building, room 223, for the #Memorization workshop!!

Our poster session at the #MemFM #Workshop at #ICML is taking off! But there is also a lot of excitement in the air for the first talk by @rzshokri ! ⏰Happening in 15 minutes!!!!

Our workshop #MemFM at #ICML’25 is going to start in 15 minutes in West Meeting Room 223-224 in the #Vancouver Convention Centre. We’re very much looking forward to hosting you for interesting talks. We are also starting the day with #breakfast snacks. icml2025memfm.github.io

🚨 Join us at ICML 2025 for the Workshop on Unintended Memorization in Foundation Models (MemFM)! 🚨 📅 Saturday, July 19 🕓 08:25 am - 5 pm 📍 West Meeting Room 223-224, Vancouver Convention Centre Link: icml2025memfm.github.io Let's build more Trustworthy Foundation Models!

Come to our poster E-2608 at #ICML2025 to find out if your data was used to train a generative model. This is amazing work with Bihe Zhao @pratyushmaini @fraboeni!

Are modern large language models (LLMs) vulnerable to privacy attacks that can determine whether given data was used for training? Models and datasets are quite large, so what should we even expect? Our new paper looks into this exact question. 🧵 (1/10)

Super excited to announce the second batch of speakers for our DIG-BUG's workshop on #reliable and #trustworthy #GenerativeAI at #ICML’25! Looking forward to @tatsu_hashimoto, @RICEric22 and Ivan Evtimov! Hope to see all of you there.

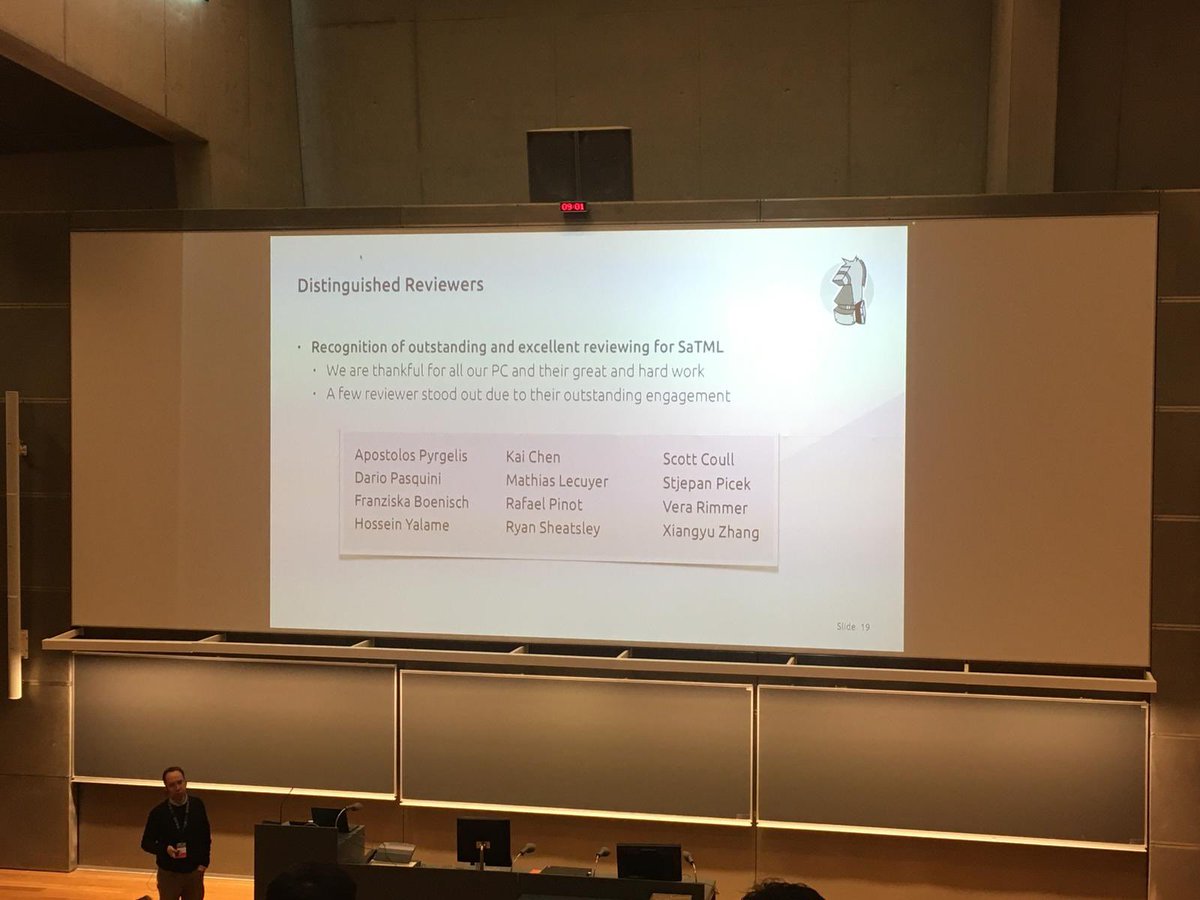

I am excited to announce that I have been named "Distinguished Reviewer" for the #SaTML #conference for the second year in a row.

I’m so excited. The 120 students who signed up definitely show that there is a need to learn about novel facets and risks of machine learning. We compiled all the content around trustworthy ML into one overview lecture with videos, reading lists, and coding assignments! 👩‍💻

Today @fraboeni and @adam_dziedzic kicked off the new semester with a full lecture hall. 👩‍🎓 If you also want to learn about #Trustworthy #MachineLearning, we invite you to follow our lecture online: youtube.com/playlist?list=…! #teaching #university #students #semester

Check out our latest work, which was recently accepted at ICLR'25 🥳.

🔥 New ICLR 2025 Paper! It would be cool to control the content of text generated by diffusion models with less than 1% of parameters, right? And how about doing it across diverse architectures and within various applications? 🚀 🫡 Together with @lukxst, we show how: 🧵 1/

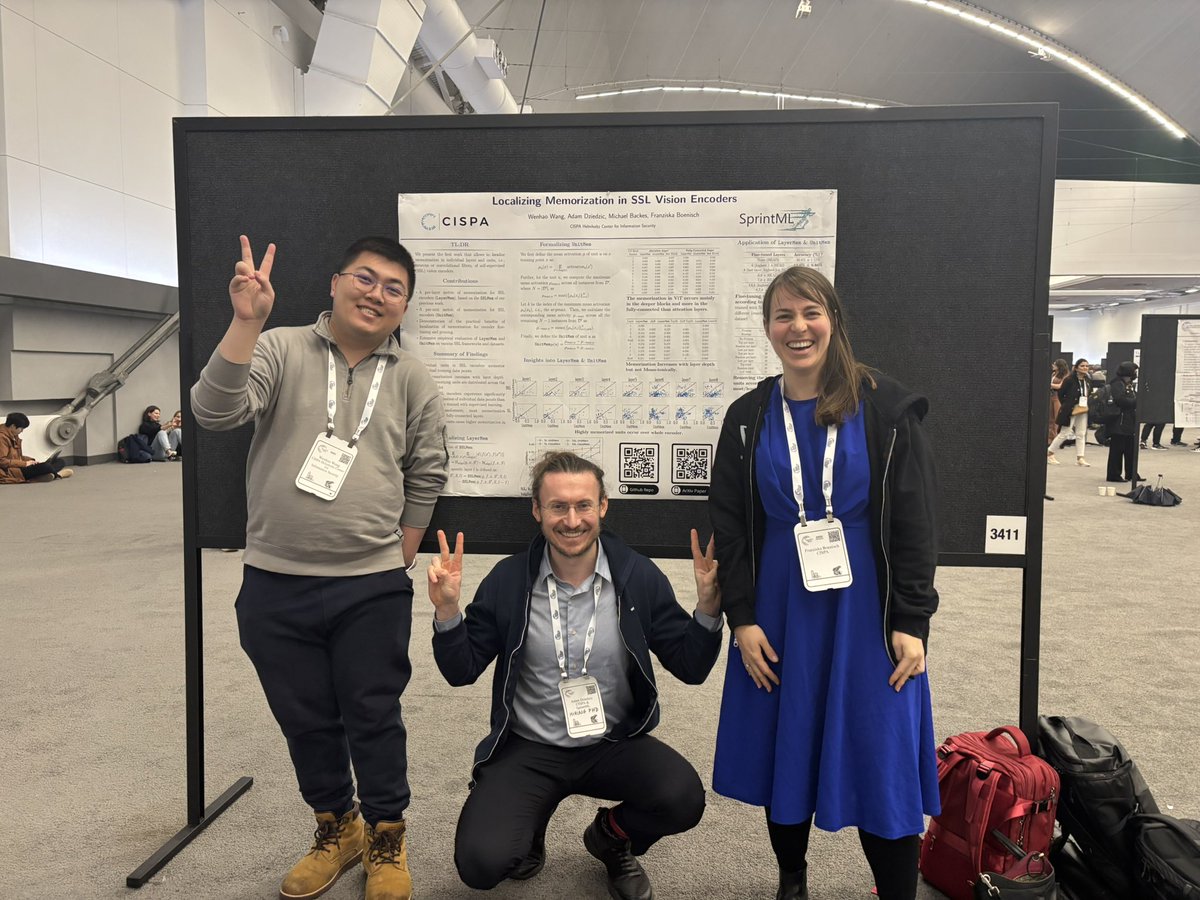

Come and find us to learn about localizing #memorization in individual parameters of #SSL models. 📍East Hall, #3411, at #NeurIPS2024

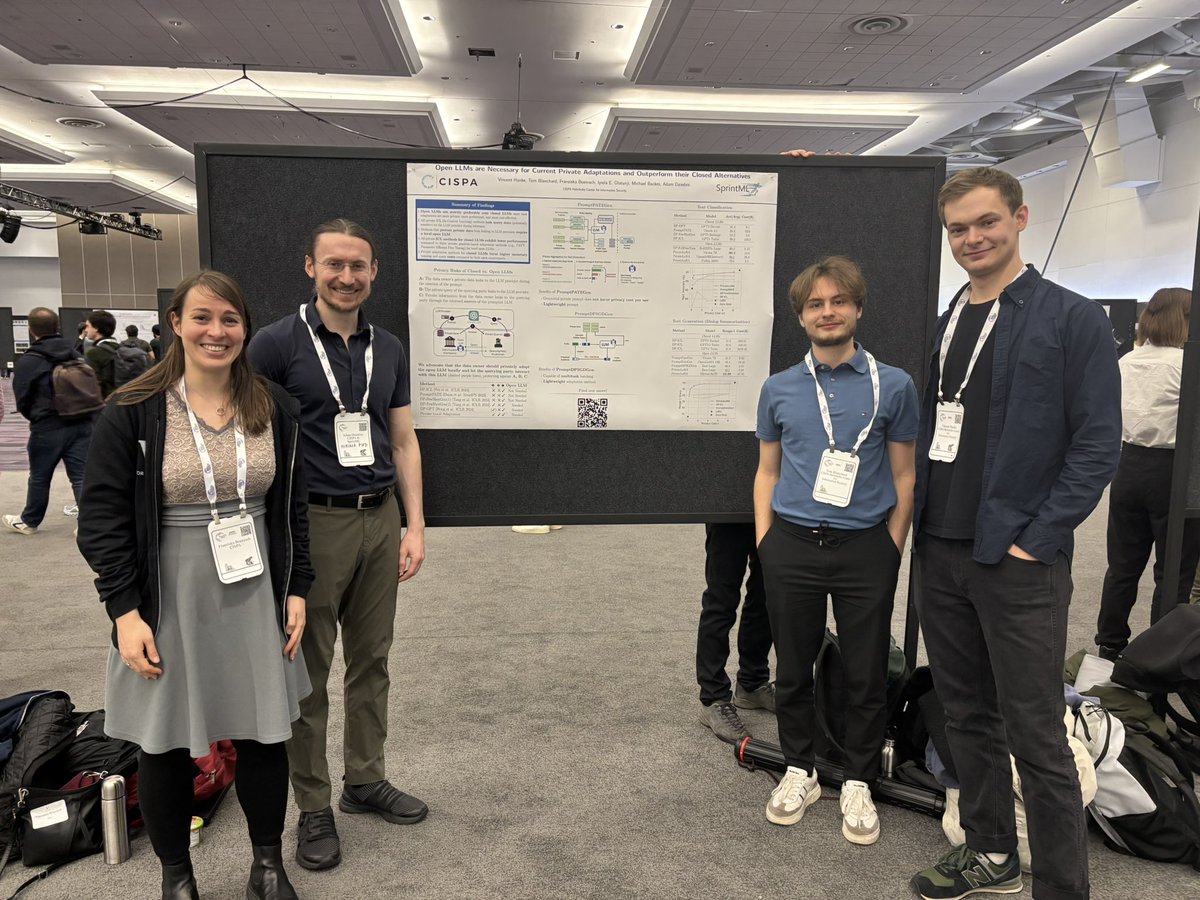

We’re getting started in the East Hall #2204 talking about Open vs Closed #LLMs and their adaptations with #privacy. #NeurIPS2024 #private #tuning 🔒