SprintML

@SprintML

The SprintML lab does research on Secure, Private, Robust, INterpretable, and Trustworthy ML @CISPA. We are currently hiring PhDs! Visit us: http://sprintml.com

📜Also consider submitting your papers. ‼️ We extended our deadline to **May 25th, 2025**, 11:59 PM (AoE). ‼️

Super excited to announce the second batch of speakers for our DIG-BUG's workshop on #reliable and #trustworthy #GenerativeAI at #ICML’25! Looking forward to @tatsu_hashimoto, @RICEric22 and Ivan Evtimov! Hope to see all of you there.

Today @fraboeni and @adam_dziedzic kicked off the new semester with a full lecture hall. 👩🎓If you also want to learn about #Trustworthy #MachineLearning, we invite you to follow our lecture online: youtube.com/playlist?list=…! #teaching #university #students #semester

Very interesting insights: Image AutoRegressive Models might be better and faster than Diffusion models BUT they leak more #privacy! If you want to learn more about these trade-offs, check out our latest insights!

🚨 Image AutoRegressive Models Leak More Training Data Than Diffusion Models🚨 IARs — like the #NeurIPS2024 Best Paper — now lead in AI image generation. But at what risk? IARs: 🔍 Are more likely than DMs to reveal training data 🖼️ Leak entire training images verbatim 🧵 1/

A truly great outcome for a summer internship in our SprintML lab. If you are interested in working on these kinds of cool projects with us this summer, please apply: 🖇️ sprintml.com/positions/

🔥 New ICLR 2025 Paper! It would be cool to control the content of text generated by diffusion models with less than 1% of parameters, right? And how about doing it across diverse architectures and within various applications? 🚀 🫡 Together with @lukxst, we show how: 🧵 1/

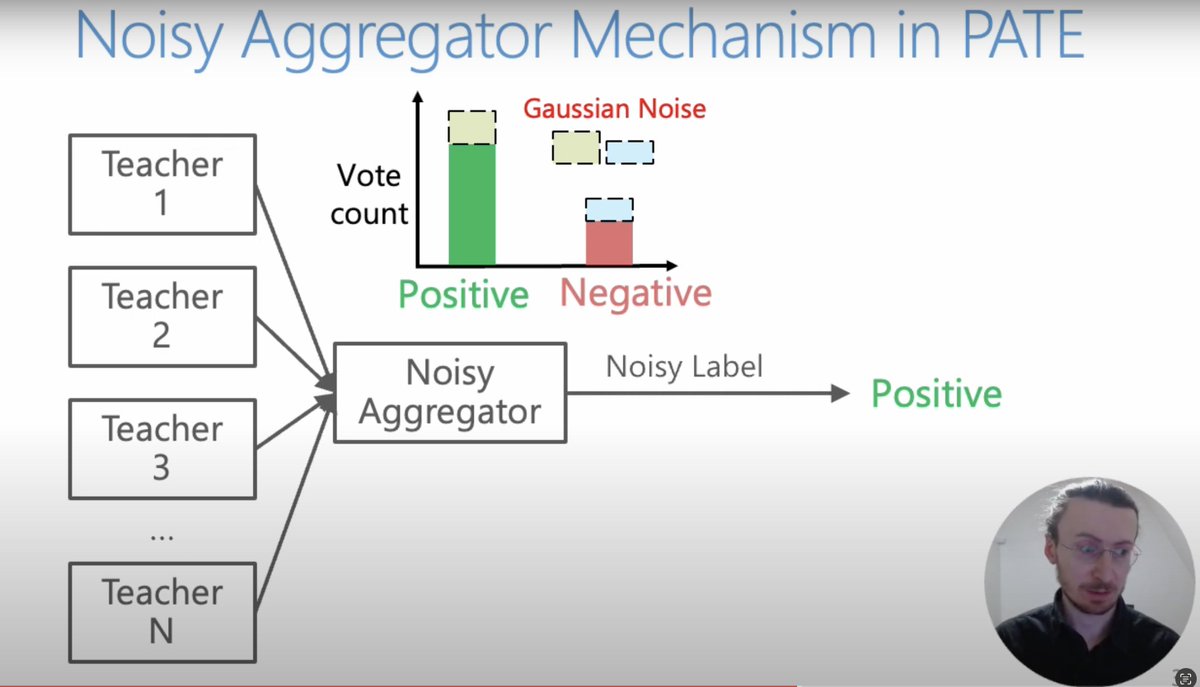

Have you ever wondered how you can train an #ML #model with #privacy guarantees? In our lecture on #DifferentiallyPrivate ML, we cover the two canonical algorithms: #DPSGD and #PATE. We discuss their principles, privacy analysis and accounting, and effects: youtube.com/watch?v=0wbN0C…

Did you know that the predictions of an #ML model can give away #private information of the model’s training data? We cover the problem of #privacy leakage in our new lecture on #trustworthy ML and introduce you to #DifferentialPrivacy: youtube.com/watch?v=Jpc-i4…

Wanna learn about #trustworthiness of #MachineLearning? @fraboeni and @adam_dziedzic created a new lecture series about trustworthy #ML. Follow every week as we talk about #privacy, #confidentiality, #robustness, #fairness, #bias, #explainability: youtube.com/playlist?list=…

Very exciting for those of you who are still around at @iclr_conf: @NicolasPapernot is presenting the most recent exciting projects of his lab in A3!! #llms #privacy #unlearning #censorship

Find us at #148 to chat about our paper „Memorization in SSL improves downstream generalization“ @iclr_conf this afternoon at 4:30!

Want to know what's happening at @satml_conf today? Luckily we have @thegautamkamath of @UWaterloo on the ground reporting for us! First up: this morning's session on privacy chaired by @fraboeni feat. work by @FerryJulien12, @hjy836, and others. A thread... 🧵#SaTML2024 (1/5)

Happy to share your recently accepted #ICLR2024 papers on #arxiv, but spending hours and hours cleaning out comments, compiling, and re-compiling? You don't have to - just follow the simple guide we compiled for our #PhD #students 👇 sprintml.com/phdlife/2023-0…

Check @adam_dziedzic's latest talk on differential privacy for prompting LLMs: youtu.be/GYg8a6X3yvc?si… It covers our latest paper from #NeurIPS2023 adam-dziedzic.com/static/assets/… We propose to privately learn to prompt and show how to create private discrete and continuous/soft prompts

Visit us this afternoon at 5PM at poster #1619 at @NeurIPSConf to talk about #privacy in #LLM prompting!

🔓Eager for some Privacy-Preserving Machine Learning before Christmas? 🎄 🎓Join us on Dec. 21 for a double research seminar with @fraboeni and @adam_dziedzic of @CISPA @SprintML ideas-ncbr.pl/en/events/mach…

Visit us at @NeurIPSConf - #1617 - work done with @jan_dubinski_ Stanisław @fraboeni @tomasztrzcinsk1 @adam_dziedzic !

Visit our poster, currently displayed at @NeurIPSConf #1617. Excited to discuss actively defending SSL models with you.

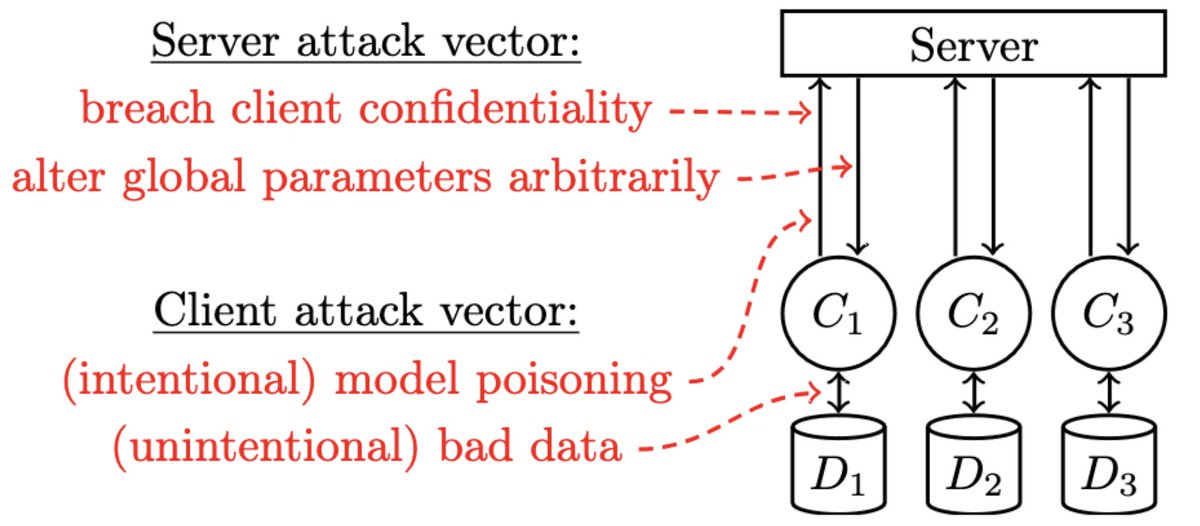

We propose the first peer-to-peer (P2P) learning scheme that is secure against malicious servers and robust to malicious clients. Our generic framework transforms any algorithm for robust aggregation of model updates to tackle these vulnerabilities: neurips.cc/virtual/2023/p…

Our #sprintml group presents 3 talks at @MLinPL today! @fraboeni talks about #privacy in federated learning #FL, @adam_dziedzic presents our work on differentially private prompts for LLMs, & Janek Dubiński introduces our new active defense against encoder stealing. Join us!