Federico Cassano

@ellev3n11

training @cursor_ai prev @neu_prl, @scale_AI, @Roblox, @trailofbits

been a @system76 customer for many years. what is this ID verification i have to do to buy a laptop... seems a bit insane

Does anyone know someone who loves Slurm, lots of GPUs, and despises Kubernetes? Would love to chat with them

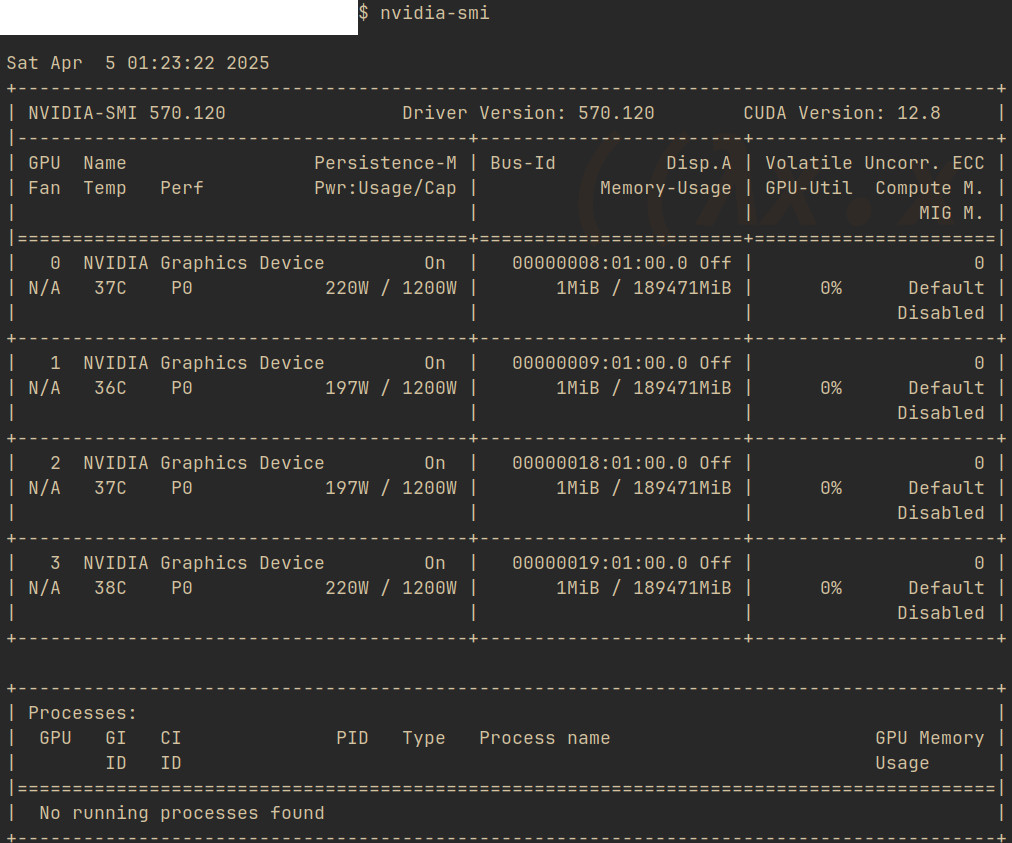

the four blackwells in a GB200 node when the CPU isn’t bothering them (they’re all replaying CUDA graph captures)

careful when updating transformers. the new version puts the chat template in a new file in the model directory instead of tokenizer_config.json; big breaking change
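a rough sketch of how loading code could tolerate both layouts: look for a standalone template file first, then fall back to the `chat_template` key in tokenizer_config.json. the file name `chat_template.jinja` and the helper `find_chat_template` are assumptions for illustration (the post only says "some new file"), so check what your transformers version actually writes.

```python
import json
from pathlib import Path
from typing import Optional

# Assumption: the standalone template file is named "chat_template.jinja".
# The post only says "some new file", so adjust this if your version differs.
STANDALONE_TEMPLATE_NAME = "chat_template.jinja"


def find_chat_template(model_dir: str) -> Optional[str]:
    """Return the chat template text from a model directory, or None if absent.

    Hypothetical helper, not part of the transformers API.
    """
    root = Path(model_dir)

    # Newer layout: template stored as its own file next to the tokenizer files.
    standalone = root / STANDALONE_TEMPLATE_NAME
    if standalone.is_file():
        return standalone.read_text(encoding="utf-8")

    # Older layout: template embedded under the "chat_template" key.
    config_path = root / "tokenizer_config.json"
    if config_path.is_file():
        config = json.loads(config_path.read_text(encoding="utf-8"))
        template = config.get("chat_template")
        if isinstance(template, str):
            return template

    # Neither layout has a template.
    return None
```

returning None when neither location has a template lets the caller fail loudly instead of silently formatting prompts without one.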

Our models have lower loss and higher MFU because of BugBot

BugBot has saved me from so many bugs that missed human review, people should try it! I found it to be especially useful for figuring out buggy edge cases in complex logic.

Cursor 1.0 is out now! Cursor can now review your code, remember its mistakes, and work on dozens of tasks in the background.

my rl codebase is multi-step, multi-objective, multi-reward, multi-environment, btw

A conversation on the optimal reward for coding agents, infinite context models, and real-time RL

not bullish on the diffusion models. they are much more expensive to train; only give benefits on decode speed. the GB200 NVL72 + distributed GEMMs + speculation will just solve decode bottleneck for big AR models.

Introducing PCCL, the Prime Collective Communications Library — a low-level communication library built for decentralized training over the public internet, with fault tolerance as a core design principle. In testing, PCCL achieves up to 45 Gbit/s of bandwidth across datacenters…

when you deploy the randomly-initialized weights

Just moments ago, a robot in a lab suddenly went berserk, marking the first robot rebellion in human history.

Cursor writes almost 1 billion lines of accepted code a day. To put it in perspective, the entire world produces just a few billion lines a day.

has someone built a better pytorch memory_viz? the one at pytorch.org/memory_viz crashes with large snapshots and has weird UI bugs that hide the stack trace

"petabyte-scale" sounds funny now that storage is super cheap

Incredibly excited to work with Sasha!

Some personal news: I recently joined Cursor. Cursor is a small, ambitious team, and they’ve created my favorite AI systems. We’re now building frontier RL models at scale in real-world coding environments. Excited for how good coding is going to be.