Dane Malenfant

@dvnxmvl_hdf5

MSc. Computer Science @Mila_Quebec & @mcgillu in the LiNC lab | Currently distracted with multi-agent RL and neuroAI | Restless | Ēka ē-akimiht

Preprint Alert 🚀 Multi-agent reinforcement learning (MARL) often assumes that agents know when other agents cooperate with them. But for humans, this isn’t always true. For example, Plains Indigenous groups used to leave resources for others to use at effigies called Manitokan. 1/8

Having competed in the IMO, I find the generalist training this work provides to be its most incredible aspect. 🧙‍♂️ No more rabbithole specialization as we did in high school, thinking specifically about olympiad problem solving 🤔, eating and sleeping olympiad problems.…

1/N I’m excited to share that our latest @OpenAI experimental reasoning LLM has achieved a longstanding grand challenge in AI: gold medal-level performance on the world’s most prestigious math competition—the International Math Olympiad (IMO).

🚀Introducing Hierarchical Reasoning Model🧠🤖 Inspired by brain's hierarchical processing, HRM delivers unprecedented reasoning power on complex tasks like ARC-AGI and expert-level Sudoku using just 1k examples, no pretraining or CoT! Unlock next AI breakthrough with…

🚨New workshop alert 🚨 Calling all RL researchers in the New York area 🧠🗽 Present your work at the first-ever New York Reinforcement Learning Workshop (NYRL), co-organized by Amazon, Columbia Business School & NYU Tandon School of Engineering. ny-rl.com

Our team at @GoogleDeepMind is looking to hire a talented new Research Scientist! Our group (under @edchi) aims to push the frontier of AI-human interactions through personalization of LLMs and deeply understanding the open-ended nature of user intentions. Beneath this lies…

After living in Montréal for 7 years, I’ve always really liked being able to walk at night

Welcome to Prince Albert.

Ethan is spot on. Sadly, widespread hostility toward AI in the humanities means they miss opportunities to shape the tools, platforms, and debates of tomorrow. That reluctance only deepens the marginalisation of cultural and historical knowledge in our society.

Don't leave AI to the STEM folks. They are often far worse at getting AI to do stuff than those with a liberal arts or social science bent. LLMs are built from the vast corpus of human expression, and knowing the history & obscure corners of human works lets you do far more with AI

🔥🚨 Preprint alert: Relative Entropy Pathwise Policy Optimization #REPPO 🚨🔥 What if you could have on-policy training without the instability and parameter tuning that plagues #PPO? What if training with deterministic policy gradient just worked? With our new method it does!

As the field moves towards agents doing science, the ability to understand novel environments through interaction becomes critical. AutumnBench is an attempt at measuring this abstract capability in both humans and current LLMs. Check out the blog post for more insights!

We’re proud to announce the launch of AutumnBench, an open-source benchmark developed on our Autumn platform. This benchmark, led by our MARA team, provides a novel platform for evaluating world modeling and causal reasoning in both human and artificial intelligence.

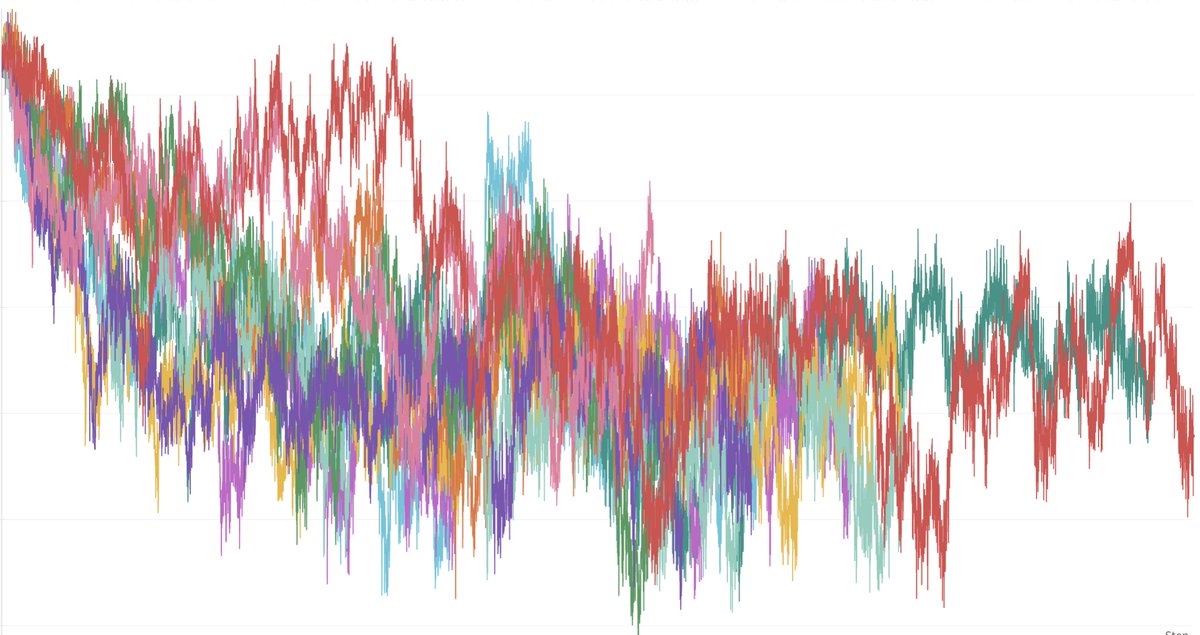

I really like how @weights_biases plots the KL in multi-agent PPO

That’s a wrap on the Indigenous AI Gathering 2025. Over two days, Indigenous knowledge keepers, technologists, youth, and allies came together to chart new paths in AI inclusive of Indigenous ethics and perspectives. Thank you for being part of this vital conversation. With…

Recently went on a podcast to talk about consciousness and AI. Very timely given how common interactions with AI systems are becoming, and how convincingly "human" LLMs can appear to be in our conversations with them. Was a fun conversation - check it out! youtu.be/C7kGlnVWHjQ

🎉Personal update: I'm thrilled to announce that I'm joining Imperial College London @imperialcollege as an Assistant Professor of Computing @ICComputing starting January 2026. My future lab and I will continue to work on building better Generative Models 🤖, the hardest…

Excited to announce our recent work on low-precision deep learning via biologically-inspired noisy log-normal multiplicative dynamics (LMD). It allows us to train large neural nets (such as GPT-2 and ViT) in FP6. arxiv.org/abs/2506.17768

Even simple tasks like sharing objects for others can be hard to learn

"Just train the AI models to be good people" might not be sufficient when it comes to more powerful models, but it sure is a dumb step to skip.

What does it mean when I read IRL as inverse reinforcement learning instead of in real life?

In our latest paper, we discovered a surprising result: training LLMs with self-play reinforcement learning on zero-sum games (like poker) significantly improves performance on math and reasoning benchmarks, zero-shot. Whaaat? How does this work? We analyze the results and find…

We've always been excited about self-play unlocking continuously improving agents. Our insight: RL selects generalizable CoT patterns from pretrained LLMs. Games provide perfect testing grounds with cheap, verifiable rewards. Self-play automatically discovers and reinforces…