Chi Zhang

@dr_chizhang

Assistant Professor@AGI Lab, Westlake University; PhD@NTU

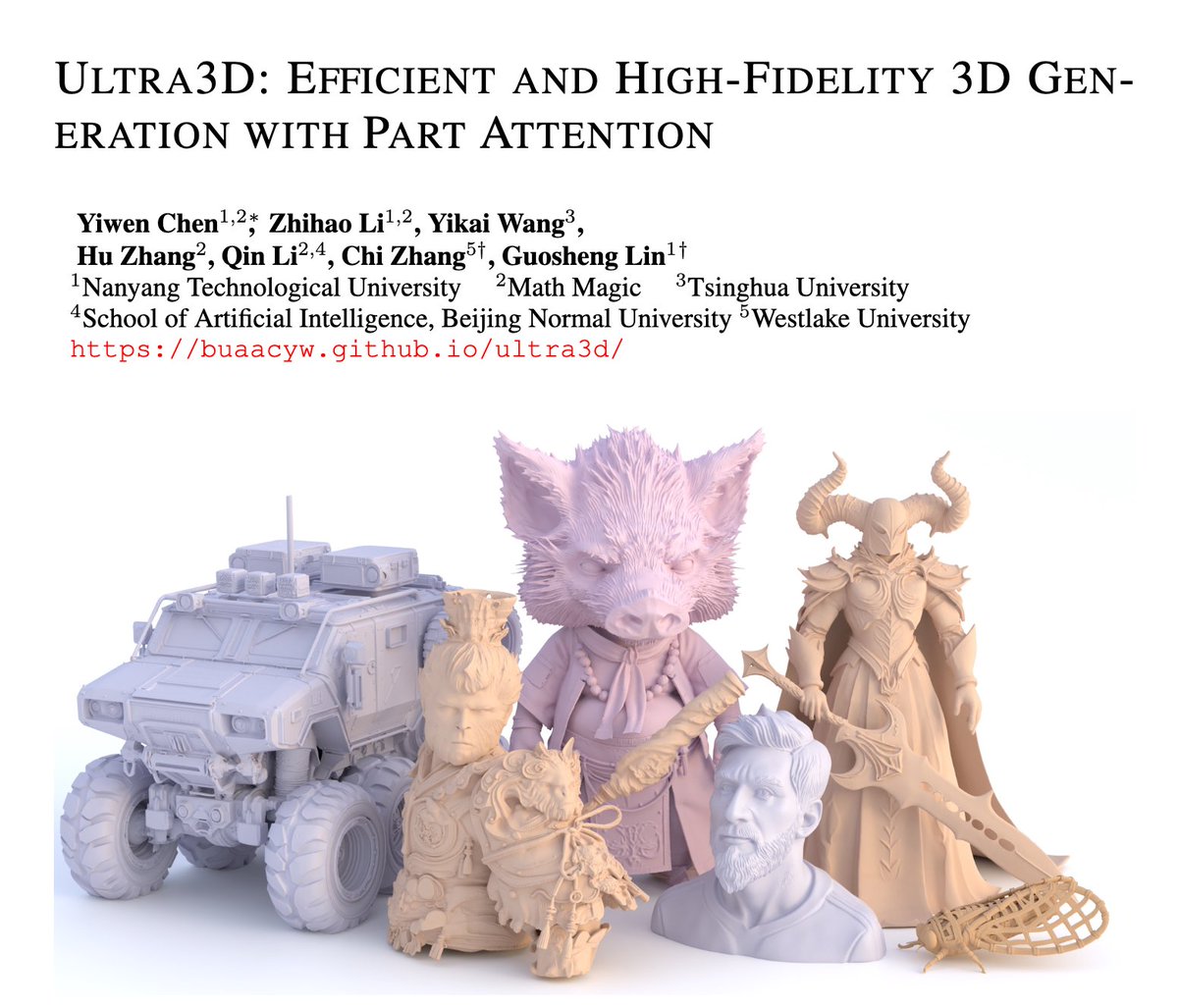

🚀 Excited to share Ultra3D — our latest research on high-resolution 3D asset generation! Achieves SOTA quality with ultra-detailed geometry & texture. Check it out on HuggingFace Papers 🧠👇 huggingface.co/papers/2507.17…

Westlake University releases AppAgentX: a self-evolving AI agent that makes phone operation more efficient! Westlake University Launches AppAgen... youtube.com/shorts/K3YgtBh… via @YouTube

Recently, the AGI Lab at Westlake University in China introduced #AppAgentX, a GUI agent with self-evolution capabilities. By continuously learning and optimizing its behavior during task execution, it enhances operational efficiency. Experimental results show that AppAgentX…

Distill-Any-Depth: A New SOTA Monocular Depth Estimation Model Achieves Unprecedented Performance agientry.com/blog/380

A lighter depth estimation model than yesterday's (the base version of Distill-Any-Depth). But it is still heavy when run together with TDPT.

🚀 Introducing AppAgent X – the next generation of AppAgent, a self-evolving GUI agent that gets smarter and more efficient over time! 🔓 Open-sourced with a Gradio interface! 📂 Check out our code & models at: appagentx.github.io Paper: huggingface.co/papers/2503.02…

13. Distill Any Depth: Distillation Creates a Stronger Monocular Depth Estimator 🔑 Keywords: Monocular Depth Estimation, Cross-Context Distillation, depth normalization, pseudo-labels, multi-teacher framework 💡 Category: Computer Vision 🌟 Research Objective: The paper aims…

🚀 The AGI Lab at Westlake University is currently recruiting international PhD students and summer research interns to work on cutting-edge research in Generative AI and Multimodal AI. Feel free to email me for more details!

thanks @_akhaliq for sharing our work

Distill Any Depth: Distillation Creates a Stronger Monocular Depth Estimator. A SOTA depth estimation model trained with a knowledge distillation algorithm that efficiently distills knowledge from multiple teacher models to achieve superior performance.

🚀 Excited to share our new Awesome repo 📚! We've curated a collection of literature on 「diffusion-based style transfer」 to support and accelerate research in this exciting area. Check it out and contribute! 👉github.com/Westlake-AGI-L… #Airdrop #StyleTransfer #DiffusionModels