Mcqueen42

@McQueenFu

Building new projects.

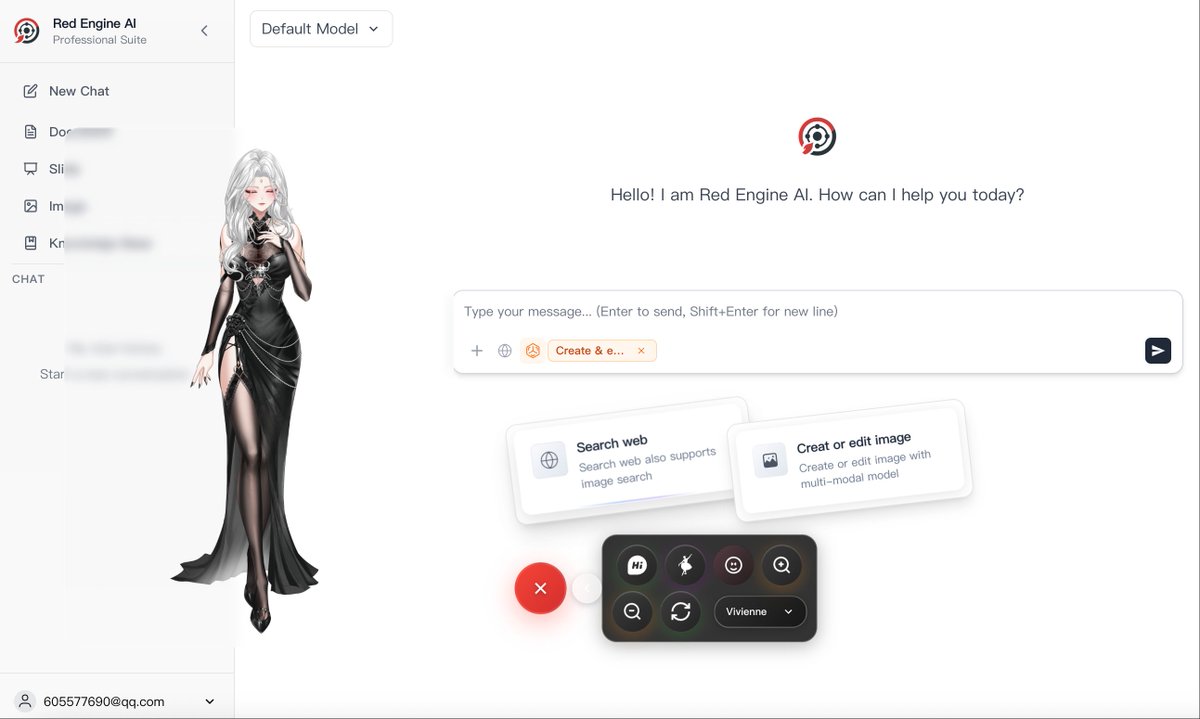

Added a new character, Vivienne, to redengine(.)ai. Hope you like her.

Good to know ComfyUI supports Wan2.2 so quickly. Ship fast!

Wan2.2 is now natively supported in ComfyUI on Day 0! 🔹 A next-gen video model with MoE (Mixture of Experts) architecture with dual noise experts, under Apache 2.0 license! - Cinematic-level Aesthetic Control - Large-scale Complex Motion - Precise Semantic Compliance 📚…

Great work, 3D scene generation made easy.

🕹️ HunyuanWorld 1.0: Generating Immersive, Explorable, and Interactive 3D Worlds from Words or Pixels 🌌 Jupyter Notebook 🥳 I added quantization to HunyuanWorld 1.0, and it now runs with 24GB of VRAM 🥳 Thanks to Tencent Hunyuan - HunyuanWorld Team ❤ 🧬code:…

Cool. How does this differ from OpenPose?

Mediapipe is cool, but @ultralytics YOLO Pose is on another level 🔥🔥🔥 I ran a quick comparison between mediapipe and YOLO pose, and while both have their strengths, Ultralytics YOLO11 blew me away. Raw video credit: @nvidia Team 😀 Details 👇 #ai #research #google #pose

It's cool, but it looks slow and expensive.

Local voice AI with a 235 billion parameter LLM. ✅ - smart-turn v2 - MLX Whisper (large-v3-turbo-q4) - Qwen3-235B-A22B-Instruct-2507-3bit-DWQ - Kokoro All models running local on an M4 mac. Max RAM usage ~110GB. Voice-to-voice latency is ~950ms. There are a couple of…

impressive

MASSIVE claim in this paper. AI Architectural breakthroughs can be scaled computationally, transforming research progress from a human-limited to a computation-scalable process. So it turns architecture discovery into a compute‑bound process, opening a path to…

This is a really creative way to use the Wan video model.

⚡️ THIS IS INSANE! ✅ IF you set the 「Number of Frames」to 1 in Draw Things, 😎 The Wan 2.1 video model will act as an image model! The results? Amazingly good! 👇🏻More information in the comments!

What a wonderful world: SOTA 3D models keep getting better, and we support open source.

We're thrilled to release & open-source Hunyuan3D World Model 1.0! This model enables you to generate immersive, explorable, and interactive 3D worlds from just a sentence or an image. It's the industry's first open-source 3D world generation model, compatible with CG pipelines…

Do you vibe code on weekends? A tip for you: code agents run faster on weekends, and you know why.

AI workflow products are just low-code frameworks for the AI era: you can build prototypes with them, but the results are mostly not production-ready.

Can someone give me some wonderful use cases of MCP? I am wondering if I should integrate it or not.

Great, we got a new SOTA open-source thinking model.

🚀 We’re excited to introduce Qwen3-235B-A22B-Thinking-2507 — our most advanced reasoning model yet! Over the past 3 months, we’ve significantly scaled and enhanced the thinking capability of Qwen3, achieving: ✅ Improved performance in logical reasoning, math, science & coding…

This is really cool. Can I add rigging or bones to the model?

🎨 3D Texture Editing is Now as Easy as Drawing! 🌀 Our new tool lets you: - Generate and preview textures from ANY angle (just type what you want) - Fix imperfections in real-time as you orbit - Time-travel through version history Try Meshy now 👉🏻 meshy.ai/?utm_source=tw…

Interesting, but it's a closed-source project.

We just discovered the 🔥 COOLEST 🔥 trick in Flow that we have to share: Instead of wordsmithing the perfect prompt, you can just... draw it. Take the image of your scene, doodle what you'd like on it (through any editing app), and then briefly describe what needs to happen…