Diego Calanzone

@diegocalanzone

« artificia docuit fames » / PhD student @Mila_Quebec, curr. tackling ⚙️ RL & 🧪 drug design / 🏛️ AI grad @UniTrento

🧵 Everyone is chasing new diffusion models, but what about the representations they model? We introduce Discrete Latent Codes (DLCs):
- Discrete representations for diffusion models
- SOTA unconditional generation FID (1.59 on ImageNet)
- Compositional generation
- Integration with LLMs 🧱

Good points. The inherent constraints of the competition are part of the outcome and should be considered. Though Terence didn't mention that human competitors run on a negligible fraction of the energy ;)

Terence Tao on OpenAI's claimed gold medal at the IMO

We blend imitation (SFT) and exploration (RLVR) in post-training with a simple idea: sample a prefix of an SFT demonstration, let your policy model complete it, and mix it with other RLVR rollouts. Intuitively, the model relies more on these hints for problems currently out of its reach.

🚀 Introducing Prefix-RFT to blend SFT and RFT! SFT can learn harder problems by imitation but may generalize poorly. RFT has better overall performance but is limited by the initial policy. Our method, Prefix-RFT, gets the best of both worlds!
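A minimal sketch of the prefix-mixing step described above, in plain Python. The `Policy` interface, the helper names, the uniform choice of cut point, and the one-guided-rollout-per-group ratio are all assumptions made for illustration, not Prefix-RFT's actual implementation; a toy policy stands in for the model.

```python
import random
from typing import Callable, List

Tokens = List[str]
# Hypothetical policy interface: (prompt, forced_prefix) -> generated continuation.
Policy = Callable[[str, Tokens], Tokens]


def sample_prefix(demo: Tokens, rng: random.Random) -> Tokens:
    """Cut an SFT demonstration at a sampled length.

    Assumption: the cut point is uniform in [1, len(demo) - 1], so the
    policy always has at least one token left to complete.
    """
    if len(demo) < 2:
        return []
    cut = rng.randint(1, len(demo) - 1)  # inclusive on both ends
    return demo[:cut]


def build_rollout_group(prompt: str, demo: Tokens, policy: Policy,
                        n_rollouts: int, rng: random.Random) -> List[Tokens]:
    """Mix n_rollouts - 1 plain on-policy rollouts with one prefix-guided rollout."""
    # Ordinary RLVR rollouts: the policy generates from scratch (exploration).
    group = [policy(prompt, []) for _ in range(n_rollouts - 1)]
    # Prefix-guided rollout: demonstration prefix (imitation) + policy completion.
    prefix = sample_prefix(demo, rng)
    group.append(prefix + policy(prompt, prefix))
    return group


def toy_policy(prompt: str, prefix: Tokens) -> Tokens:
    """Stand-in for a real model: always emits the same short answer."""
    return ["<answer>", "<eos>"]


rng = random.Random(0)
demo = ["step1", "step2", "step3", "<answer>", "<eos>"]
group = build_rollout_group("2+2?", demo, toy_policy, n_rollouts=4, rng=rng)
```

Scoring the group with a verifier and the policy update itself are left out; the sketch only shows how a demonstration prefix plus the policy's own completion ends up in the same batch as ordinary rollouts.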

partially brewed at Mila

Google DeepMind just dropped a new LLM architecture called Mixture-of-Recursions. It gets 2x faster inference, fewer training FLOPs, and ~50% less KV cache memory. Really interesting read. It has the potential to be a Transformer killer.

This is an issue on multiple levels, and authors using those "shortcuts" 👀 are equally responsible for this unethical behaviour.

To clarify: I didn't mean to shame the authors of these papers. The real issue is AI reviewers; what we see here is just authors trying to defend against them in some way (the proper way would be to identify poor reviews and ask the AC or meta-reviewer to discard them).

A comprehensive overview of Hierarchical RL!

As AI agents face increasingly long and complex tasks, decomposing them into subtasks becomes ever more appealing. But how do we discover such temporal structure? Hierarchical RL provides a natural formalism, yet many questions remain open. Here's our overview of the field 🧵

A cautionary lesson on using AI to write papers: this alleged response to the (dubious) "Illusion of Thinking" paper is full of mathematical errors arxiv.org/abs/2506.09250

Two years in the making, we finally have 8 TB of openly licensed data with document-level metadata for authorship attribution, licensing details, links to original copies, and more. Hugely proud of the entire team.

Can you train a performant language model without using unlicensed text? We are thrilled to announce the Common Pile v0.1, an 8TB dataset of openly licensed and public domain text. We train 7B models for 1T and 2T tokens and match the performance of similar models like LLaMA 1 & 2.

The language modeling version

A cautionary tale for anyone eagerly diving into RL training. LLMs are a bit more forgiving, but only so much. You should still do it, though.

Giving your models more time to think before predicting, e.g. via smart decoding, chain-of-thought reasoning, or latent thoughts, turns out to be quite effective for unblocking the next level of intelligence. New post is here :) "Why we think": lilianweng.github.io/posts/2025-05-…

The beauty of rethinking architectures

Introducing Continuous Thought Machines. New blog: sakana.ai/ctm/ Modern AI is powerful, but it's still distinct from human-like flexible intelligence. We believe neural timing is key. Our Continuous Thought Machine is built from the ground up to use neural dynamics as…

Presenting shortly! 👉🏼 Mol-MoE: leveraging model merging and RLHF for test-time steering of molecular properties. 📆 today, 11:15am to 12:15pm 📍 Poster session #1, GEM Bio Workshop @gembioworkshop @sparseLLMs #ICLR #ICLR2025

In Mol-MoE, we propose a framework to train router networks that reuse property-specific molecule generators. This allows personalizing drug generation at test time by following property preferences! We also discuss some challenges. @proceduralia @pierrelux arxiv.org/abs/2502.05633
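A hypothetical sketch of preference-conditioned routing over property-specific experts, in plain Python: a linear router maps property preferences to softmax mixing weights, which then average the experts' flattened parameters. The router form, whole-model parameter averaging, and all names and numbers are assumptions for illustration; the post does not describe Mol-MoE's architecture at this level, and in Mol-MoE the routers are trained, whereas here they are fixed toy values.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def route(preferences: Sequence[float],
          router_weights: Sequence[Sequence[float]]) -> List[float]:
    """Hypothetical linear router: one logit per expert = dot(row, preferences)."""
    logits = [sum(w * p for w, p in zip(row, preferences)) for row in router_weights]
    return softmax(logits)


def merge_experts(expert_params: Sequence[Sequence[float]],
                  mix: Sequence[float]) -> List[float]:
    """Weighted average of flattened expert parameter vectors."""
    n_params = len(expert_params[0])
    return [sum(m * params[i] for m, params in zip(mix, expert_params))
            for i in range(n_params)]


# Toy flattened parameters for two hypothetical property-specific experts.
experts = [[1.0, 0.0, 2.0], [3.0, 4.0, 0.0]]
# Toy router: one row per expert, one column per property preference.
router = [[2.0, 0.0], [0.0, 2.0]]

balanced_mix = route([0.5, 0.5], router)     # equal preference for both properties
merged = merge_experts(experts, balanced_mix)
skewed_mix = route([1.0, 0.0], router)       # prefer the first property only
```

Changing the preference vector at test time changes the mixing weights, and thus the merged generator, without retraining any expert.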

@PaglieriDavide presenting BALROG to an awesome crowd, ft. @CULLYAntoine. Come here to see how frontier models perform in open-ended gaming environments!

If you are at @iclr_conf and interested in making your RLHF really fast, come find @mnoukhov and me at poster #582.

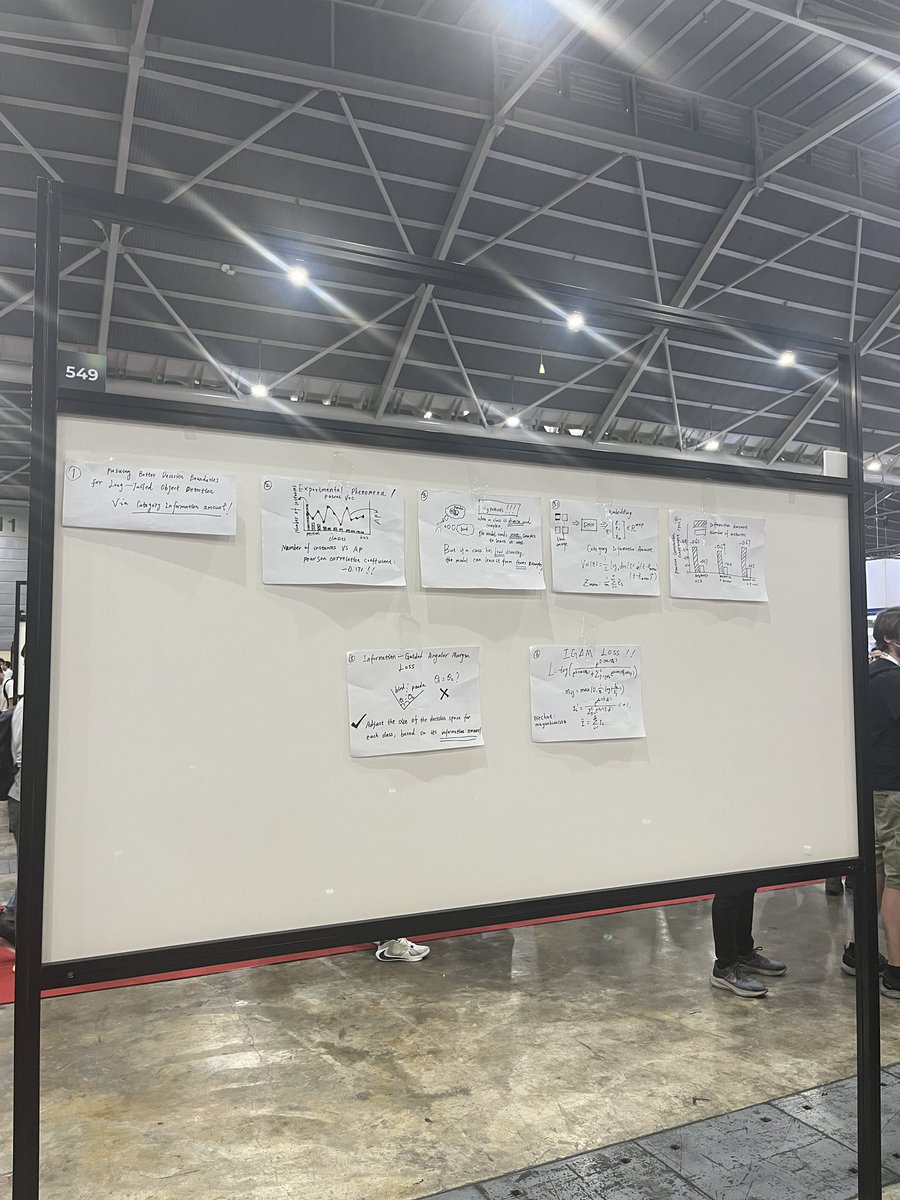

Imagine an anonymous poster consisting of handwritten pages with methodology and results. “What matters is spreading the idea” #ICLR

Happening now in Hall 3, booth 223!

In LoCo-LMs, we propose a neuro-symbolic loss function to fine-tune an LM so that it acquires logically consistent knowledge from a domain graph, i.e. w.r.t. a set of logical consistency rules. @looselycorrect @tetraduzione arxiv.org/abs/2409.13724
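A minimal sketch of one common neuro-symbolic consistency loss (a semantic loss) for implication rules, in plain Python. It assumes the LM's beliefs in each statement are given as independent truth probabilities; the function names, the rule format, and the example facts are hypothetical, and this is not claimed to be the exact LoCo-LMs objective. For a rule A → B, the only violating assignment is A true and B false, so P(rule holds) = 1 − p_A(1 − p_B), and the loss is its negative log.

```python
import math
from typing import Dict, List, Tuple


def implication_semantic_loss(p_a: float, p_b: float, eps: float = 1e-12) -> float:
    """Semantic loss for A -> B, treating beliefs in A and B as independent.

    The rule is violated only when A is true and B is false, so
    P(rule holds) = 1 - p_a * (1 - p_b); the loss is -log of that probability.
    """
    p_sat = 1.0 - p_a * (1.0 - p_b)
    return -math.log(max(p_sat, eps))  # clamp to avoid log(0)


def consistency_loss(beliefs: Dict[str, float],
                     rules: List[Tuple[str, str]]) -> float:
    """Sum the implication loss over every (premise, conclusion) rule."""
    return sum(implication_semantic_loss(beliefs[a], beliefs[b]) for a, b in rules)


# Hypothetical beliefs an LM might assign to statements about one entity.
beliefs = {
    "bird(albatross)": 0.9,
    "animal(albatross)": 0.2,     # inconsistent: a bird should be an animal
    "has_wings(albatross)": 0.95,
}
rules = [
    ("bird(albatross)", "animal(albatross)"),
    ("bird(albatross)", "has_wings(albatross)"),
]
loss = consistency_loss(beliefs, rules)
```

Here most of the loss comes from the first rule, whose conclusion the model barely believes; minimizing it (in practice, in a differentiable framework over the LM's outputs) pushes the model toward beliefs that satisfy the rules.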