EleutherAI

@AiEleuther

A non-profit research lab focused on interpretability, alignment, and ethics of artificial intelligence. Creators of GPT-J, GPT-NeoX, Pythia, and VQGAN-CLIP

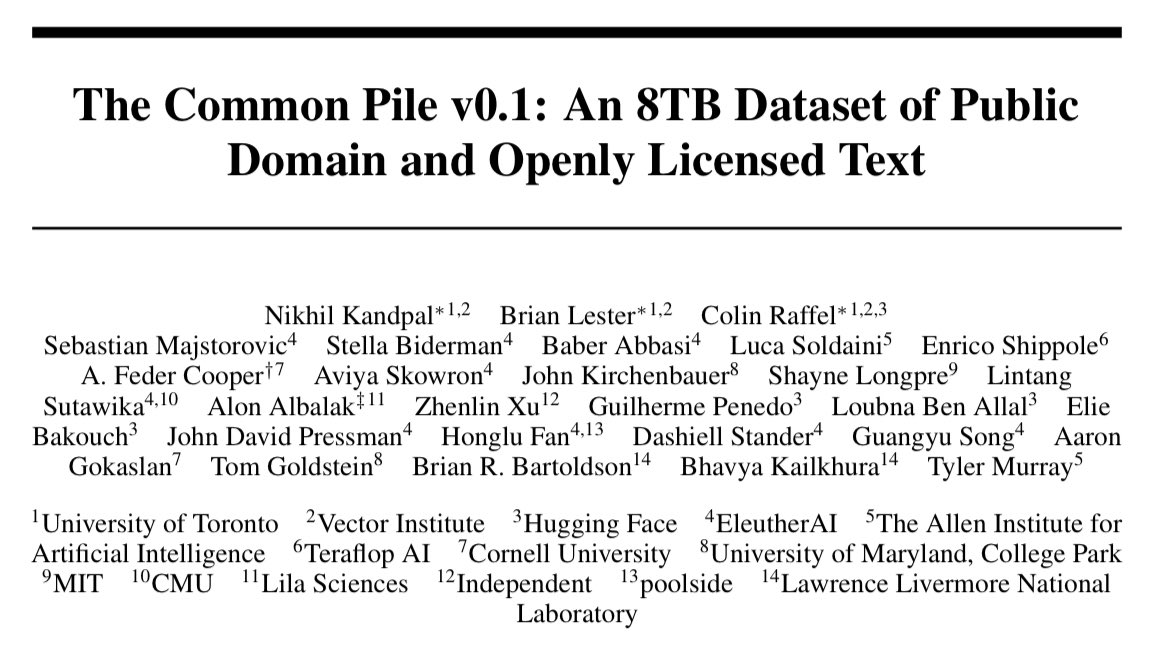

Can you train a performant language model without using unlicensed text? We are thrilled to announce the Common Pile v0.1, an 8TB dataset of openly licensed and public domain text. We train 7B models for 1T and 2T tokens and match the performance of similar models like LLaMA 1&2

Our head of HPC was the researcher from the recent @METR_Evals study on AI-assisted coding that saw the biggest (tied) productivity boost from using AI in his coding. Hear his account and lessons about using AI to code more effectively here

I was one of the 16 devs in this study. I wanted to speak on my opinions about the causes and mitigation strategies for dev slowdown. I'll say as a "why listen to you?" hook that I experienced a -38% AI-speedup on my assigned issues. I think transparency helps the community.

I’m in Vienna all week for @aclmeeting and I’ll be presenting this paper on Wednesday at 11am (Poster Session 4 in HALL X4 X5)! Reach out if you want to chat about multilingual NLP, tokenizers, and open models!

✨New pre-print✨ Crosslingual transfer allows models to leverage their representations for one language to improve performance on another language. We characterize the acquisition of shared representations in order to better understand how and when crosslingual transfer happens.

If you're into multilingual NLP, tokenization, or open models, definitely come say hi to @linguist_cat at @aclmeeting! Wednesday at 11am (Poster Session 4 in HALL X4 X5)

If you want to help us improve language and cultural coverage, and build an open source LangID system, please register for our shared task! 💬 Registering is easy! All the details are on the shared task webpage: wmdqs.org/shared-task/ Deadline: July 23, 2025 (AoE) ⏰

commoncrawl.org/blog/wmdqs-sha…

What can we learn from small models in the age of very large models? How can academics and low-compute orgs @BlancheMinerva will be speaking on a panel on these topics and more! Methods and Opportunities at Small Scale Workshop Sat 19 Jul, 16:00-16:45

MOSS is happening this Saturday (7/19) at West Ballroom B, Vancouver Center! We are excited to have an amazing set of talks, posters, and panel discussions on the insights from and potential of small-scale analyses. Hope to see a lot of you there! 💡

Really grateful to the organizers for the recognition of @magikarp_tokens and my work!

🏆 Announcing our Best Paper Awards! 🥇 Winner: "BPE Stays on SCRIPT: Structured Encoding for Robust Multilingual Pretokenization" openreview.net/forum?id=AO78C… 🥈 Runner-up: "One-D-Piece: Image Tokenizer Meets Quality-Controllable Compression" openreview.net/forum?id=lC4xk… Congrats! 🎉

Starting now!

The dream of SAEs is to be more interpretable proxies for LLMs, but even these more interpretable proxies can be challenging to interpret. In this work we introduce a pipeline for automatically interpreting SAE latents at scale using LLMs. Thu 17 Jul, 11:00 am-1:30 pm East…

SCRIPT-BPE coming to ICML next week!

We’re excited to share that two recent works from @Cohere and Cohere Labs will be published at workshops next week at @icmlconf in Vancouver! 🇨🇦 🎉Congrats to all researchers with work presented! @simon_ycl, @cliangyu_, Sara Ahmadian, @mziizm, @magikarp_tokens, @linguist_ca

MorphScore got an update! MorphScore now covers 70 languages 🌎🌍🌏 We have a new preprint out and we will be presenting our paper at the Tokenization Workshop @tokshop2025 at ICML next week! @marisahudspeth @brendan642