Riccardo De Santi

@desariky

Doctoral Fellow @ETH_AI_Center | prev. @UniofOxford, @imperialcollege | generative optimization and exploration for large-scale (scientific) discovery

This paper has won the Outstanding Paper Award at #ICML2022! 🎉 Thanks again to my collaborators @mirco_mutti and Professor Marcello Restelli for making this possible!

In the coming days (18-24 July), I will be attending #ICML2022 in Baltimore. We will present the paper “The Importance of Non-Markovianity in Maximum State Entropy Exploration” as a long oral. I worked on it during the last year of my Bachelor's. 1/N

We just released our ETH Zürich AI in the Sciences and Engineering Master’s course on YouTube! 📚 Prof. Siddhartha Mishra and I explain PINNs, neural operators, foundation models, neural PDEs, diffusion models, symbolic regression, and more! #AI4Science youtube.com/watch?v=LkKvhv…

When is it possible to achieve regret guarantees for RL in continuous state-action spaces? In our #icml2024 paper, we (try to) deal with this challenging open problem. arxiv.org/abs/2402.03792 @alberto_metelli @papinimat

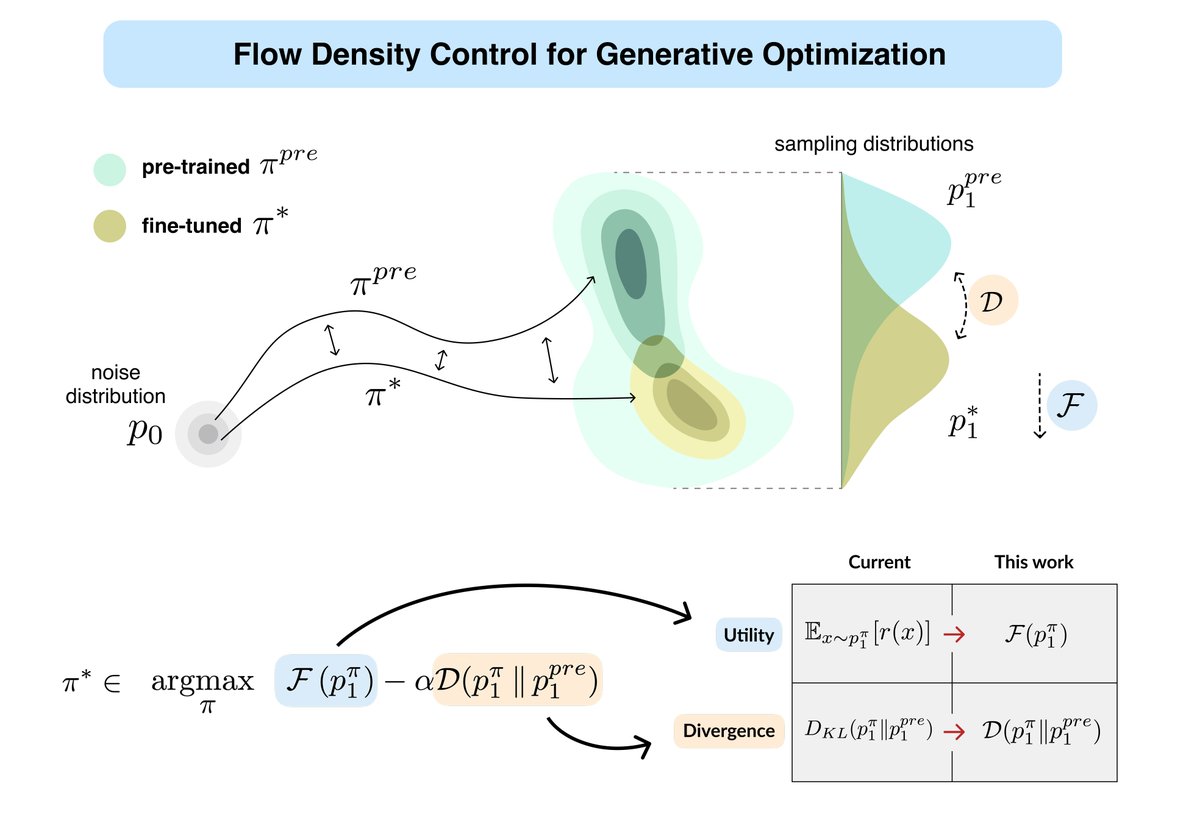

Over the last few years, we have learned how to control generative models via RL/control to maximize expected rewards under KL regularization. But for many applications, that’s not enough. Hence the question: can we control them further? Excited to present our ✨Oral✨ paper “Flow…

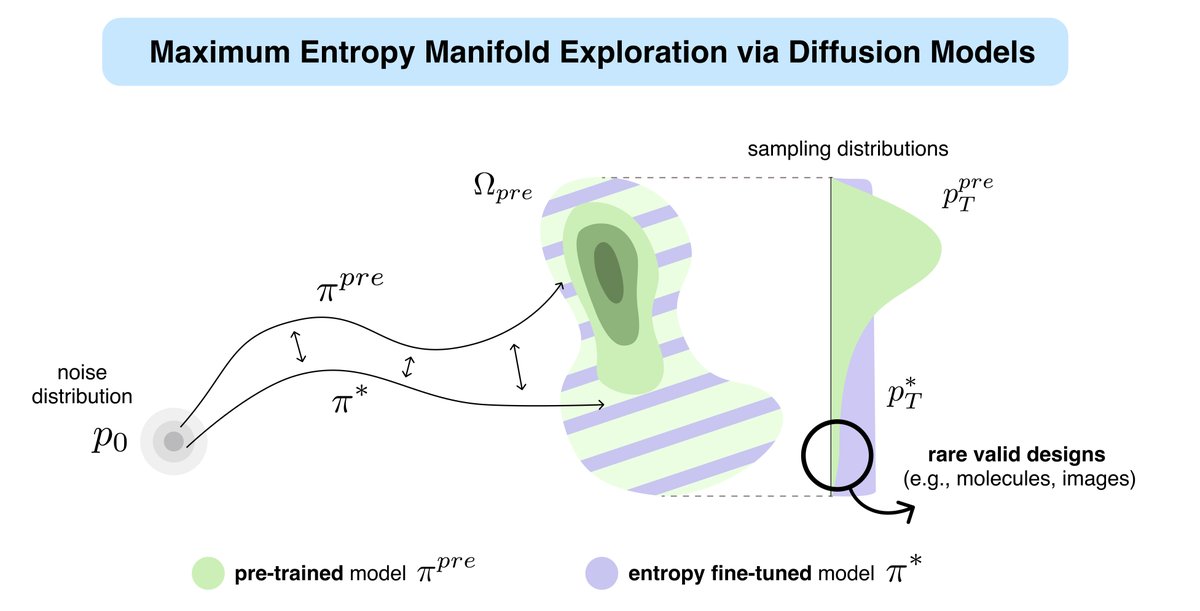

Generative models are great at mimicking data — but real (scientific) discovery requires going beyond it. Excited to present our paper “Provable Maximum Entropy Manifold Exploration via Diffusion Models” this Wednesday at ICML 2025! We propose a scalable, theoretically grounded…

Fully-funded #PhD in #ML at @EdinburghUni (@InfAtEd, @ancAtEd), in 𝐠𝐞𝐨𝐦𝐞𝐭𝐫𝐢𝐜 𝐥𝐞𝐚𝐫𝐧𝐢𝐧𝐠 and 𝐮𝐧𝐜𝐞𝐫𝐭𝐚𝐢𝐧𝐭𝐲 𝐪𝐮𝐚𝐧𝐭𝐢𝐟𝐢𝐜𝐚𝐭𝐢𝐨𝐧. Application deadline: 15 Dec '24. Starting Sep '25. Details in the reply. Please RT and share with anyone interested!

📣We have a tenure-track faculty opening in Responsible AI at @CSatETH: ethz.ch/en/the-eth-zur… @ETH_en is a vibrant environment for AI research with the @ETH_AI_Center etc. Please help spread the word!

I am hiring 3 postdocs at #KAUST to develop an Artificial Scientist for discovering novel chemical materials for carbon capture. Join this project with @FaccioAI at the intersection of RL and Material Science. Learn more and apply: faccio.ai/postdoctoral-p…

The final draft of @BachFrancis's excellent new learning theory book is available: di.ens.fr/~fbach/ He doesn't cover VC dimension, but builds up from regression to general smooth losses, which is heresy of course, but actually fills a much-needed niche. Highly recommended!

Periodic reminder that at my website you can find over 1000 pages of lecture notes on algorithms, data science, game theory, market and mechanism design, blockchain protocols, and more (see replies for link)

@arlet_workshop has just started! Come to the first floor to join us #ICML2024

Thrilled to share our 8 conference paper contributions to @icmlconf 2024 next week. Congrats to our doctoral fellows, postdoctoral fellows, and staff members involved: @afra_amini, @Manish8Prajapat, @JavierAbadM, @DonhauserKonst, @desariky, @ImanolSchlag, D. Dmitriev, E. Bamas

OK, time for some tweets about distances between Markov chains! Actually this is about a preprint we've just posted on arxiv with Sergio Calo, Anders Jonsson, Ludovic Schwartz & Javier Segovia-Aguas. FFO optimal transport & bisimulation. Let's dig in! arxiv.org/abs/2406.04056 1/n

Work on diffusion! 😜

If you are a student interested in building the next generation of AI systems, don't work on LLMs

I am not at #ICLR2024 but @mirco_mutti and @desariky will present our work (w/ @dr_amarx) tomorrow at 16:30! We show how to do posterior sampling in RL starting from a prior specified through a partial causal graph ➡️ more edges mean better statistical efficiency 🔥

I’ll spend the next 4 days at ICLR. Feel free to reach out if you’d like to chat! These days I’m obsessed with the interface between decision-making (RL/bandits) and diffusion models and its application in scientific discovery🧪

Experiment design, Bayesian optimization, active learning: all under one umbrella. The era of self-driving labs is here, and we need strategies for automatic information gathering! ML models are only as informed as the data they are trained on.