Daniel Eth (yes, Eth is my actual last name)

@daniel_271828

Researching effects of automated AI R&D | pro-America, pro-tech, & pro-AI safety

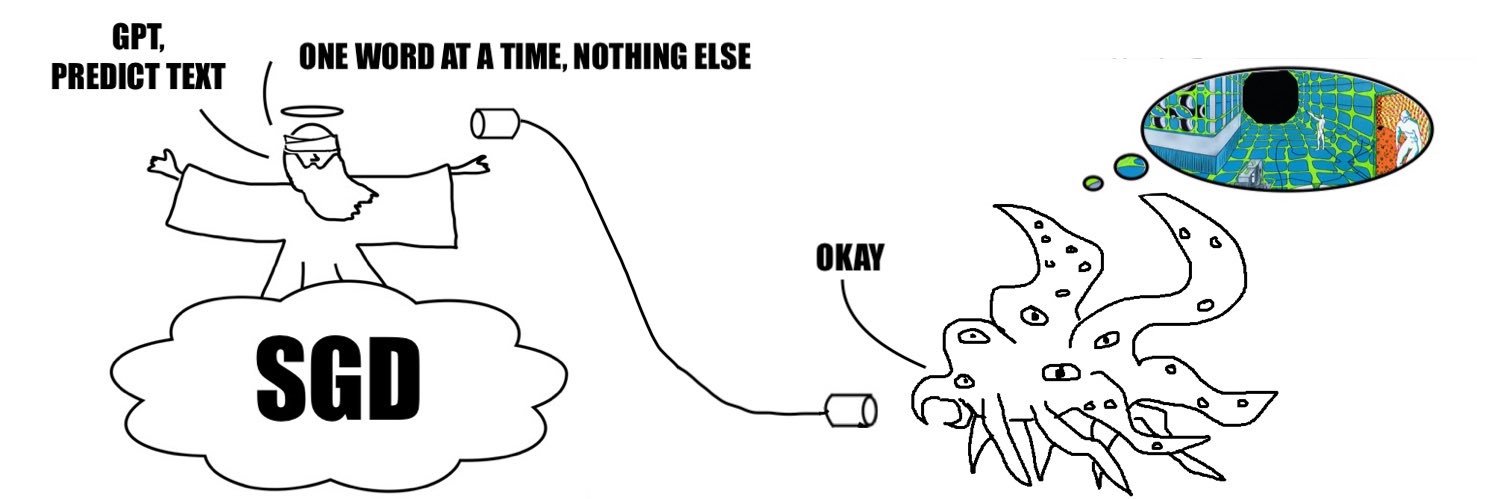

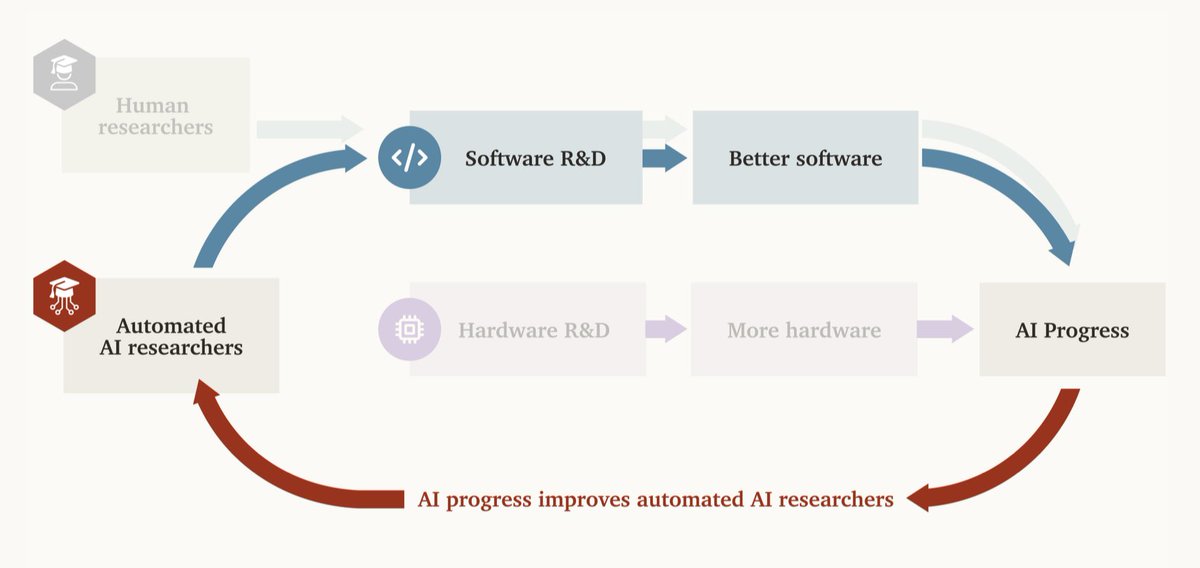

New report: “Will AI R&D Automation Cause a Software Intelligence Explosion?” As AI R&D is automated, AI progress may dramatically accelerate. Skeptics counter that hardware stock can only grow so fast. But what if software advances alone can sustain acceleration? 🧵

My parents trying to explain where I work:

Trump: "He has these think tanks. They build buildings for people that think."

Woah Zuckerberg has a $300M estate in Hawaii?! That’s like 1/3 the cost of an AI researcher!

Yes, this is a huge point! It’s not that “the only thing that matters is scale (of compute and data)” but instead “you want methods that do really well as you scale (your compute and data)”

The Bitter Lesson does not say to not bother with methods research. It says to not bother with methods that are handcrafted datapoints in disguise.

Another week, another member of Congress announcing their superintelligent AI timelines are 2028-2033:

Our job ad closes August 3rd! We're still looking for technical folks (ML, CS, EE, semiconductors) who're keen to apply their knowledge to ground policy in technical details. Also strong generalists to help me run the show! Please apply, even if you're in doubt.

My team at RAND is hiring! Technical analysis for AI policy is desperately needed. Particularly keen on ML engineers and semiconductor experts eager to shape AI policy. Also seeking excellent generalists excited to join our fast-paced, impact-oriented team. Links below.

Demis Hassabis says we need international regulation and collaboration to ensure AI is developed safely.

Really good piece about natsec planning & AGI in Foreign Affairs by researchers at RAND. I particularly liked this passage:

“Any national security strategy that fails to grapple with the potentially transformative effects of artificial general intelligence will become irrelevant,” argue Matan Chorev and Joel Predd. foreignaffairs.com/united-states/…

Starting a chat with o3 and downgrading to 4o has real “aight, just chill brainiac” energy

Starting a chat with 4o and escalating to o3 has “may I speak with your manager” energy

This isn’t just a tweet—it’s an AI-generated message!

labs be like "misalignment is fake and just caused by bad things in the training data", and then not filter out the bad things from the training data

Makes sense. Asymmetric disarmament is hardly ever a good move. And honestly, it’s probably good if leaders in AI are pragmatists that adjust to the changing reality

SCOOP: Leaked memo from Anthropic CEO Dario Amodei outlines the startup's plans to seek investment from the United Arab Emirates and Qatar. “Unfortunately, I think ‘no bad person should ever benefit from our success’ is a pretty difficult principle to run a business on.”

This is probably true but why would you want AI companies to dominate this space? Better imho for the useful analysis to come from 3rd parties. (Of course, those 3rd parties need to be informed - which is another reason to push for transparency in the industry.)

In the past year or so, all AI companies combined have published less useful analysis of AI policy than like 5-10 Substack writers (maybe even just @anton_d_leicht TBH lol) Huge failure to contribute to the public’s understanding of what’s coming + what can be done about it.

Incredible

Just got invited to take part in an expert elicitation study about generative AI's impact on cognition and education and the invitation letter had THIS GODDAMN LINE.

Eric Schmidt says he's read the AI 2027 scenario forecast about what the development of superintelligence might look like. He says the "right outcome" will be some form of deterrence and mutually assured destruction, adding that government should know where all the chips are.

Direct contributions to campaigns in close races can buy 10x more ad impressions than contributions to super PACs and committees. Give directly to candidates in close races! One simple way to find close races to give to is to look at Cook ratings: cookpolitical.com/ratings

Hard money contributions really matter a lot, especially hard money contributions from pragmatists who will tell the recipients of their largess to pander to the voters and try to win. slowboring.com/p/how-to-make-…

In case you missed it, I posted a bunch of awesome career opportunities in AI safety!

🚨💼I'm hiring! And so are many other organizations I like. If you’re looking for a career in AI policy, now is an excellent time. I've selected a list of my favorite roles and if you like my tweets you're likely the target audience!